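Hadoop series index

1. Introduction to Hadoop 3.1.4, deployment, and basic verification

2. HDFS operations: the shell client

3. Using HDFS from Java (read/write, upload, download, traversal, file search, copying a whole directory, copying files only, listing the files in a directory, deleting files and directories, getting file and directory attributes, and so on)

4. The HDFSUtil Java helper class and its JUnit tests (common HDFS operations and configuration for an HA environment)

5. WebHDFS: the RESTful HDFS API

6. HttpFS: the HDFS proxy service

7. Common file storage formats in big data and the compression algorithms Hadoop supports

8. HDFS memory storage policy support and hot/warm/cold storage

9. Deploying a Hadoop high-availability (HA) cluster and verifying it in three ways

10. Archive: a solution for small files in HDFS

11. Reading, writing, and merging Sequence Files on Hadoop

12. HDFS Trash: introduction and examples

13. HDFS Snapshot

14. HDFS transparent encryption with KMS

15. Introduction to MapReduce and wordcount

16. Basic MapReduce usage examples: custom serialization, sorting, partitioning, grouping, and topN

17. Introduction to MapReduce partitioning (Partition)

18. MapReduce counters, and reading from and writing to a database with MapReduce

19. Join operations: map side join and reduce side join

20. Introduction to MapReduce workflows

21. Reading and writing SequenceFile, MapFile, ORCFile, and ParquetFile with MapReduce

22. Writing and reading files with Gzip, Snappy, and Lzo compression in MapReduce

23. Memory and CPU allocation, scheduling, and tuning for MapReduce on YARN in a Hadoop cluster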

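This article covers HDFS storage policies and the configuration of hot/warm/cold storage.

It assumes a Hadoop cluster that is already up and running.

I. HDFS memory storage policy support

1. Introduction to LAZY PERSIST

- HDFS supports writing data to off-heap memory managed by the DataNodes.

- The DataNodes flush the in-memory data to disk asynchronously, which takes expensive disk I/O off the write path. Such writes are called Lazy Persist writes.

- The feature is available starting with Apache Hadoop 2.6.0.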

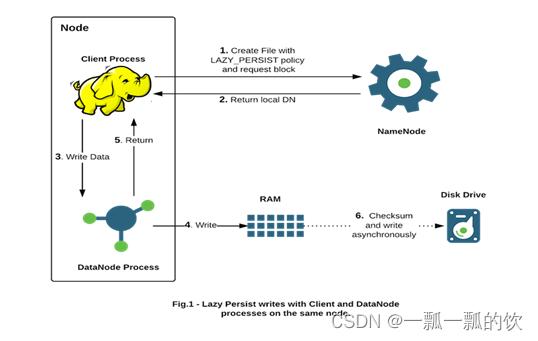

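2. LAZY PERSIST workflow

- Set the StoragePolicy of the target directory to LAZY_PERSIST, the memory storage policy.

- The client process sends a create/write request to the NameNode.

- Once the client's request reaches a specific DataNode, the DataNode writes the blocks to RAM and starts an asynchronous thread that persists the in-memory data to disk.

- Asynchronous persistence means the data is not written to disk immediately; it is handled lazily, after a delay.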
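The write path described above can be illustrated with a toy model. This is only a sketch of the write-to-memory, persist-later idea, not how the DataNode is implemented; the class `LazyPersistStore` and its two dictionaries standing in for RAM and disk are hypothetical.

```python
import queue
import threading


class LazyPersistStore:
    """Toy model: writes land in 'ram' first, a worker copies them to 'disk' later."""

    def __init__(self):
        self.ram = {}    # stands in for the RAM disk
        self.disk = {}   # stands in for persistent storage
        self._pending = queue.Queue()
        worker = threading.Thread(target=self._persist_loop, daemon=True)
        worker.start()

    def write(self, block_id, data):
        # The caller returns as soon as the block is in memory.
        self.ram[block_id] = data
        self._pending.put(block_id)

    def _persist_loop(self):
        # Background thread: persist blocks in the order they were written.
        while True:
            block_id = self._pending.get()
            self.disk[block_id] = self.ram[block_id]
            self._pending.task_done()

    def wait_until_persisted(self):
        # Block until every queued write has been copied to 'disk'.
        self._pending.join()


if __name__ == "__main__":
    store = LazyPersistStore()
    store.write("blk_1", b"hello")
    store.wait_until_persisted()
    print(store.disk["blk_1"])
```

The caller of `write` never waits on the slow medium, which is the point of the policy; the cost is that a block exists only in memory until the background copy finishes.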

3. Configuring and using LAZY PERSIST

References:

https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/MemoryStorage.html

https://blog.csdn.net/fighting_111/article/details/109304120

Steps:

1. Set up the RAM disk

# Do this on every machine in the Hadoop cluster

# Only root can run these commands

# Create the directory /mnt/dn-tmpfs/

# Mount tmpfs on /mnt/dn-tmpfs/ and cap its memory use at 2 GB

mount -t tmpfs -o size=2g tmpfs /mnt/dn-tmpfs/

# Example run

[root@server1 ~]# mkdir -p /mnt/dn-tmpfs/

[root@server1 ~]# cd /mnt/dn-tmpfs/

[root@server1 dn-tmpfs]# mount -t tmpfs -o size=2g tmpfs /mnt/dn-tmpfs/

# If HDFS runs as a non-root user (alanchan in this example), give that user ownership of the directory

chown -R alanchan:root /mnt/dn-tmpfs

2. Configure the memory storage medium

Add the RAM disk prepared above to dfs.datanode.data.dir and tag it with RAM_DISK.

# On server1, edit hdfs-site.xml

cd /usr/local/bigdata/hadoop-3.1.4/etc/hadoop

vim hdfs-site.xml

# Add the following

<property>

<name>dfs.datanode.data.dir</name>

<value>[DISK]file://${hadoop.tmp.dir}/dfs/data,[ARCHIVE]file://${hadoop.tmp.dir}/dfs/data/archive,[RAM_DISK]/mnt/dn-tmpfs</value>

</property>

# Copy the file to the other machines in the cluster

scp -r hdfs-site.xml server2:$PWD

scp -r hdfs-site.xml server3:$PWD

scp -r hdfs-site.xml server4:$PWD

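# Whether heterogeneous storage policies are enabled; defaults to true

dfs.storage.policy.enabled

# Amount of memory, in bytes, that a DataNode may use to cache block replicas in memory

# The default is 0, which disables in-memory caching. If the value is too small, fewer blocks fit in memory; if it exceeds the maximum the DataNode can sustain, some in-memory blocks are evicted directly

dfs.datanode.max.locked.memory

# This parameter is not set in this example. With it set, the DataNode failed to start with the exception below

# (1 GiB = 1073741824 bytes, so 2 GiB = 1073741824*2 bytes)

java.lang.RuntimeException: Cannot start datanode because the configured max locked memory size (dfs.datanode.max.locked.memory) of 1073741824 bytes is more than the datanode's available RLIMIT_MEMLOCK ulimit of 65536 bytes.

# The command below, found online, did not make the exception go away

# Note: in bash, ulimit -l expects a plain number in units of 1024 bytes, so the expression below is probably not a valid argument

ulimit -l 1073741824*2

# hdfs-site.xml

# 32 GB

<property>

<name>dfs.datanode.max.locked.memory</name>

<value>34359738368</value>

</property>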
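The byte values used for dfs.datanode.max.locked.memory are easy to get wrong by hand. The following is a minimal sketch for computing them, assuming binary units (1 GiB = 1024^3 bytes); the helper names `gib_to_bytes` and `bytes_to_kib` are hypothetical. The KiB conversion is included on the assumption that the memlock limit is entered in 1024-byte units, as bash's `ulimit -l` does.

```python
GIB = 1024 ** 3  # bytes in one GiB


def gib_to_bytes(gib: int) -> int:
    """Return the number of bytes in `gib` GiB."""
    return gib * GIB


def bytes_to_kib(n_bytes: int) -> int:
    """Return `n_bytes` expressed in whole KiB (1024-byte units)."""
    return n_bytes // 1024


if __name__ == "__main__":
    for size in (1, 2, 32):
        n = gib_to_bytes(size)
        print(f"{size} GiB = {n} bytes = {bytes_to_kib(n)} KiB")
```

The 65536 bytes reported in the exception correspond to a memlock limit of 64 KiB, far below any of the values above.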

下面的部分是没有设置dfs.datanode.max.locked.memory的情况下完成的

3、重启HDFS集群

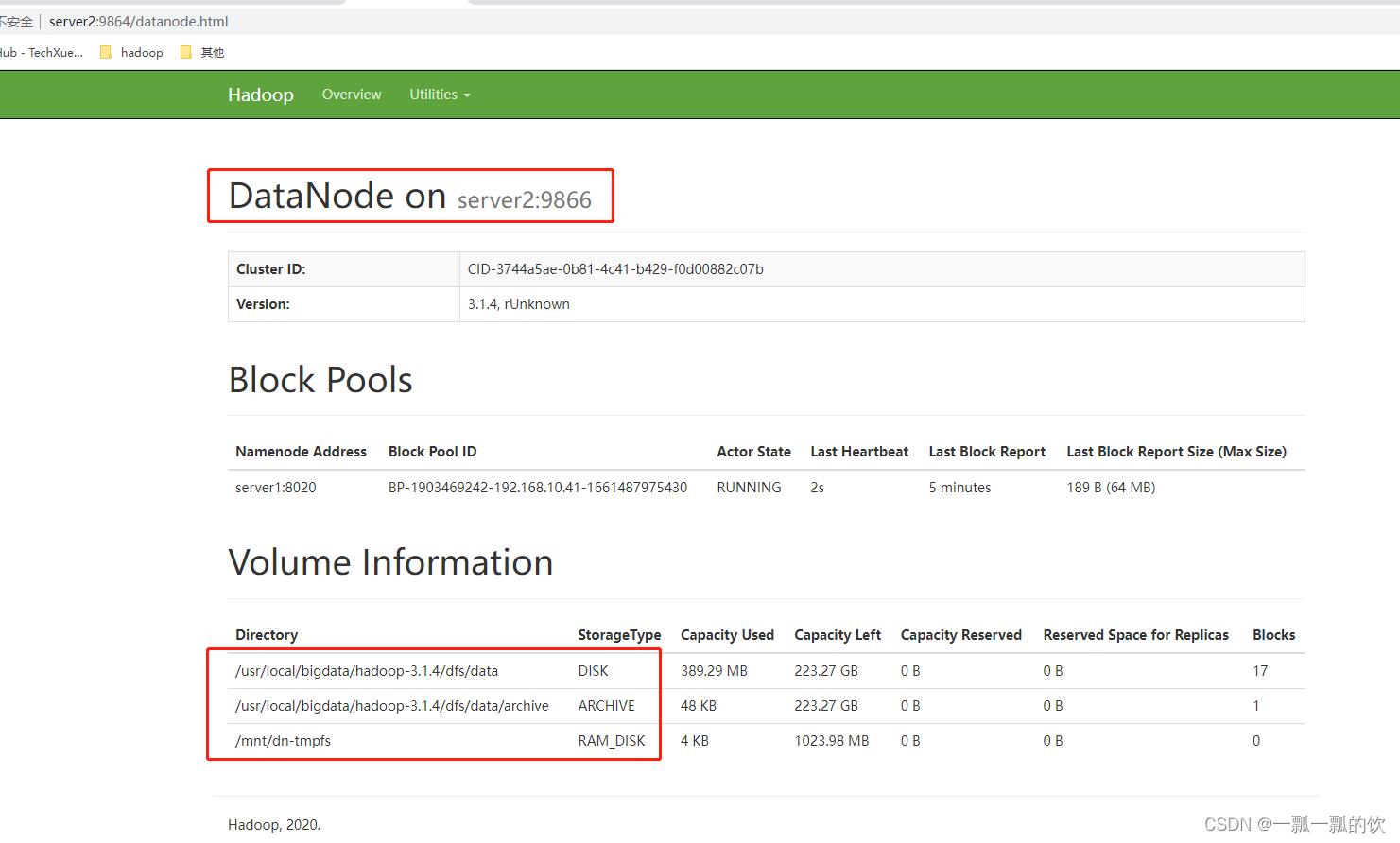

web UI查看配置结果

4、在目录上设置存储策略

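Result: the replica locations that fsck reports for the file uploaded in the steps below. All three replicas are on DISK rather than RAM_DISK, which is consistent with dfs.datanode.max.locked.memory having been left at its default of 0, although this run does not confirm the cause.

[DatanodeInfoWithStorage[192.168.10.44:9866,DS-97245afa-f1ec-4c50-93f8-0ba963e5f594,DISK],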

DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK],

DatanodeInfoWithStorage[192.168.10.43:9866,DS-189c3394-2fba-40e2-ad24-1b57785ec4d5,DISK]]

Set the memory storage policy

# Command

hdfs storagepolicies -setStoragePolicy -path <path> -policy LAZY_PERSIST

# 1. Create the directory that will hold the data

hdfs dfs -mkdir -p /hdfs-test/data_phase/ram

# 2. Set the storage policy of /hdfs-test/data_phase/ram

hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/ram -policy LAZY_PERSIST

# 3. Check the storage policy of /hdfs-test/data_phase/ram

hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/ram

# 4. Upload a file to /hdfs-test/data_phase/ram

hdfs dfs -put /usr/local/tools/caskey /hdfs-test/data_phase/ram

# 5. Check where the replicas of the uploaded file were stored

hdfs fsck /hdfs-test/data_phase/ram/caskey -files -blocks -locations

# Example run

[alanchan@server4 root]$ hdfs dfs -mkdir -p /hdfs-test/data_phase/ram

[alanchan@server4 root]$ hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/ram -policy LAZY_PERSIST

Set storage policy LAZY_PERSIST on /hdfs-test/data_phase/ram

[alanchan@server4 root]$ hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/ram

The storage policy of /hdfs-test/data_phase/ram:

BlockStoragePolicy{LAZY_PERSIST:15, storageTypes=[RAM_DISK, DISK], creationFallbacks=[DISK], replicationFallbacks=[DISK]}

[alanchan@server1 root]$ hdfs dfs -put /usr/local/tools/caskey /hdfs-test/data_phase/ram

[alanchan@server1 root]$ hdfs fsck /hdfs-test/data_phase/ram/caskey -files -blocks -locations

Connecting to namenode via http://server1:9870/fsck?ugi=alanchan&files=1&blocks=1&locations=1&path=%2Fhdfs-test%2Fdata_phase%2Fram%2Fcaskey

FSCK started by alanchan (auth:SIMPLE) from /192.168.10.41 for path /hdfs-test/data_phase/ram/caskey at Fri Sep 02 15:04:20 CST 2022

/hdfs-test/data_phase/ram/caskey 2204 bytes, replicated: replication=3, 1 block(s): OK

0. BP-1903469242-192.168.10.41-1661487975430:blk_1073742713_1925 len=2204 Live_repl=3 [DatanodeInfoWithStorage[192.168.10.44:9866,DS-97245afa-f1ec-4c50-93f8-0ba963e5f594,DISK], DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK], DatanodeInfoWithStorage[192.168.10.43:9866,DS-189c3394-2fba-40e2-ad24-1b57785ec4d5,DISK]]

Status: HEALTHY

Number of data-nodes: 3

Number of racks: 1

Total dirs: 0

Total symlinks: 0

Replicated Blocks:

Total size: 2204 B

Total files: 1

Total blocks (validated): 1 (avg. block size 2204 B)

Minimally replicated blocks: 1 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 3.0

Missing blocks: 0

Corrupt blocks: 0

Missing replicas: 0 (0.0 %)

Erasure Coded Block Groups:

Total size: 0 B

Total files: 0

Total block groups (validated): 0

Minimally erasure-coded block groups: 0

Over-erasure-coded block groups: 0

Under-erasure-coded block groups: 0

Unsatisfactory placement block groups: 0

Average block group size: 0.0

Missing block groups: 0

Corrupt block groups: 0

Missing internal blocks: 0

FSCK ended at Fri Sep 02 15:04:20 CST 2022 in 3 milliseconds

The filesystem under path '/hdfs-test/data_phase/ram/caskey' is HEALTHY
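Reading the storage type of each replica out of a long fsck report by eye is error-prone. The sketch below, assuming the `DatanodeInfoWithStorage[host:port,storage-id,TYPE]` layout seen in the output above, counts replicas per storage type; the function name `count_storage_types` is hypothetical.

```python
import re
from collections import Counter

# Captures the storage type, the last comma-separated field of each
# DatanodeInfoWithStorage[...] entry.
_STORAGE_RE = re.compile(r"DatanodeInfoWithStorage\[[^\]]*?,([A-Z_]+)\]")


def count_storage_types(fsck_text: str) -> Counter:
    """Count replicas per storage type in fsck -locations output."""
    return Counter(_STORAGE_RE.findall(fsck_text))


if __name__ == "__main__":
    # Block line copied from the fsck run above.
    sample = (
        "0. BP-1903469242-192.168.10.41-1661487975430:blk_1073742713_1925 "
        "len=2204 Live_repl=3 "
        "[DatanodeInfoWithStorage[192.168.10.44:9866,DS-97245afa-f1ec-4c50-93f8-0ba963e5f594,DISK], "
        "DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK], "
        "DatanodeInfoWithStorage[192.168.10.43:9866,DS-189c3394-2fba-40e2-ad24-1b57785ec4d5,DISK]]"
    )
    print(dict(count_storage_types(sample)))
```

For the run above this reports three DISK replicas and no RAM_DISK replica, so the file was not held in memory in this setup.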

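II. Configuring hot/warm/cold storage

- "Hot" data: newly produced data that applications use heavily.

- "Warm" data: data whose access frequency drops over time, for example to a few accesses per week.

- "Cold" data: data whose usage falls even further over the following weeks, months, and years.

How data is classified depends mainly on the business scenario; age is usually an important criterion.

Hadoop lets you place data that is no longer hot or active on cheaper storage for archiving or cold storage. Storage policies can be set to move older data from expensive high-performance storage to cheaper, lower-performance devices.

Hadoop 2.5 and later support storage policies, under which HDFS data can be stored not only on the default conventional disks but also on SSDs.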

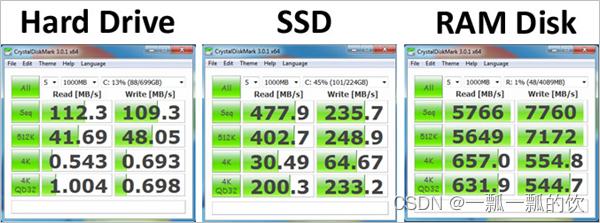

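Hot/warm/cold storage is a feature introduced in Hadoop 2.6.0 that lets you choose a storage medium according to its read/write characteristics. Cold data can go on high-capacity media with modest read/write performance, such as mechanical hard disks, while hot data can go on SSDs; the two differ widely in read/write speed. Heterogeneous storage lets different files be kept on different media so that the hardware is used to best effect.

The figure below compares the performance of the different media.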

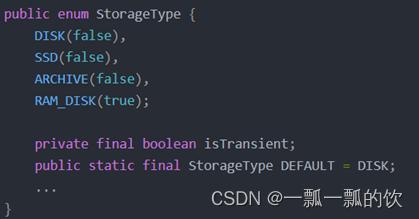

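1. HDFS storage types

HDFS defines four storage types:

- RAM_DISK (memory)

- SSD (solid-state drive)

- DISK (mechanical hard disk), the default

- ARCHIVE (high-density storage media for archived, historical data)

In the storage type definition, the true/false flag says whether a type is transient, meaning non-persistent; only the memory type is transient.

The storage type has to be declared explicitly in the configuration, because HDFS cannot detect it automatically.

Configuration parameter: dfs.datanode.data.dir = [SSD]file:///grid/dn/ssd0

A directory that carries none of the four tags [SSD], [DISK], [ARCHIVE], or [RAM_DISK] is treated as DISK.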
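The tagging rule can be made concrete with a small parser. This is a sketch of the rule as described here, not the DataNode's own parsing code; the function name `parse_data_dirs` and the untagged path `/data/plain` are hypothetical.

```python
import re

# A leading [TAG] marks the storage type; everything after it is the location.
_TAG_RE = re.compile(r"^\[(\w+)\](.*)$")


def parse_data_dirs(value: str):
    """Split a dfs.datanode.data.dir value into (storage_type, location) pairs.

    Entries without a leading [TAG] are treated as DISK.
    """
    result = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        match = _TAG_RE.match(entry)
        if match:
            result.append((match.group(1), match.group(2)))
        else:
            result.append(("DISK", entry))
    return result


if __name__ == "__main__":
    value = (
        "[DISK]file://${hadoop.tmp.dir}/dfs/data,"
        "[ARCHIVE]file://${hadoop.tmp.dir}/dfs/data/archive,"
        "[RAM_DISK]/mnt/dn-tmpfs,"
        "/data/plain"  # hypothetical untagged entry
    )
    for storage_type, location in parse_data_dirs(value):
        print(storage_type, location)
```

Running a value through a check like this before restarting the DataNodes makes a missing or misspelled tag visible, since such an entry silently ends up as DISK.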

2. Block storage policies

Block storage here refers to how the replicas of an HDFS file's blocks are stored.

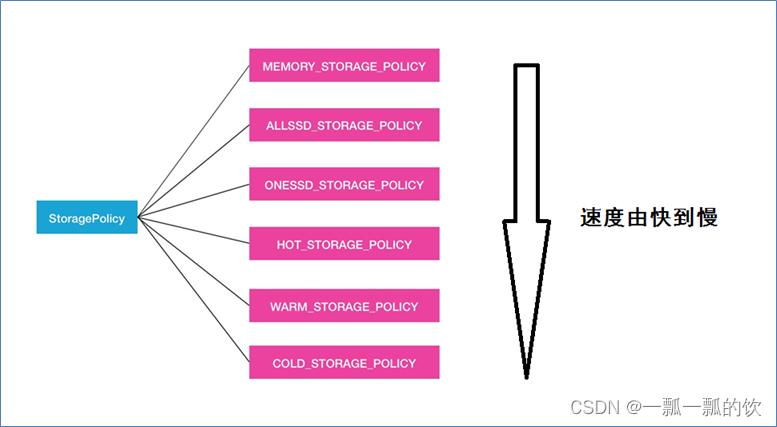

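For storage media, the HDFS class BlockStoragePolicySuite defines six policies:

HOT (the default policy)

COLD

WARM

ALL_SSD

ONE_SSD

LAZY_PERSIST

The first three are distinguished by how hot the data is, the last three by the kind of storage medium.

- HOT: for both storage and compute. Data that is popular and still being processed stays under this policy. All replicas are stored on DISK.

- COLD: for storage only, with limited compute. Data that is no longer in use, or that needs archiving, is moved from hot storage to cold storage. All replicas are stored on ARCHIVE.

- WARM: partly hot and partly cold. When a block is warm, some of its replicas are stored on DISK and the rest on ARCHIVE.

- ALL_SSD: all replicas are stored on SSD.

- ONE_SSD: one replica is stored on SSD and the rest on DISK.

- LAZY_PERSIST: for writing blocks with a single replica in memory. The replica is first written to RAM_DISK and then lazily persisted to DISK.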
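The policy descriptions above can be summarised as a nominal replica layout. The sketch below assumes that, for the mixed policies, exactly one replica goes to the first storage type and the remaining replicas go to the second; it ignores fallbacks, so the placement HDFS actually chooses can differ. The function name `expected_placement` is hypothetical.

```python
from collections import Counter

# (storage type of the first replica, storage type of the remaining replicas)
_LAYOUT = {
    "HOT": ("DISK", "DISK"),
    "COLD": ("ARCHIVE", "ARCHIVE"),
    "WARM": ("DISK", "ARCHIVE"),
    "ALL_SSD": ("SSD", "SSD"),
    "ONE_SSD": ("SSD", "DISK"),
    "LAZY_PERSIST": ("RAM_DISK", "DISK"),
}


def expected_placement(policy: str, replication: int) -> dict:
    """Return the nominal number of replicas per storage type (fallbacks ignored)."""
    if replication < 1:
        raise ValueError("replication must be at least 1")
    first, rest = _LAYOUT[policy.upper()]
    counts = Counter({first: 1})
    counts[rest] += replication - 1
    # Drop storage types that end up with no replica (replication == 1).
    return {storage: n for storage, n in counts.items() if n > 0}


if __name__ == "__main__":
    for name in _LAYOUT:
        print(name, expected_placement(name, 3))
```

With a replication factor of 3, WARM comes out as one DISK replica and two ARCHIVE replicas, and COLD as three ARCHIVE replicas.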

3. Block storage policies: commands

1. Set a storage policy

hdfs storagepolicies -setStoragePolicy -path <path> -policy <policy>

# path: the path of the directory or file

# policy: the name of the storage policy

2. List the storage policies

# Command

hdfs storagepolicies -listPolicies

# Example

[alanchan@server1 sbin]$ hdfs storagepolicies -listPolicies

Block Storage Policies:

BlockStoragePolicy{PROVIDED:1, storageTypes=[PROVIDED, DISK], creationFallbacks=[PROVIDED, DISK], replicationFallbacks=[PROVIDED, DISK]}

BlockStoragePolicy{COLD:2, storageTypes=[ARCHIVE], creationFallbacks=[], replicationFallbacks=[]}

BlockStoragePolicy{WARM:5, storageTypes=[DISK, ARCHIVE], creationFallbacks=[DISK, ARCHIVE], replicationFallbacks=[DISK, ARCHIVE]}

BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}

BlockStoragePolicy{ONE_SSD:10, storageTypes=[SSD, DISK], creationFallbacks=[SSD, DISK], replicationFallbacks=[SSD, DISK]}

BlockStoragePolicy{ALL_SSD:12, storageTypes=[SSD], creationFallbacks=[DISK], replicationFallbacks=[DISK]}

BlockStoragePolicy{LAZY_PERSIST:15, storageTypes=[RAM_DISK, DISK], creationFallbacks=[DISK], replicationFallbacks=[DISK]}

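3. Unset a storage policy

After the unset command runs, the path takes the storage policy of its nearest ancestor that has one; if no ancestor has a policy, the default storage policy applies.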

hdfs storagepolicies -unsetStoragePolicy -path <path>
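The nearest-ancestor rule can be modelled in a few lines. This is a simplified sketch of the lookup, not the NameNode's implementation; it assumes HOT as the default policy, as stated earlier in this article, and the function name `effective_policy` and the WARM setting on /hdfs-test/data_phase are hypothetical.

```python
import posixpath

DEFAULT_POLICY = "HOT"  # the default policy named in this article


def effective_policy(path: str, explicit: dict) -> str:
    """Return the policy of `path` or of its nearest ancestor that has one.

    `explicit` maps absolute paths to the policy set directly on them.
    """
    if not path.startswith("/"):
        raise ValueError("path must be absolute")
    current = posixpath.normpath("/" + path.lstrip("/"))
    while True:
        if current in explicit:
            return explicit[current]
        if current == "/":
            return DEFAULT_POLICY
        current = posixpath.dirname(current)


if __name__ == "__main__":
    policies = {
        "/hdfs-test/data_phase": "WARM",       # hypothetical parent setting
        "/hdfs-test/data_phase/cold": "COLD",
    }
    target = "/hdfs-test/data_phase/cold/caskey"
    print(effective_policy(target, policies))

    # Model -unsetStoragePolicy on the cold directory.
    del policies["/hdfs-test/data_phase/cold"]
    print(effective_policy(target, policies))
```

In this model, removing the entry for the cold directory makes the file fall back to the parent's WARM policy, and removing every entry leaves it on the default.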

4. Hot/warm/cold data storage policies (example)

The data is stored in three phases, hot, warm, and cold, as follows:

- Hot data directory: /hdfs-test/data_phase/hot

- Warm data directory: /hdfs-test/data_phase/warm

- Cold data directory: /hdfs-test/data_phase/cold

The configuration steps are:

1. Configure the DataNode storage directories and declare their storage media types in hdfs-site.xml

# On server1, go to the directory that holds hdfs-site.xml

cd /usr/local/bigdata/hadoop-3.1.4/etc/hadoop

vim hdfs-site.xml

# Add the following

<property>

<name>dfs.datanode.data.dir</name>

<value>[DISK]file://${hadoop.tmp.dir}/dfs/data,[ARCHIVE]file://${hadoop.tmp.dir}/dfs/data/archive</value>

</property>

# Copy the file to the other machines in the cluster

scp -r hdfs-site.xml server2:$PWD

scp -r hdfs-site.xml server3:$PWD

scp -r hdfs-site.xml server4:$PWD

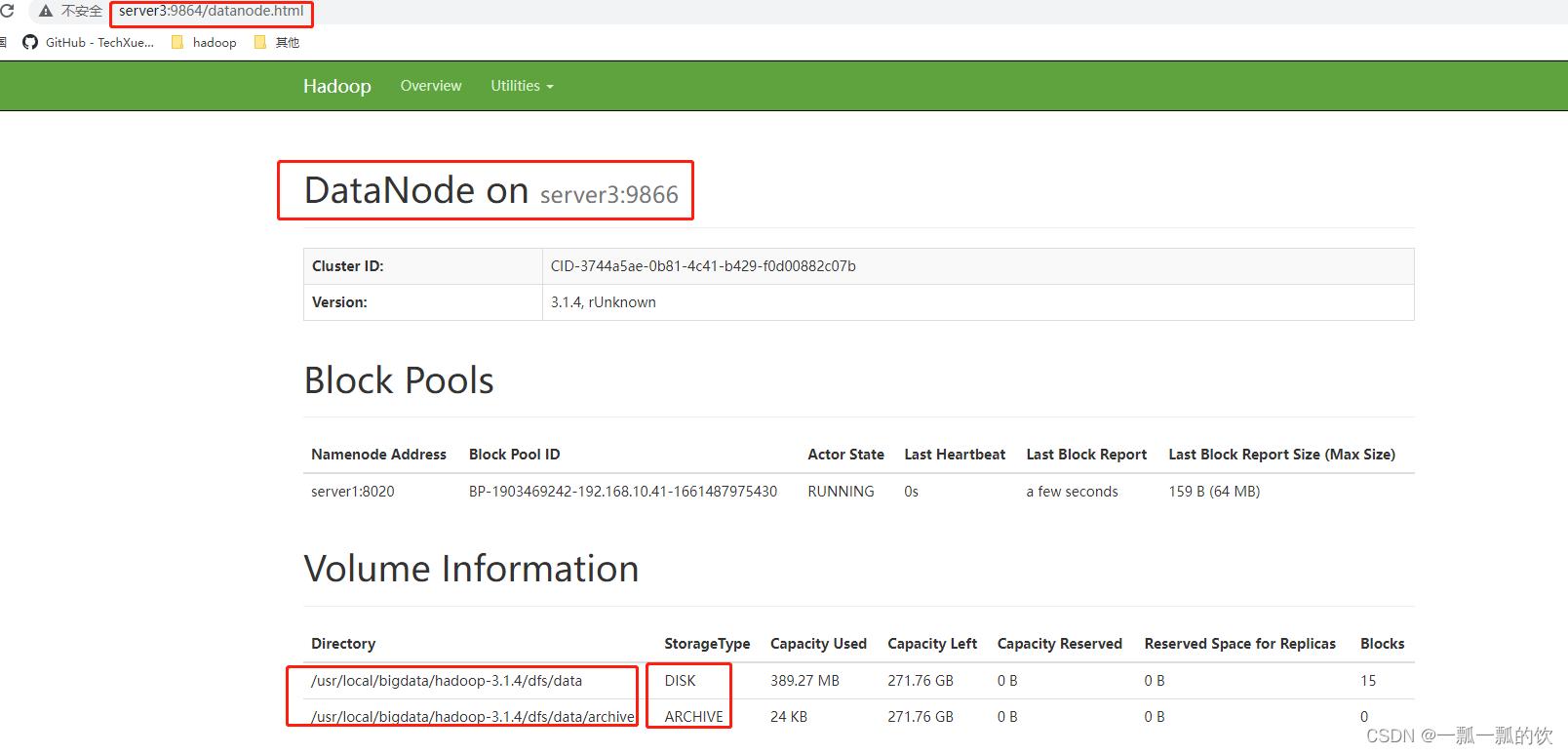

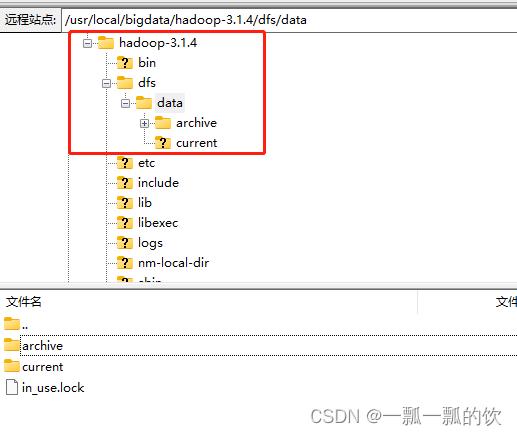

2、重启HDFS集群,验证配置

点击任意一个datanode进入下面的页面

服务器上实际的目录结构

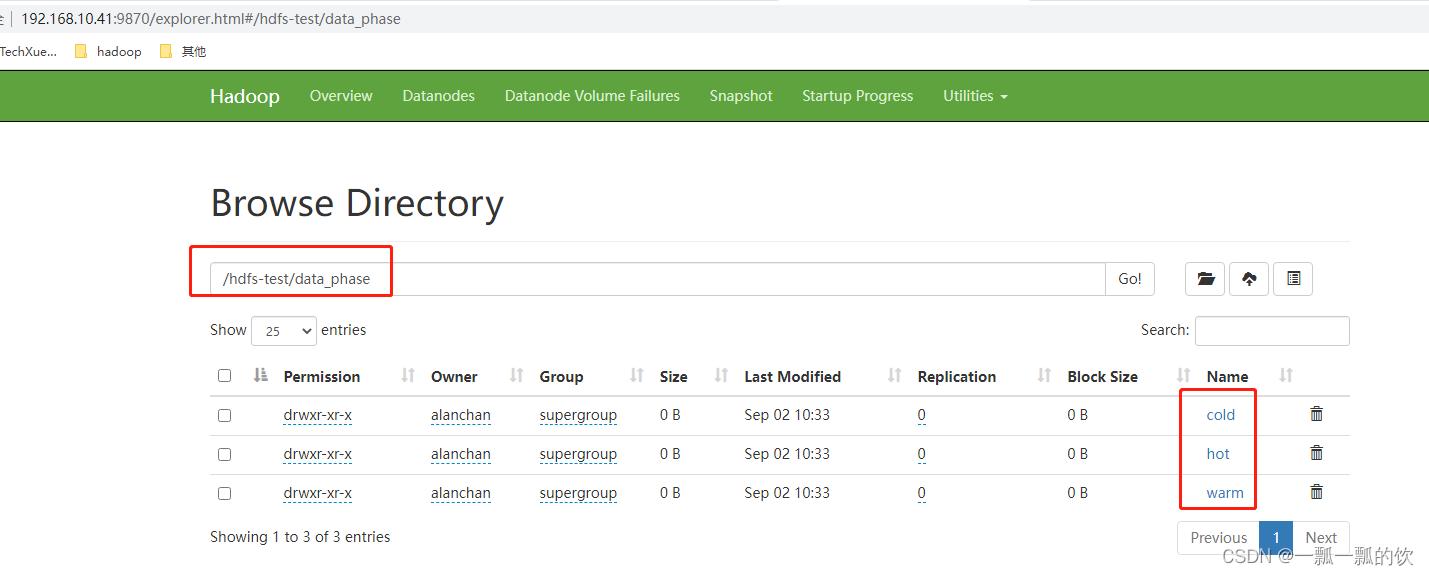

3、创建需求中的目录结构

- 热数据存储目录:/hdfs-test/data_phase/hot

- 温数据存储目录:/hdfs-test/data_phase/warm

- 冷数据存储目录:/hdfs-test/data_phase/cold

# 在任一台集群中的机器上执行

hdfs dfs -mkdir -p /hdfs-test/data_phase/hot

hdfs dfs -mkdir -p /hdfs-test/data_phase/warm

hdfs dfs -mkdir -p /hdfs-test/data_phase/cold

# 也可以通过web UI 创建对应的目录

Once created, the directory structure looks as shown in the figure below.

4. Set the storage policy on each of the three directories

# Run on any machine in the cluster

hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/hot -policy HOT

hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/warm -policy WARM

hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/cold -policy COLD

# Actual run

[alanchan@server1 hadoop]$ hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/hot -policy HOT

Set storage policy HOT on /hdfs-test/data_phase/hot

[alanchan@server1 hadoop]$ hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/warm -policy WARM

Set storage policy WARM on /hdfs-test/data_phase/warm

[alanchan@server1 hadoop]$ hdfs storagepolicies -setStoragePolicy -path /hdfs-test/data_phase/cold -policy COLD

Set storage policy COLD on /hdfs-test/data_phase/cold

5. Check the storage policy of the three directories

# Run on any machine in the cluster

hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/hot

hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/warm

hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/cold

# Actual run

[alanchan@server1 hadoop]$ hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/hot

The storage policy of /hdfs-test/data_phase/hot:

BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}

[alanchan@server1 hadoop]$ hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/warm

The storage policy of /hdfs-test/data_phase/warm:

BlockStoragePolicy{WARM:5, storageTypes=[DISK, ARCHIVE], creationFallbacks=[DISK, ARCHIVE], replicationFallbacks=[DISK, ARCHIVE]}

[alanchan@server1 hadoop]$ hdfs storagepolicies -getStoragePolicy -path /hdfs-test/data_phase/cold

The storage policy of /hdfs-test/data_phase/cold:

BlockStoragePolicy{COLD:2, storageTypes=[ARCHIVE], creationFallbacks=[], replicationFallbacks=[]}

6. Upload files to verify the storage policies

# Run on any machine in the cluster

hdfs dfs -put /usr/local/tools/caskey /hdfs-test/data_phase/hot

hdfs dfs -put /usr/local/tools/caskey /hdfs-test/data_phase/warm

hdfs dfs -put /usr/local/tools/caskey /hdfs-test/data_phase/cold

# Listing after the upload

[alanchan@server1 sbin]$ hadoop fs -ls -R /hdfs-test/data_phase

drwxr-xr-x - alanchan supergroup 0 2022-09-02 10:45 /hdfs-test/data_phase/cold

-rw-r--r-- 3 alanchan supergroup 2204 2022-09-02 10:45 /hdfs-test/data_phase/cold/caskey

drwxr-xr-x - alanchan supergroup 0 2022-09-02 10:45 /hdfs-test/data_phase/hot

-rw-r--r-- 3 alanchan supergroup 2204 2022-09-02 10:45 /hdfs-test/data_phase/hot/caskey

drwxr-xr-x - alanchan supergroup 0 2022-09-02 10:45 /hdfs-test/data_phase/warm

-rw-r--r-- 3 alanchan supergroup 2204 2022-09-02 10:45 /hdfs-test/data_phase/warm/caskey

# The test file can also be uploaded through the web UI

7. Check the block locations of the files under each storage policy

# Storage types of the DataNode replicas holding the hot file

hdfs fsck /hdfs-test/data_phase/hot/caskey -files -blocks -locations

[DatanodeInfoWithStorage[192.168.10.43:9866,DS-189c3394-2fba-40e2-ad24-1b57785ec4d5,DISK],

DatanodeInfoWithStorage[192.168.10.44:9866,DS-97245afa-f1ec-4c50-93f8-0ba963e5f594,DISK],

DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK]]

# Storage types of the DataNode replicas holding the warm file

hdfs fsck /hdfs-test/data_phase/warm/caskey -files -blocks -locations

[DatanodeInfoWithStorage[192.168.10.44:9866,DS-4b2f3768-6b77-4d2e-9cf5-00c6647613e1,ARCHIVE],

DatanodeInfoWithStorage[192.168.10.43:9866,DS-5d9436ad-4b1a-4b0a-b4ee-989e4f76dbe5,ARCHIVE],

DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK]]

# Storage types of the DataNode replicas holding the cold file

hdfs fsck /hdfs-test/data_phase/cold/caskey -files -blocks -locations

[DatanodeInfoWithStorage[192.168.10.42:9866,DS-57f4c7be-2462-4bdb-a2a9-cc703bfd03e4,ARCHIVE],

DatanodeInfoWithStorage[192.168.10.43:9866,DS-5d9436ad-4b1a-4b0a-b4ee-989e4f76dbe5,ARCHIVE],

DatanodeInfoWithStorage[192.168.10.44:9866,DS-4b2f3768-6b77-4d2e-9cf5-00c6647613e1,ARCHIVE]]

8. Full fsck output for reference

hdfs fsck /hdfs-test/data_phase/hot/caskey -files -blocks -locations

hdfs fsck /hdfs-test/data_phase/warm/caskey -files -blocks -locations

hdfs fsck /hdfs-test/data_phase/cold/caskey -files -blocks -locations

[alanchan@server1 sbin]$ hdfs fsck /hdfs-test/data_phase/hot/caskey -files -blocks -locations

Connecting to namenode via http://server1:9870/fsck?ugi=alanchan&files=1&blocks=1&locations=1&path=%2Fhdfs-test%2Fdata_phase%2Fhot%2Fcaskey

FSCK started by alanchan (auth:SIMPLE) from /192.168.10.41 for path /hdfs-test/data_phase/hot/caskey at Fri Sep 02 10:48:29 CST 2022

/hdfs-test/data_phase/hot/caskey 2204 bytes, replicated: replication=3, 1 block(s): OK

0. BP-1903469242-192.168.10.41-1661487975430:blk_1073742710_1922 len=2204 Live_repl=3 [DatanodeInfoWithStorage[192.168.10.43:9866,DS-189c3394-2fba-40e2-ad24-1b57785ec4d5,DISK], DatanodeInfoWithStorage[192.168.10.44:9866,DS-97245afa-f1ec-4c50-93f8-0ba963e5f594,DISK], DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK]]

Status: HEALTHY

Number of data-nodes: 3

Number of racks: 1

Total dirs: 0

Total symlinks: 0

Replicated Blocks:

Total size: 2204 B

Total files: 1

Total blocks (validated): 1 (avg. block size 2204 B)

Minimally replicated blocks: 1 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 3.0

Missing blocks: 0

Corrupt blocks: 0

Missing replicas: 0 (0.0 %)

Erasure Coded Block Groups:

Total size: 0 B

Total files: 0

Total block groups (validated): 0

Minimally erasure-coded block groups: 0

Over-erasure-coded block groups: 0

Under-erasure-coded block groups: 0

Unsatisfactory placement block groups: 0

Average block group size: 0.0

Missing block groups: 0

Corrupt block groups: 0

Missing internal blocks: 0

FSCK ended at Fri Sep 02 10:48:29 CST 2022 in 4 milliseconds

The filesystem under path '/hdfs-test/data_phase/hot/caskey' is HEALTHY

[alanchan@server1 sbin]$ hdfs fsck /hdfs-test/data_phase/warm/caskey -files -blocks -locations

Connecting to namenode via http://server1:9870/fsck?ugi=alanchan&files=1&blocks=1&locations=1&path=%2Fhdfs-test%2Fdata_phase%2Fwarm%2Fcaskey

FSCK started by alanchan (auth:SIMPLE) from /192.168.10.41 for path /hdfs-test/data_phase/warm/caskey at Fri Sep 02 10:50:43 CST 2022

/hdfs-test/data_phase/warm/caskey 2204 bytes, replicated: replication=3, 1 block(s): OK

0. BP-1903469242-192.168.10.41-1661487975430:blk_1073742711_1923 len=2204 Live_repl=3 [DatanodeInfoWithStorage[192.168.10.44:9866,DS-4b2f3768-6b77-4d2e-9cf5-00c6647613e1,ARCHIVE], DatanodeInfoWithStorage[192.168.10.43:9866,DS-5d9436ad-4b1a-4b0a-b4ee-989e4f76dbe5,ARCHIVE], DatanodeInfoWithStorage[192.168.10.42:9866,DS-a551a688-b8f7-4b0c-b536-13032e26846f,DISK]]

Status: HEALTHY

Number of data-nodes: 3

Number of racks: 1

Total dirs: 0

Total symlinks: 0

Replicated Blocks:

Total size: 2204 B

Total files: 1

Total blocks (validated): 1 (avg. block size 2204 B)

Minimally replicated blocks: 1 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 3.0

Missing blocks: 0

Corrupt blocks: 0

Missing replicas: 0 (0.0 %)

Erasure Coded Block Groups:

Total size: 0 B

Total files: 0

Total block groups (validated): 0

Minimally erasure-coded block groups: 0

Over-erasure-coded block groups: 0

Under-erasure-coded block groups: 0

Unsatisfactory placement block groups: 0

Average block group size: 0.0

Missing block groups: 0

Corrupt block groups: 0

Missing internal blocks: 0

FSCK ended at Fri Sep 02 10:50:43 CST 2022 in 1 milliseconds

The filesystem under path '/hdfs-test/data_phase/warm/caskey' is HEALTHY

[alanchan@server1 sbin]$ hdfs fsck /hdfs-test/data_phase/cold/caskey -files -blocks -locations

Connecting to namenode via http://server1:9870/fsck?ugi=alanchan&files=1&blocks=1&locations=1&path=%2Fhdfs-test%2Fdata_phase%2Fcold%2Fcaskey

FSCK started by alanchan (auth:SIMPLE) from /192.168.10.41 for path /hdfs-test/data_phase/cold/caskey at Fri Sep 02 10:51:20 CST 2022

/hdfs-test/data_phase/cold/caskey 2204 bytes, replicated: replication=3, 1 block(s): OK

0. BP-1903469242-192.168.10.41-1661487975430:blk_1073742712_1924 len=2204 Live_repl=3 [DatanodeInfoWithStorage[192.168.10.42:9866,DS-57f4c7be-2462-4bdb-a2a9-cc703bfd03e4,ARCHIVE], DatanodeInfoWithStorage[192.168.10.43:9866,DS-5d9436ad-4b1a-4b0a-b4ee-989e4f76dbe5,ARCHIVE], DatanodeInfoWithStorage[192.168.10.44:9866,DS-4b2f3768-6b77-4d2e-9cf5-00c6647613e1,ARCHIVE]]

Status: HEALTHY

Number of data-nodes: 3

Number of racks: 1

Total dirs: 0

Total symlinks: 0

Replicated Blocks:

Total size: 2204 B

Total files: 1

Total blocks (validated): 1 (avg. block size 2204 B)

Minimally replicated blocks: 1 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 3.0

Missing blocks: 0

Corrupt blocks: 0

Missing replicas: 0 (0.0 %)

Erasure Coded Block Groups:

Total size: 0 B

Total files: 0

Total block groups (validated): 0

Minimally erasure-coded block groups: 0

Over-erasure-coded block groups: 0

Under-erasure-coded block groups: 0

Unsatisfactory placement block groups: 0

Average block group size: 0.0

Missing block groups: 0

Corrupt block groups: 0

Missing internal blocks: 0

FSCK ended at Fri Sep 02 10:51:20 CST 2022 in 1 milliseconds

The filesystem under path '/hdfs-test/data_phase/cold/caskey' is HEALTHY
