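Versions: VS2019, Kinect 3.0, camera Sensor SDK 1.4.0, OpenCV 3.4.1, PCL 1.10.1

Reference articles:

Capturing an object's RGB image, depth image, infrared (IR) image, and point cloud data with the Azure Kinect SDK and saving them locally

RGB-D camera (Azure Kinect DK): acquiring, registering, and saving RGB and depth images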

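Project configuration

Installing OpenCV and PCL

Configuring PCL in VS2019 (Windows)

Configuring OpenCV in VS2019 (Windows)

Installing the camera

1. Connect the camera to the computer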

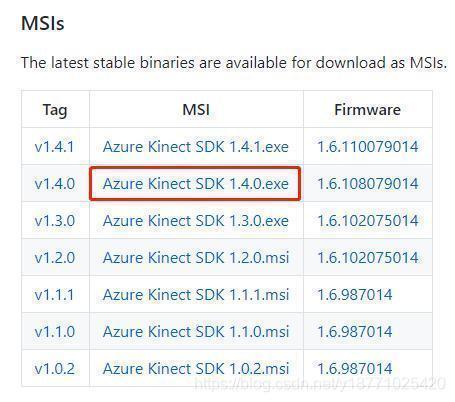

2. Download and install the Sensor SDK

Download link: http://iyenn.com/index/link?url=https://github.com/microsoft/Azure-Kinect-Sensor-SDK/blob/develop/docs/usage.md

Once the download finishes, run the installer.

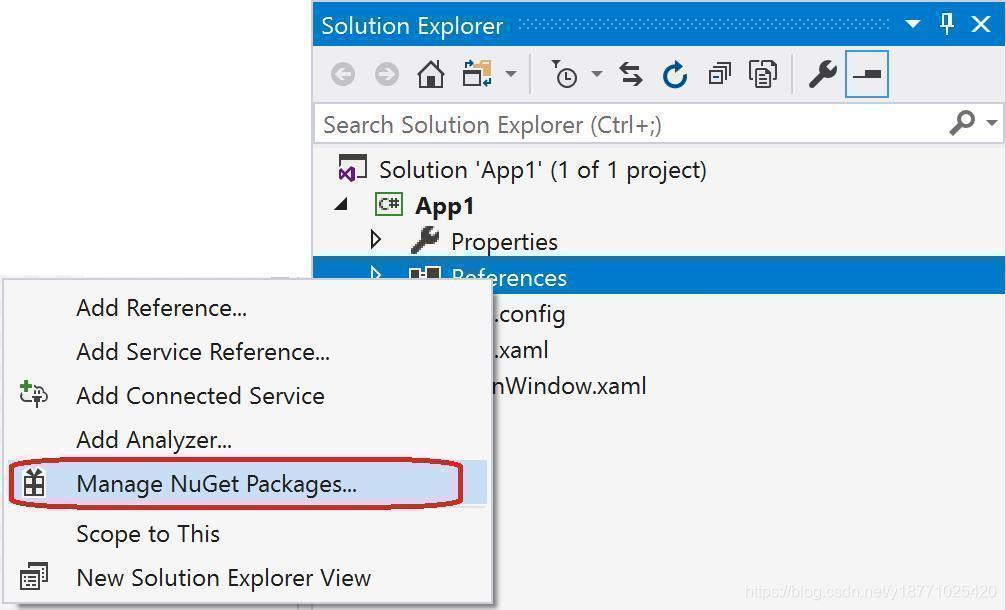

Adding the SDK to the VS project

1. Right-click References in the project and choose Manage NuGet Packages

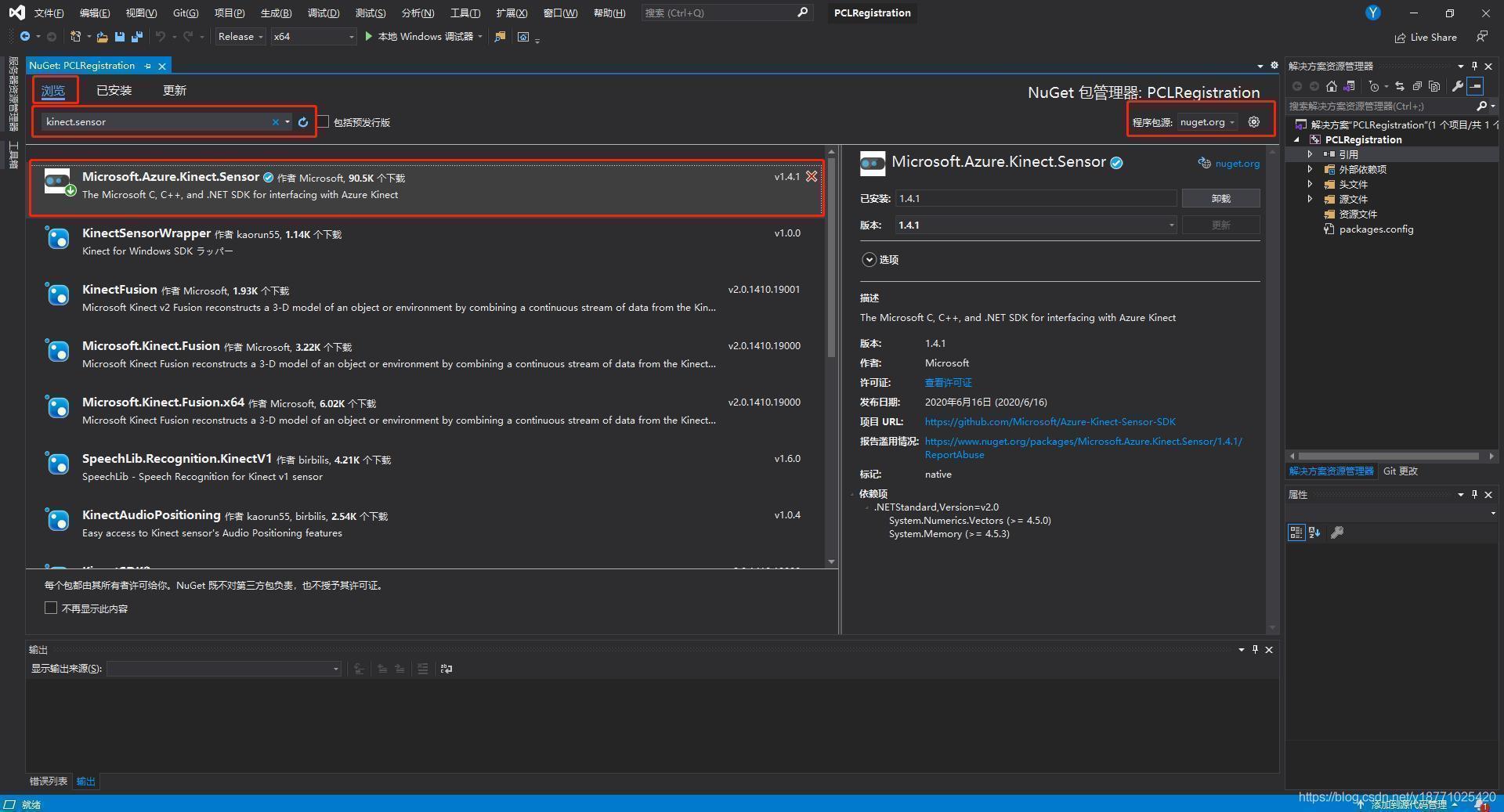

2. On the Browse tab, select the package shown in the figure below, then download and install it

3. Add the header file to the project

#include <k4a/k4a.hpp> // C++ wrapper that provides k4a::device, k4a::capture, and k4a::image used in the code below

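Capturing and saving RGB, depth, and IR images

Before running the program, create three folders named rgb, depth, and ir in the program's directory; otherwise the images cannot be saved.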
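Instead of creating the folders by hand, the program can create them at startup. The following is a minimal sketch, assuming a C++17 compiler (for `std::filesystem`); the helper name `ensureOutputFolders` is hypothetical and not part of the original code.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Create the rgb, depth, and ir folders under `root` if they are missing.
// Returns the three folder paths in that order.
std::vector<fs::path> ensureOutputFolders(const fs::path& root)
{
    assert(!root.empty());
    const std::vector<std::string> names{ "rgb", "depth", "ir" };
    std::vector<fs::path> folders;
    for (const std::string& name : names) {
        const fs::path dir = root / name;
        fs::create_directories(dir); // does nothing when the folder already exists
        folders.push_back(dir);
    }
    return folders;
}
```

Calling `ensureOutputFolders(".")` once before the capture loop would cover the three folders mentioned above.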

Code

// C++

#include

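Point cloud fusion

To generate a usable point cloud, the depth values must be normalized when the depth image is converted into 3D points.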
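One common reading of this normalization is to divide each raw depth value by a scale factor so it is expressed in the desired unit, and then back-project the pixel with the pinhole model: z = d / scale, x = (u - cx) * z / fx, y = (v - cy) * z / fy. The sketch below illustrates that idea only. The `Intrinsics` struct, its field order, and `backProject` are hypothetical names and are not the contents of slamBase.h.

```cpp
#include <cassert>
#include <cstdint>

// Pinhole intrinsics; the field order is an assumption made for this sketch.
struct Intrinsics {
    double fx, fy; // focal lengths in pixels
    double cx, cy; // principal point in pixels
    double scale;  // raw depth units per output unit (1000 turns millimeters into meters)
};

struct Point3 { double x, y, z; };

// Back-project pixel (u, v) with raw depth d into camera coordinates.
// A raw depth of 0 means "no measurement" and yields a point at the origin.
Point3 backProject(int u, int v, std::uint16_t d, const Intrinsics& k)
{
    assert(k.scale > 0 && k.fx > 0 && k.fy > 0);
    const double z = static_cast<double>(d) / k.scale; // normalize the raw depth
    return { (u - k.cx) * z / k.fx, (v - k.cy) * z / k.fy, z };
}
```

With a scale of 1 the coordinates stay in the raw depth unit (millimeters for Azure Kinect DEPTH16 data); with a scale of 1000 they come out in meters.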

Code

slamBase.h

#pragma once // ensures the header is included only once per translation unit

#include

slamBase.cpp

#include "slamBase.h"

#include

pointCloudFusion.cpp

#include "slamBase.h"

int main(int argc, char** argv)

{

CAMERA_INTRINSIC_PARAMETERS cameraParams{ 913.451, 913.122, 953.516, 554.09, 1 }; // camera intrinsics

int frameNum = 3;

PointCloud::Ptr fusedCloud(new PointCloud());

for (int idx = 0; idx < frameNum; idx++)

{

string rgbPath = "D:\\data\\rgb\\" + to_string(idx) + ".png"; // images are named by frame index

string depthPath = "D:\\data\\depth\\" + to_string(idx) + ".png";

FRAME frm;

frm.rgb = cv::imread(rgbPath);

if (frm.rgb.empty()) {

cerr << "Fail to load rgb image!" << endl;

return -1; // an empty image cannot be converted to a point cloud

}

frm.depth = cv::imread(depthPath, -1);

if (frm.depth.empty()) {

cerr << "Fail to load depth image!" << endl;

return -1; // an empty image cannot be converted to a point cloud

}

if (idx == 0)

{

fusedCloud = image2PointCloud(frm.rgb, frm.depth, cameraParams);

}

else

{

fusedCloud = pointCloudFusion(fusedCloud, frm, cameraParams);

}

}

pcl::io::savePCDFile("D:\\data\\fusedCloud.pcd", *fusedCloud);

pcl::io::savePLYFile("D:\\data\\fusedCloud.ply", *fusedCloud);

cout << "END!" << endl;

return 0;

}
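Windows paths in C++ string literals need each backslash escaped (`"D:\\data\\rgb\\"`), or a raw string literal can be used instead. The helper below is a small sketch of building the per-frame file names; `framePath` is a hypothetical name introduced here for illustration.

```cpp
#include <cassert>
#include <string>

// Build "<dir><idx>.png". `dir` is expected to end with a path separator.
std::string framePath(const std::string& dir, int idx)
{
    assert(idx >= 0);
    return dir + std::to_string(idx) + ".png";
}
```

For example, `framePath(R"(D:\data\rgb\)", 0)` and `framePath("D:\\data\\rgb\\", 0)` produce the same string, because a raw string literal keeps backslashes as written.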


Capturing color and depth images and saving them as a point cloud

// C++

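#include <fstream>

#include <k4a/k4a.hpp>

#include "slamBase.h"

// Assumption: the function name, intrinsics, and frame count are illustrative; the intrinsics mirror pointCloudFusion.cpp

void main2()

{

CAMERA_INTRINSIC_PARAMETERS cameraParams{ 913.451, 913.122, 953.516, 554.09, 1 }; // camera intrinsics

int frameNum = 1; // assumed: the paths below are fixed, so one frame is processed

// poses (the camera trajectory) is only needed by the commented-out reader below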

PointCloud::Ptr fusedCloud(new PointCloud());

//string cameraPosePath = path + "cameraTrajectory.txt";

//readCameraTrajectory(cameraPosePath, poses);

for (int idx = 0; idx < frameNum; idx++)

{

//string rgbPath = "D:\\data\\rgb\\" + to_string(idx) + ".png";

//string depthPath = "D:\\data\\depth\\" + to_string(idx) + ".png";

string rgbPath = "D:\\data\\0rgb.png";

string depthPath = "D:\\data\\0d.png";

//string pclPath = "D:\\data\\" + to_string(idx) + ".ply";

string pclPath = "D:\\data\\ply.ply";

FRAME frm;

frm.rgb = cv::imread(rgbPath);

if (frm.rgb.empty()) {

cerr << "Fail to load rgb image!" << endl;

continue; // skip this frame; an empty image cannot be converted

}

frm.depth = cv::imread(depthPath, -1);

if (frm.depth.empty()) {

cerr << "Fail to load depth image!" << endl;

continue; // skip this frame; an empty image cannot be converted

}

if (idx == 0)

{

//fusedCloud = image2PointCloud(frm.rgb, frm.depth, cameraParams);

//pcl::io::savePLYFile("D:\\data\\fusedCloud1.ply", *fusedCloud);

}

else

{

fusedCloud = pointCloudFusion(fusedCloud, frm, cameraParams);

}

fusedCloud = image2PointCloud(frm.rgb, frm.depth, cameraParams);

pcl::io::savePLYFile(pclPath, *fusedCloud);

}

//pcl::io::savePCDFile("D:\\data\\fusedCloud.pcd", *fusedCloud);

pcl::io::savePLYFile("D:\\data\\fusedCloud.ply", *fusedCloud);

cout << "END!" << endl;

}

int main3(int argc, char* argv[]) {

/*

Find and open the Azure Kinect device

*/

// Count the connected devices

const uint32_t device_count = k4a::device::get_installed_count();

if (0 == device_count) {

std::cout << "Error: no K4A devices found. " << std::endl;

return -1;

}

else {

std::cout << "Found " << device_count << " connected devices. " << std::endl;

if (1 != device_count) // more than one device is also reported as an error

{

std::cout << "Error: more than one K4A devices found. " << std::endl;

return -1;

}

else // this sample handles exactly one device

{

std::cout << "Done: found 1 K4A device. " << std::endl;

}

}

// Open the (default) device

k4a::device device = k4a::device::open(K4A_DEVICE_DEFAULT);

std::cout << "Done: open device. " << std::endl;

/*

Retrieve and save Azure Kinect image data

*/

// Configure and start the device

k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

config.camera_fps = K4A_FRAMES_PER_SECOND_30;

//config.camera_fps = K4A_FRAMES_PER_SECOND_15;

config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

config.color_resolution = K4A_COLOR_RESOLUTION_720P;

config.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;

//config.depth_mode = K4A_DEPTH_MODE_WFOV_2X2BINNED;

config.synchronized_images_only = true;// ensures that depth and color images are both available in the capture

device.start_cameras(&config);

std::cout << "Done: start camera." << std::endl;

// Output streams for the txt index files

ofstream rgb_out;

ofstream d_out;

rgb_out.open("./rgb.txt");

d_out.open("./depth.txt");

rgb_out << "# color images" << endl;

rgb_out << "# file: rgbd_dataset" << endl;

rgb_out << "# timestamp" << " " << "filename" << endl;

d_out << "# depth images" << endl;

d_out << "# file: rgbd_dataset" << endl;

d_out << "# timestamp" << " " << "filename" << endl;

rgb_out << flush;

d_out << flush;

// Stabilization

k4a::capture capture;

int iAuto = 0; // counts successful warm-up captures while auto-exposure settles

int iAutoError = 0; // counts failed captures during warm-up

while (true) {

if (device.get_capture(&capture)) {

std::cout << iAuto << ". Capture several frames to give auto-exposure" << std::endl;

// Skip the first n successful captures so the camera can stabilize

if (iAuto != 30) {

iAuto++;

continue;

}

else {

std::cout << "Done: auto-exposure" << std::endl;

break; // leave the loop; the camera has stabilized

}

}

else {

std::cout << iAutoError << ". K4A_WAIT_RESULT_TIMEOUT." << std::endl;

if (iAutoError != 30) {

iAutoError++;

continue;

}

else {

std::cout << "Error: failed to give auto-exposure. " << std::endl;

return -1;

}

}

}

std::cout << "-----------------------------------" << std::endl;

std::cout << "----- Have Started Kinect DK. -----" << std::endl;

std::cout << "-----------------------------------" << std::endl;

// Get a capture from the device

k4a::image rgbImage;

k4a::image depthImage;

//k4a::image irImage;

k4a::image transformed_depthImage;

cv::Mat cv_rgbImage_with_alpha;

cv::Mat cv_rgbImage_no_alpha;

cv::Mat cv_depth;

cv::Mat cv_depth_8U;

int index = 0;

while (index < 1) {

if (device.get_capture(&capture)) {

// rgb

// * Each pixel of BGRA32 data is four bytes. The first three bytes represent Blue, Green,

// * and Red data. The fourth byte is the alpha channel and is unused in the Azure Kinect APIs.

rgbImage = capture.get_color_image();

#if DEBUG_std_cout == 1

std::cout << "[rgb] " << "\n"

<< "format: " << rgbImage.get_format() << "\n"

<< "device_timestamp: " << rgbImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << rgbImage.get_system_timestamp().count() << "\n"

<< "height*width: " << rgbImage.get_height_pixels() << ", " << rgbImage.get_width_pixels()

<< std::endl;

#endif

// depth

// * Each pixel of DEPTH16 data is two bytes of little endian unsigned depth data. The unit of the data is in

// * millimeters from the origin of the camera.

depthImage = capture.get_depth_image();

#if DEBUG_std_cout == 1

std::cout << "[depth] " << "\n"

<< "format: " << depthImage.get_format() << "\n"

<< "device_timestamp: " << depthImage.get_device_timestamp().count() << "\n"

<< "system_timestamp: " << depthImage.get_system_timestamp().count() << "\n"

<< "height*width: " << depthImage.get_height_pixels() << ", " << depthImage.get_width_pixels()

<< std::endl;

#endif

// Build the colored point cloud

k4a::calibration k4aCalibration = device.get_calibration(config.depth_mode, config.color_resolution);

k4a::transformation k4aTransformation = k4a::transformation(k4aCalibration);

//PointCloud::Ptr cloud(new PointCloud);

int color_image_width_pixels = rgbImage.get_width_pixels();

int color_image_height_pixels = rgbImage.get_height_pixels();

transformed_depthImage = NULL;

transformed_depthImage = k4a::image::create(K4A_IMAGE_FORMAT_DEPTH16,

color_image_width_pixels,

color_image_height_pixels,

color_image_width_pixels * (int)sizeof(uint16_t));

k4a::image point_cloud_image = NULL;

point_cloud_image = k4a::image::create(K4A_IMAGE_FORMAT_CUSTOM,

color_image_width_pixels,

color_image_height_pixels,

color_image_width_pixels * 3 * (int)sizeof(int16_t));

if (depthImage.get_width_pixels() == rgbImage.get_width_pixels() && depthImage.get_height_pixels() == rgbImage.get_height_pixels()) {

std::copy(depthImage.get_buffer(), depthImage.get_buffer() + depthImage.get_height_pixels() * depthImage.get_width_pixels() * (int)sizeof(uint16_t), transformed_depthImage.get_buffer());

}

else {

k4aTransformation.depth_image_to_color_camera(depthImage, &transformed_depthImage);

}

k4aTransformation.depth_image_to_point_cloud(transformed_depthImage, K4A_CALIBRATION_TYPE_COLOR, &point_cloud_image);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZRGB>);

cloud->width = color_image_width_pixels;

cloud->height = color_image_height_pixels;

cloud->is_dense = false;

cloud->resize(static_cast<size_t>(color_image_width_pixels) * color_image_height_pixels);

const int16_t* point_cloud_image_data = reinterpret_cast<const int16_t*>(point_cloud_image.get_buffer());

const uint8_t* color_image_data = rgbImage.get_buffer();

for (int i = 0; i < color_image_width_pixels * color_image_height_pixels; i++) {

pcl::PointXYZRGB point; // same point type as the cloud declared above

point.x = point_cloud_image_data[3 * i + 0] / 1000.0f;

point.y = point_cloud_image_data[3 * i + 1] / 1000.0f;

point.z = point_cloud_image_data[3 * i + 2] / 1000.0f;

point.b = color_image_data[4 * i + 0];

point.g = color_image_data[4 * i + 1];

point.r = color_image_data[4 * i + 2];

uint8_t alpha = color_image_data[4 * i + 3];

if (point.x == 0 && point.y == 0 && point.z == 0 && alpha == 0)

continue;

cloud->points[i] = point;

}

pcl::io::savePLYFile("D:\\data\\ply.ply", *cloud); // save the point cloud as a PLY file

}

else {

std::cout << "false: K4A_WAIT_RESULT_TIMEOUT." << std::endl;

}

index++;

}

cv::destroyAllWindows();

rgb_out << flush;

d_out << flush;

rgb_out.close();

d_out.close();

// Release resources and close the device

rgbImage.reset();

depthImage.reset();

capture.reset();

device.close();

return 1;

}
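The per-pixel conversion in the loop above can be isolated from the SDK and PCL types. The sketch below takes the same two buffer layouts (three int16 values in millimeters per pixel for the point cloud image, four bytes in BGRA order per pixel for the color image) and returns only the pixels with a valid depth. `ColorPoint` and `toColorPoints` are hypothetical names, and dropping pixels whose z is not positive is a simplification chosen for this sketch, not the exact test used in the original loop.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct ColorPoint {
    float x, y, z;        // position in meters
    std::uint8_t r, g, b; // color
};

// xyz:  3 int16 values per pixel, in millimeters.
// bgra: 4 bytes per pixel, ordered blue, green, red, alpha.
std::vector<ColorPoint> toColorPoints(const std::int16_t* xyz,
                                      const std::uint8_t* bgra,
                                      std::size_t pixelCount)
{
    assert(xyz != nullptr && bgra != nullptr);
    std::vector<ColorPoint> points;
    points.reserve(pixelCount);
    for (std::size_t i = 0; i < pixelCount; ++i) {
        const std::int16_t zmm = xyz[3 * i + 2];
        if (zmm <= 0) {
            continue; // no valid depth at this pixel
        }
        ColorPoint p;
        p.x = xyz[3 * i + 0] / 1000.0f; // millimeters to meters
        p.y = xyz[3 * i + 1] / 1000.0f;
        p.z = zmm / 1000.0f;
        p.b = bgra[4 * i + 0];
        p.g = bgra[4 * i + 1];
        p.r = bgra[4 * i + 2];
        points.push_back(p);
    }
    return points;
}
```

Because invalid pixels are dropped, the result is an unorganized list of points rather than a width-by-height grid; copying it into a PCL cloud would mean setting the cloud's width to the number of points and its height to 1.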
