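1. How the Sequential API Works

The previous article covered RAW/Callback API programming and its main drawback: the user application and the protocol stack core run in the same thread, so each constrains the other. This lowers the stack's efficiency at receiving and processing new packets and can even lead to dropped packets. This article describes programming with an operating system emulation layer, where the stack core and the user program run in two independent threads and no longer constrain each other.

On Linux, network programming uses the BSD Socket API, which follows a simple set of steps and rules and speeds up application development. However, the BSD Socket functions are highly abstract, which makes them a poor fit for a small embedded TCP/IP stack. More importantly, BSD Socket programming copies data between the application thread and the stack core thread, and the time and memory spent on copying are scarce resources in an embedded system.

To address this, LwIP provides the Sequential API. Its starting point is that the upper layer already knows part of the stack core's internal structure, and the API uses that knowledge to avoid copying data. The Sequential API closely resembles BSD Socket but works at a lower level: it operates directly on a network connection, whereas BSD Socket treats a connection like an ordinary file, in line with the Linux idea that everything is a file. The user thread can therefore operate directly on packet data held by the core thread.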

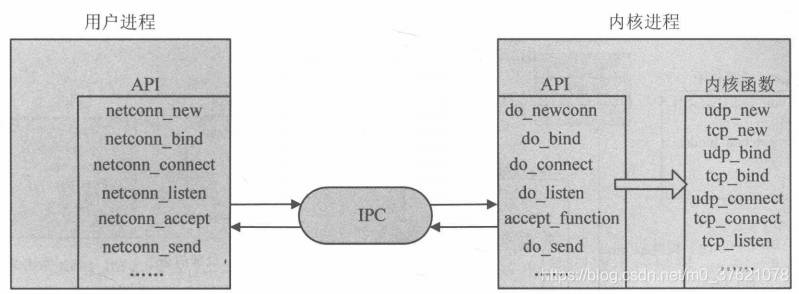

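The Sequential API implementation has two parts. One part is the set of interface functions offered to the user, which execute in the user thread. The other part resides in the stack core thread. The two parts communicate and synchronize through inter-process communication (IPC) mechanisms and together serve the application. Three IPC mechanisms are used:

- Message mailboxes, for communication between the two parts of the API;

- Semaphores, for synchronization between the two parts of the API;

- Shared memory, for holding the message structures exchanged between the user thread and the core thread.

The core of the design is to let the user thread do as much of the work as possible, such as computing and copying data, while the stack thread handles only the essential communication work. This matters because several applications may all rely on the communication service of the stack thread, so keeping the core thread efficient and responsive is key to overall system performance. The relationship between the two parts of the API is shown in the figure below: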

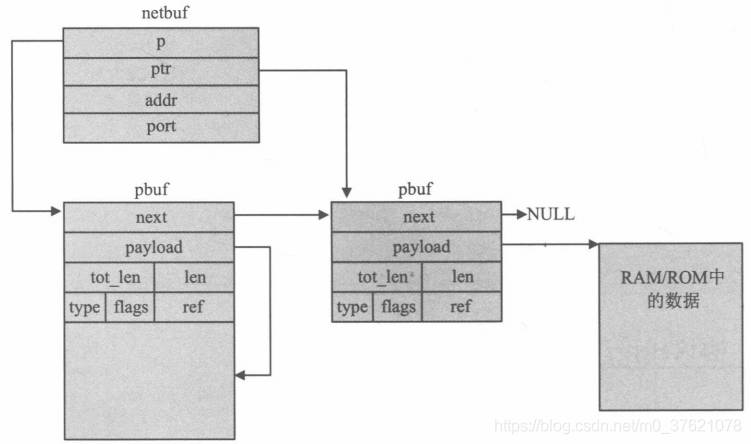

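The Sequential API also gives the user packet management functions for allocating and freeing packet memory, copying data, and so on. Applications describe and assemble packets with the netbuf structure, which is only a thin wrapper around the kernel's pbuf. By sharing one netbuf, the two parts of the API can process a packet jointly, which avoids copying the data. In addition, because the RAW API has a separate set of functions for each of RAW, UDP, and TCP and is awkward to use, the stack core wraps the RAW API in a higher-level abstraction that unifies RAW, UDP, and TCP under one interface (the do_xxx functions in the figure above; this article calls them the kernel thread API). The right callee is chosen automatically from a type field; the mechanism is explained later.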

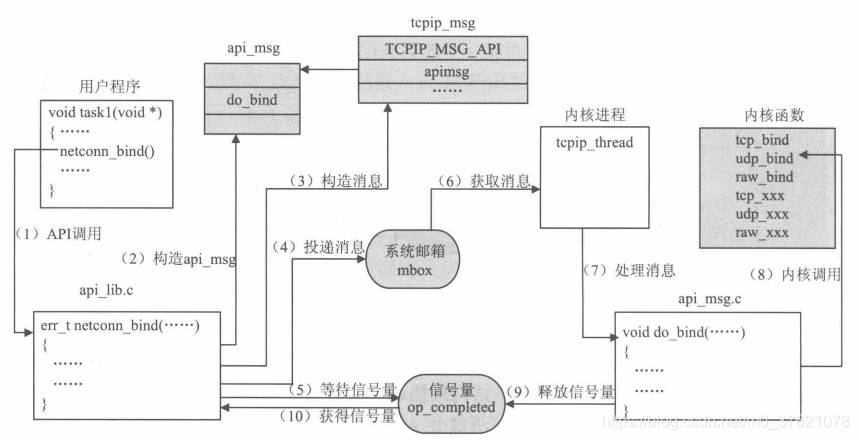

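In the figure above, when the user thread API and the kernel thread API exchange information, it is usually the user thread API function that sends a message telling the kernel thread which kernel API function to execute (by passing a function pointer and an argument pointer). The specific content of every kind of kernel message can be carried directly in the kernel message structure tcpip_msg, and the two parts of the API cooperate to complete one full API operation. For example, when a user program calls netconn_bind to bind a connection, the function sends a message of type TCPIP_MSG_API to the kernel thread, telling it to execute do_bind. After sending the message, the function blocks on a semaphore and waits for the kernel to process it. The kernel handles the message by calling do_bind, and do_bind calls the kernel function udp_bind, tcp_bind, or raw_bind according to the connection type. When do_bind finishes, it releases the semaphore, which lets the blocked netconn_bind continue. The whole process is illustrated in the accompanying figure.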
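
The request/acknowledge handshake of netconn_bind and do_bind can be sketched in a few lines of C. This is a single-threaded toy model, not lwIP code: every `toy_` name is hypothetical, the mailbox is a single pointer slot, and the semaphore is replaced by a flag that the caller polls while driving the stack loop itself. In the real stack the caller would block and a separate tcpip_thread would run the dispatch.

```c
#include <stddef.h>

/* Toy model only: all toy_* names are hypothetical, not lwIP identifiers. */
enum toy_conn_type { TOY_RAW, TOY_UDP, TOY_TCP };

struct toy_conn {
    enum toy_conn_type type;
    int op_completed;   /* stands in for the semaphore: 1 = signalled */
    int bound_port;     /* result of the "bind" */
};

struct toy_api_msg {
    void (*function)(struct toy_api_msg *msg);  /* what the stack should run */
    struct toy_conn *conn;
    int port;
    int err;            /* 0 = ok, written by the stack side */
};

/* One-slot mailbox shared by both halves of the API. */
static struct toy_api_msg *toy_mbox = NULL;

/* Stack-side half: picks the protocol-specific action from the type field. */
static void toy_do_bind(struct toy_api_msg *msg)
{
    switch (msg->conn->type) {
    case TOY_UDP:
    case TOY_TCP:
        msg->conn->bound_port = msg->port;
        msg->err = 0;
        break;
    case TOY_RAW:       /* raw connections carry no port */
        msg->conn->bound_port = 0;
        msg->err = 0;
        break;
    default:
        msg->err = -1;
        break;
    }
    msg->conn->op_completed = 1;   /* "release the semaphore" */
}

/* Body of the stack main loop: fetch one message and dispatch it.
   Returns 1 if a message was handled, 0 if the mailbox was empty. */
static int toy_stack_step(void)
{
    struct toy_api_msg *msg = toy_mbox;
    if (msg == NULL) {
        return 0;
    }
    toy_mbox = NULL;
    msg->function(msg);
    return 1;
}

/* User-side half: build the message on the stack, post it, wait for the ack. */
static int toy_netconn_bind(struct toy_conn *conn, int port)
{
    struct toy_api_msg msg;
    msg.function = toy_do_bind;
    msg.conn = conn;
    msg.port = port;
    msg.err = -1;
    conn->op_completed = 0;
    toy_mbox = &msg;                /* post to the mailbox */
    while (!conn->op_completed) {   /* a real thread would block here */
        toy_stack_step();
    }
    return msg.err;
}
```

Because the caller does not return until the acknowledgement arrives, the message can live on the caller's stack, which is one reason no copy of the request is needed.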

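2. Protocol Stack Message Mechanism

2.1 Kernel Thread Message Structure

The kernel thread tcpip_thread was introduced briefly in the earlier article on the stack's kernel timer events. The thread blocks on a mailbox to receive messages. System messages are described by the structure tcpip_msg; after receiving one, the kernel thread identifies the message type and calls the corresponding kernel thread API function to handle it. The tcpip_msg data structure and the implementation of tcpip_thread are as follows: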

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\tcpip.h

enum tcpip_msg_type {

TCPIP_MSG_API,

TCPIP_MSG_INPKT,

TCPIP_MSG_NETIFAPI,

TCPIP_MSG_TIMEOUT,

TCPIP_MSG_UNTIMEOUT,

TCPIP_MSG_CALLBACK,

TCPIP_MSG_CALLBACK_STATIC

};

struct tcpip_msg {

enum tcpip_msg_type type;

sys_sem_t *sem;

union {

struct api_msg *apimsg;

struct netifapi_msg *netifapimsg;

struct {

struct pbuf *p;

struct netif *netif;

} inp;

struct {

tcpip_callback_fn function;

void *ctx;

} cb;

struct {

u32_t msecs;

sys_timeout_handler h;

void *arg;

} tmo;

} msg;

};

// rt-thread\components\net\lwip-1.4.1\src\api\tcpip.c

/* global variables */

static tcpip_init_done_fn tcpip_init_done;

static void *tcpip_init_done_arg;

static sys_mbox_t mbox;

/** The global semaphore to lock the stack. */

sys_mutex_t lock_tcpip_core;

/**

* The main lwIP thread. This thread has exclusive access to lwIP core functions

* (unless access to them is not locked). Other threads communicate with this

* thread using message boxes.

* It also starts all the timers to make sure they are running in the right

* thread context.

* @param arg unused argument

*/

static void tcpip_thread(void *arg)

{

struct tcpip_msg *msg;

if (tcpip_init_done != NULL) {

tcpip_init_done(tcpip_init_done_arg);

}

LOCK_TCPIP_CORE();

while (1) { /* MAIN Loop */

UNLOCK_TCPIP_CORE();

LWIP_TCPIP_THREAD_ALIVE();

/* wait for a message, timeouts are processed while waiting */

sys_timeouts_mbox_fetch(&mbox, (void **)&msg);

LOCK_TCPIP_CORE();

switch (msg->type) {

case TCPIP_MSG_API:

msg->msg.apimsg->function(&(msg->msg.apimsg->msg));

break;

case TCPIP_MSG_INPKT:

if (msg->msg.inp.netif->flags & (NETIF_FLAG_ETHARP | NETIF_FLAG_ETHERNET)) {

ethernet_input(msg->msg.inp.p, msg->msg.inp.netif);

} else {

ip_input(msg->msg.inp.p, msg->msg.inp.netif);

}

memp_free(MEMP_TCPIP_MSG_INPKT, msg);

break;

case TCPIP_MSG_NETIFAPI:

msg->msg.netifapimsg->function(&(msg->msg.netifapimsg->msg));

break;

case TCPIP_MSG_TIMEOUT:

sys_timeout(msg->msg.tmo.msecs, msg->msg.tmo.h, msg->msg.tmo.arg);

memp_free(MEMP_TCPIP_MSG_API, msg);

break;

case TCPIP_MSG_UNTIMEOUT:

sys_untimeout(msg->msg.tmo.h, msg->msg.tmo.arg);

memp_free(MEMP_TCPIP_MSG_API, msg);

break;

case TCPIP_MSG_CALLBACK:

msg->msg.cb.function(msg->msg.cb.ctx);

memp_free(MEMP_TCPIP_MSG_API, msg);

break;

case TCPIP_MSG_CALLBACK_STATIC:

msg->msg.cb.function(msg->msg.cb.ctx);

break;

default:

LWIP_ASSERT("tcpip_thread: invalid message", 0);

break;

}

}

}

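The enumeration tcpip_msg_type defines the message types that can occur in the system. The msg field of the message structure is a union that holds the type-specific content, and each message type maps to one member of the union. Registering and cancelling a timed event share the tmo structure, and callback and static callback messages share the cb structure. Messages for API calls and NETIFAPI calls carry too much content to store directly in tcpip_msg, so the system describes them with the dedicated structures api_msg and netifapi_msg, and tcpip_msg stores only a pointer to each.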
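
The "type tag plus union" layout can be illustrated with a reduced model. The sketch below is not the lwIP definition: the `toy_` names are hypothetical and only three message types are modelled, but it shows how two message types can share one union member (tmo) while the tag decides how the payload is interpreted.

```c
/* Reduced model of a tagged-union message; all toy_* names are hypothetical. */
enum toy_msg_type { TOY_MSG_TIMEOUT, TOY_MSG_UNTIMEOUT, TOY_MSG_CALLBACK };

typedef void (*toy_fn)(void *ctx);

struct toy_msg {
    enum toy_msg_type type;          /* tag: selects the valid union member */
    union {
        struct { toy_fn function; void *ctx; } cb;
        struct { unsigned msecs; toy_fn h; void *arg; } tmo;
    } msg;
};

static unsigned toy_armed_msecs = 0; /* 0 means "no timer armed" */

/* Dispatch on the tag, reading only the member that belongs to it. */
static void toy_dispatch(struct toy_msg *m)
{
    switch (m->type) {
    case TOY_MSG_TIMEOUT:            /* uses tmo.msecs */
        toy_armed_msecs = m->msg.tmo.msecs;
        break;
    case TOY_MSG_UNTIMEOUT:          /* shares tmo, ignores msecs */
        toy_armed_msecs = 0;
        break;
    case TOY_MSG_CALLBACK:           /* uses cb */
        m->msg.cb.function(m->msg.cb.ctx);
        break;
    }
}

/* Sample callback for demonstration: increments the int that ctx points to. */
static void toy_increment(void *ctx)
{
    ++*(int *)ctx;
}
```

Since the union is only as large as its largest member, every message has the same small fixed size, which suits allocation from a fixed-size memory pool.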

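2.2 User Thread Message Structure

The kernel thread tcpip_thread receives the tcpip_msg messages posted to the mailbox, but which function posts them? Taking the netconn_bind example above, its code shows that the function that posts a TCPIP_MSG_API message to the mailbox is tcpip_apimsg. The code is as follows: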

// rt-thread\components\net\lwip-1.4.1\src\api\api_lib.c

/**

* Bind a netconn to a specific local IP address and port.

* Binding one netconn twice might not always be checked correctly!

* @param conn the netconn to bind

* @param addr the local IP address to bind the netconn to (use IP_ADDR_ANY

* to bind to all addresses)

* @param port the local port to bind the netconn to (not used for RAW)

* @return ERR_OK if bound, any other err_t on failure

*/

err_t netconn_bind(struct netconn *conn, ip_addr_t *addr, u16_t port)

{

struct api_msg msg;

err_t err;

msg.function = do_bind;

msg.msg.conn = conn;

msg.msg.msg.bc.ipaddr = addr;

msg.msg.msg.bc.port = port;

err = TCPIP_APIMSG(&msg);

return err;

}

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\tcpip.h

#define TCPIP_APIMSG(m) tcpip_apimsg(m)

// rt-thread\components\net\lwip-1.4.1\src\api\tcpip.c

/**

* Call the lower part of a netconn_* function

* This function is then running in the thread context

* of tcpip_thread and has exclusive access to lwIP core code.

* @param apimsg a struct containing the function to call and its parameters

* @return ERR_OK if the function was called, another err_t if not

*/

err_t tcpip_apimsg(struct api_msg *apimsg)

{

struct tcpip_msg msg;

if (sys_mbox_valid(&mbox)) {

msg.type = TCPIP_MSG_API;

msg.msg.apimsg = apimsg;

sys_mbox_post(&mbox, &msg);

sys_arch_sem_wait(&apimsg->msg.conn->op_completed, 0);

return apimsg->msg.err;

}

return ERR_VAL;

}

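tcpip_apimsg wraps the api_msg message in a tcpip_msg message, posts it to the mailbox, and then blocks on the semaphore. Once the kernel thread tcpip_thread has processed the message and released the semaphore, tcpip_apimsg acquires it and continues.

The API called by the user thread (netconn_bind, for example) actually operates on an api_msg message, and when tcpip_thread handles a TCPIP_MSG_API message it calls the function pointer stored in that api_msg. The api_msg data structure is described as follows: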

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\api_msg.h

/** This struct includes everything that is necessary to execute a function

for a netconn in another thread context (mainly used to process netconns

in the tcpip_thread context to be thread safe).

*/

struct api_msg_msg {

/** The netconn which to process - always needed: it includes the semaphore

which is used to block the application thread until the function finished. */

struct netconn *conn;

/** The return value of the function executed in tcpip_thread. */

err_t err;

/** Depending on the executed function, one of these union members is used */

union {

/** used for do_send */

struct netbuf *b;

/** used for do_newconn */

struct {

u8_t proto;

} n;

/** used for do_bind and do_connect */

struct {

ip_addr_t *ipaddr;

u16_t port;

} bc;

/** used for do_getaddr */

struct {

ip_addr_t *ipaddr;

u16_t *port;

u8_t local;

} ad;

/** used for do_write */

struct {

const void *dataptr;

size_t len;

u8_t apiflags;

} w;

/** used for do_recv */

struct {

u32_t len;

} r;

/** used for do_close (/shutdown) */

struct {

u8_t shut;

} sd;

#if LWIP_IGMP

/** used for do_join_leave_group */

struct {

ip_addr_t *multiaddr;

ip_addr_t *netif_addr;

enum netconn_igmp join_or_leave;

} jl;

#endif /* LWIP_IGMP */

} msg;

};

/** This struct contains a function to execute in another thread context and

a struct api_msg_msg that serves as an argument for this function.

This is passed to tcpip_apimsg to execute functions in tcpip_thread context.

*/

struct api_msg {

/** function to execute in tcpip_thread context */

void (* function)(struct api_msg_msg *msg);

/** arguments for this function */

struct api_msg_msg msg;

};

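api_msg has two main parts: the API function do_xxx that the kernel thread should execute, and the argument api_msg_msg that the function needs. api_msg_msg has three fields. The first is conn, which describes the connection and holds its semaphore, mailboxes, and related information; do_xxx uses these to communicate and synchronize with the application thread. The second is err, which records the result of the kernel's do_xxx call. The third is the union msg, whose members hold the detailed arguments for each function.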

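2.3 Kernel Thread API

After the kernel thread tcpip_thread fetches a tcpip_msg from the mailbox and sees that the message posted by netconn_bind has type TCPIP_MSG_API, it executes msg->msg.apimsg->function(&(msg->msg.apimsg->msg)), that is, the function pointer and argument pointer defined in api_msg. Here the function pointer is do_bind, and the argument carries the local IP address and local port in addition to the connection structure conn and the result err, so what the kernel thread actually executes is do_bind(msg). How does do_bind end up calling the RAW API? In other words, how does the do_xxx kernel API unify the IP RAW, UDP RAW, and TCP RAW APIs? The implementation of do_bind is shown below: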

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\api.h

/* Helpers to process several netconn_types by the same code */

#define NETCONNTYPE_GROUP(t) (t&0xF0)

/** Protocol family and type of the netconn */

enum netconn_type {

NETCONN_INVALID = 0,

/* NETCONN_TCP Group */

NETCONN_TCP = 0x10,

/* NETCONN_UDP Group */

NETCONN_UDP = 0x20,

NETCONN_UDPLITE = 0x21,

NETCONN_UDPNOCHKSUM= 0x22,

/* NETCONN_RAW Group */

NETCONN_RAW = 0x40

};

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Bind a pcb contained in a netconn

* Called from netconn_bind.

* @param msg the api_msg_msg pointing to the connection and containing

* the IP address and port to bind to

*/

void do_bind(struct api_msg_msg *msg)

{

if (ERR_IS_FATAL(msg->conn->last_err)) {

msg->err = msg->conn->last_err;

} else {

msg->err = ERR_VAL;

if (msg->conn->pcb.tcp != NULL) {

switch (NETCONNTYPE_GROUP(msg->conn->type)) {

case NETCONN_RAW:

msg->err = raw_bind(msg->conn->pcb.raw, msg->msg.bc.ipaddr);

break;

case NETCONN_UDP:

msg->err = udp_bind(msg->conn->pcb.udp, msg->msg.bc.ipaddr, msg->msg.bc.port);

break;

case NETCONN_TCP:

msg->err = tcp_bind(msg->conn->pcb.tcp, msg->msg.bc.ipaddr, msg->msg.bc.port);

break;

default:

break;

}

}

}

TCPIP_APIMSG_ACK(msg);

}

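The argument msg contains the field conn, which represents the connection and includes a type field (type, of enum netconn_type). do_bind calls the matching RAW API according to the connection type, which is how several RAW APIs are abstracted into one kernel thread API of the form do_xxx. The remaining kernel thread API functions are listed below: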

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\api_msg.h

void do_newconn ( struct api_msg_msg *msg); // * Create a new pcb of a specific type inside a netconn, Called from netconn_new_with_proto_and_callback.

void do_delconn ( struct api_msg_msg *msg); // * Delete the pcb inside a netconn, Called from netconn_delete.

void do_bind ( struct api_msg_msg *msg); // * Bind a pcb contained in a netconn, Called from netconn_bind.

void do_connect ( struct api_msg_msg *msg); // * Connect a pcb contained inside a netconn, Called from netconn_connect.

void do_disconnect ( struct api_msg_msg *msg); // * Disconnect a pcb contained inside a netconn, Only used for UDP netconns, Called from netconn_disconnect.

void do_listen ( struct api_msg_msg *msg); // * Set a TCP pcb contained in a netconn into listen mode, Called from netconn_listen.

void do_send ( struct api_msg_msg *msg); // * Send some data on a RAW or UDP pcb contained in a netconn, Called from netconn_send.

void do_recv ( struct api_msg_msg *msg); // * Indicate data has been received from a TCP pcb contained in a netconn, Called from netconn_recv.

void do_write ( struct api_msg_msg *msg); // * Send some data on a TCP pcb contained in a netconn, Called from netconn_write.

void do_getaddr ( struct api_msg_msg *msg); // * Return a connection's local or remote address, Called from netconn_getaddr.

void do_close ( struct api_msg_msg *msg); // * Close a TCP pcb contained in a netconn, Called from netconn_close.

void do_shutdown ( struct api_msg_msg *msg);

#if LWIP_IGMP

void do_join_leave_group( struct api_msg_msg *msg); // * Join multicast groups for UDP netconns, Called from netconn_join_leave_group.

#endif /* LWIP_IGMP */

#if LWIP_DNS

void do_gethostbyname(void *arg); // * Execute a DNS query, Called from netconn_gethostbyname.

#endif /* LWIP_DNS */
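
The type-group dispatch used by do_bind and pcb_new relies on the upper four bits of the connection type. The following sketch reuses the enum values quoted earlier in this article; the helper `toy_group_name` is hypothetical, and the macro argument is parenthesized here as a defensive habit, which the quoted header does not do.

```c
/* Values copied from the netconn_type listing quoted in this article. */
#define NETCONNTYPE_GROUP(t) ((t) & 0xF0)

enum netconn_type {
    NETCONN_INVALID     = 0,
    NETCONN_TCP         = 0x10,
    NETCONN_UDP         = 0x20,
    NETCONN_UDPLITE     = 0x21,
    NETCONN_UDPNOCHKSUM = 0x22,
    NETCONN_RAW         = 0x40
};

/* Hypothetical helper: maps any connection type to the name of its group.
   The low nibble (UDP variant) is masked off before the switch, so all
   UDP flavours take the same branch. */
static const char *toy_group_name(enum netconn_type t)
{
    switch (NETCONNTYPE_GROUP(t)) {
    case NETCONN_TCP: return "tcp";
    case NETCONN_UDP: return "udp";
    case NETCONN_RAW: return "raw";
    default:          return "invalid";
    }
}
```

For example, NETCONN_UDPLITE is 0x21, and 0x21 & 0xF0 is 0x20, which equals NETCONN_UDP, so a UDP-Lite connection is bound through the same udp_bind branch as plain UDP.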

2.4 Kernel Callback Interface

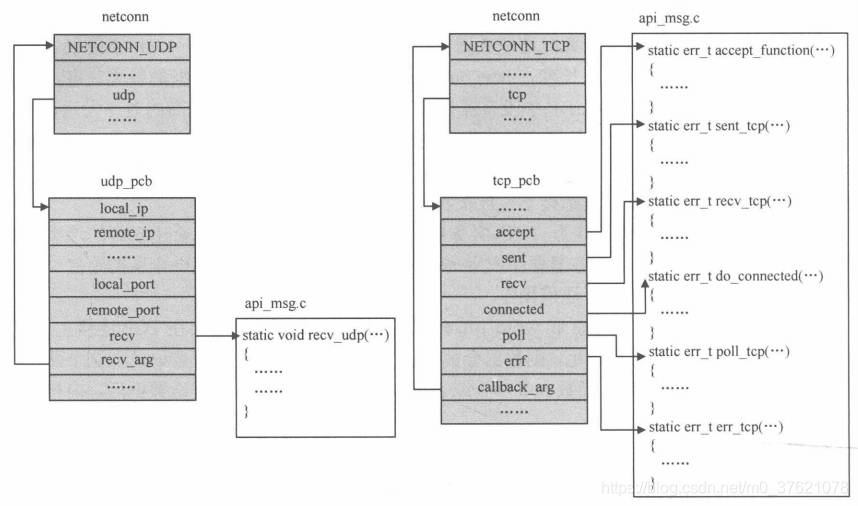

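With the Raw/Callback API, the user programs by registering custom callback functions with the kernel; callbacks are the only way to interact with the kernel. The Sequential API is built on the Raw/Callback API, so it too can interact with the kernel only through callbacks. However, a major goal of the Sequential API is to simplify network programming (writing and registering callbacks with the Raw/Callback API imposes no clear steps or rules and requires a thorough understanding of the stack core). The stack therefore implements several default callback functions. When a kernel control block is created for a new connection, these callbacks are registered in the relevant fields of the control block by default, which guarantees the interaction between the kernel and the Sequential API.

These default callbacks are shown in the accompanying figure: 1 function for RAW (omitted from the figure), 1 for UDP, and 6 for TCP.

The code that performs this default registration when the control block is created is as follows: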

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Create a new pcb of a specific type inside a netconn.

* Called from netconn_new_with_proto_and_callback.

* @param msg the api_msg_msg describing the connection type

*/

void do_newconn(struct api_msg_msg *msg)

{

msg->err = ERR_OK;

if(msg->conn->pcb.tcp == NULL) {

pcb_new(msg);

}

TCPIP_APIMSG_ACK(msg);

}

/**

* Create a new pcb of a specific type.

* Called from do_newconn().

* @param msg the api_msg_msg describing the connection type

* @return msg->conn->err, but the return value is currently ignored

*/

static void pcb_new(struct api_msg_msg *msg)

{

LWIP_ASSERT("pcb_new: pcb already allocated", msg->conn->pcb.tcp == NULL);

/* Allocate a PCB for this connection */

switch(NETCONNTYPE_GROUP(msg->conn->type)) {

case NETCONN_RAW:

msg->conn->pcb.raw = raw_new(msg->msg.n.proto);

if(msg->conn->pcb.raw == NULL) {

msg->err = ERR_MEM;

break;

}

raw_recv(msg->conn->pcb.raw, recv_raw, msg->conn);

break;

case NETCONN_UDP:

msg->conn->pcb.udp = udp_new();

if(msg->conn->pcb.udp == NULL) {

msg->err = ERR_MEM;

break;

}

if (msg->conn->type==NETCONN_UDPNOCHKSUM) {

udp_setflags(msg->conn->pcb.udp, UDP_FLAGS_NOCHKSUM);

}

udp_recv(msg->conn->pcb.udp, recv_udp, msg->conn);

break;

case NETCONN_TCP:

msg->conn->pcb.tcp = tcp_new();

if(msg->conn->pcb.tcp == NULL) {

msg->err = ERR_MEM;

break;

}

setup_tcp(msg->conn);

break;

default:

/* Unsupported netconn type, e.g. protocol disabled */

msg->err = ERR_VAL;

break;

}

}

/**

* Setup a tcp_pcb with the correct callback function pointers

* and their arguments.

* @param conn the TCP netconn to setup

*/

static void setup_tcp(struct netconn *conn)

{

struct tcp_pcb *pcb;

pcb = conn->pcb.tcp;

tcp_arg(pcb, conn);

tcp_recv(pcb, recv_tcp);

tcp_sent(pcb, sent_tcp);

tcp_poll(pcb, poll_tcp, 4);

tcp_err(pcb, err_tcp);

}

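The following sections look at each of the 8 default callback functions that the stack implements for us:

- RAW receive callback: recv_raw

Prototype: static u8_t recv_raw(void *arg, struct raw_pcb *pcb, struct pbuf *p, ip_addr_t *addr)

When the RAW kernel receives data for a control block, the callback registered by default on the RAW control block is recv_raw. Its main job is to assemble the callback argument (the packet pbuf) into the upper-layer packet format netbuf and post that netbuf to the recvmbox mailbox of the connection netconn. The application fetches a netbuf from this mailbox by calling the API function netconn_recv and can then process the data in it. The RAW callback is implemented as follows: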

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Receive callback function for RAW netconns.

* Doesn't 'eat' the packet, only references it and sends it to

* conn->recvmbox

* @see raw.h (struct raw_pcb.recv) for parameters and return value

*/

static u8_t recv_raw(void *arg, struct raw_pcb *pcb, struct pbuf *p, ip_addr_t *addr)

{

struct pbuf *q;

struct netbuf *buf;

struct netconn *conn = (struct netconn *)arg;

if ((conn != NULL) && sys_mbox_valid(&conn->recvmbox)) {

/* copy the whole packet into new pbufs */

q = pbuf_alloc(PBUF_RAW, p->tot_len, PBUF_RAM);

if(q != NULL) {

if (pbuf_copy(q, p) != ERR_OK) {

pbuf_free(q);

q = NULL;

}

}

if (q != NULL) {

u16_t len;

buf = (struct netbuf *)memp_malloc(MEMP_NETBUF);

if (buf == NULL) {

pbuf_free(q);

return 0;

}

buf->p = q;

buf->ptr = q;

ip_addr_copy(buf->addr, *ip_current_src_addr());

buf->port = pcb->protocol;

len = q->tot_len;

if (sys_mbox_trypost(&conn->recvmbox, buf) != ERR_OK) {

netbuf_delete(buf);

return 0;

} else {

/* Register event with callback */

API_EVENT(conn, NETCONN_EVT_RCVPLUS, len);

}

}

}

return 0; /* do not eat the packet */

}

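- UDP receive callback: recv_udp

Prototype: static void recv_udp(void *arg, struct udp_pcb *pcb, struct pbuf *p, ip_addr_t *addr, u16_t port)

When the UDP kernel receives data for a control block, the callback registered by default on the UDP control block is recv_udp. Like recv_raw, it wraps the packet pbuf in a netbuf and posts it to the recvmbox mailbox of the connection structure netconn. The implementation is as follows: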

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Receive callback function for UDP netconns.

* Posts the packet to conn->recvmbox or deletes it on memory error.

* @see udp.h (struct udp_pcb.recv) for parameters

*/

static void recv_udp(void *arg, struct udp_pcb *pcb, struct pbuf *p, ip_addr_t *addr, u16_t port)

{

struct netbuf *buf;

struct netconn *conn = (struct netconn *)arg;

u16_t len;

if ((conn == NULL) || !sys_mbox_valid(&conn->recvmbox)) {

pbuf_free(p);

return;

}

buf = (struct netbuf *)memp_malloc(MEMP_NETBUF);

if (buf == NULL) {

pbuf_free(p);

return;

} else {

buf->p = p;

buf->ptr = p;

ip_addr_set(&buf->addr, addr);

buf->port = port;

#if LWIP_NETBUF_RECVINFO

{

const struct ip_hdr* iphdr = ip_current_header();

/* get the UDP header - always in the first pbuf, ensured by udp_input */

const struct udp_hdr* udphdr = (void*)(((char*)iphdr) + IPH_LEN(iphdr));

ip_addr_set(&buf->toaddr, ip_current_dest_addr());

buf->toport_chksum = udphdr->dest;

}

#endif /* LWIP_NETBUF_RECVINFO */

}

len = p->tot_len;

if (sys_mbox_trypost(&conn->recvmbox, buf) != ERR_OK) {

netbuf_delete(buf);

return;

} else {

/* Register event with callback */

API_EVENT(conn, NETCONN_EVT_RCVPLUS, len);

}

}
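
The pattern shared by recv_raw and recv_udp (wrap the packet, try to post without blocking, and release the wrapper if the mailbox is full) can be modelled with a small ring buffer. This is a toy sketch under stated assumptions: the `toy_` names, the mailbox capacity of 2, and the use of malloc in place of a memory pool are all illustrative and not taken from lwIP.

```c
#include <stdlib.h>

#define TOY_MBOX_SIZE 2   /* deliberately tiny so overflow is easy to show */

struct toy_netbuf { void *p; unsigned short port; };

struct toy_mbox {
    void *slots[TOY_MBOX_SIZE];
    int head;    /* index of the oldest item */
    int count;   /* number of queued items */
};

/* Non-blocking post: returns 0 on success, -1 if the mailbox is full. */
static int toy_mbox_trypost(struct toy_mbox *mb, void *item)
{
    if (mb->count == TOY_MBOX_SIZE) {
        return -1;
    }
    mb->slots[(mb->head + mb->count) % TOY_MBOX_SIZE] = item;
    mb->count++;
    return 0;
}

/* Non-blocking fetch: returns the oldest item, or NULL if empty. */
static void *toy_mbox_tryfetch(struct toy_mbox *mb)
{
    void *item;
    if (mb->count == 0) {
        return NULL;
    }
    item = mb->slots[mb->head];
    mb->head = (mb->head + 1) % TOY_MBOX_SIZE;
    mb->count--;
    return item;
}

static int toy_dropped = 0;   /* counts packets that could not be delivered */

/* Receive callback model: runs in the stack context, so it must never block. */
static void toy_recv_udp(struct toy_mbox *recvmbox, void *payload,
                         unsigned short port)
{
    struct toy_netbuf *buf = (struct toy_netbuf *)malloc(sizeof *buf);
    if (buf == NULL) {
        toy_dropped++;
        return;
    }
    buf->p = payload;          /* reference the payload, do not copy it */
    buf->port = port;
    if (toy_mbox_trypost(recvmbox, buf) != 0) {
        free(buf);             /* mailbox full: drop instead of waiting */
        toy_dropped++;
    }
}
```

Dropping on a full mailbox keeps the stack thread responsive; for UDP and RAW this is acceptable because neither protocol promises delivery.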

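- TCP accept callback: accept_function

Prototype: static err_t accept_function(void *arg, struct tcp_pcb *newpcb, err_t err)

When a TCP server accepts a new connection request and completes the three-way handshake, the accept function on the TCP control block is called back with the new connection's TCP control block as an argument. accept_function uses that control block to build a new connection structure netconn and posts it to the acceptmbox mailbox of the server connection. The server application obtains the new connection structure from this mailbox by calling netconn_accept and can then operate on the new connection, for example to send and receive data. The implementation is as follows: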

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Accept callback function for TCP netconns.

* Allocates a new netconn and posts that to conn->acceptmbox.

* @see tcp.h (struct tcp_pcb_listen.accept) for parameters and return value

*/

static err_t accept_function(void *arg, struct tcp_pcb *newpcb, err_t err)

{

struct netconn *newconn;

struct netconn *conn = (struct netconn *)arg;

if (!sys_mbox_valid(&conn->acceptmbox)) {

return ERR_VAL;

}

/* We have to set the callback here even though

* the new socket is unknown. conn->socket is marked as -1. */

newconn = netconn_alloc(conn->type, conn->callback);

if (newconn == NULL) {

return ERR_MEM;

}

newconn->pcb.tcp = newpcb;

setup_tcp(newconn);

/* no protection: when creating the pcb, the netconn is not yet known

to the application thread */

newconn->last_err = err;

if (sys_mbox_trypost(&conn->acceptmbox, newconn) != ERR_OK) {

/* When returning != ERR_OK, the pcb is aborted in tcp_process(),

so do nothing here! */

/* remove all references to this netconn from the pcb */

struct tcp_pcb* pcb = newconn->pcb.tcp;

tcp_arg(pcb, NULL);

tcp_recv(pcb, NULL);

tcp_sent(pcb, NULL);

tcp_poll(pcb, NULL, 4);

tcp_err(pcb, NULL);

/* remove reference from to the pcb from this netconn */

newconn->pcb.tcp = NULL;

/* no need to drain since we know the recvmbox is empty. */

sys_mbox_free(&newconn->recvmbox);

sys_mbox_set_invalid(&newconn->recvmbox);

netconn_free(newconn);

return ERR_MEM;

} else {

/* Register event with callback */

API_EVENT(conn, NETCONN_EVT_RCVPLUS, 0);

}

return ERR_OK;

}

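- TCP connection-established callback: do_connected

Prototype: static err_t do_connected(void *arg, struct tcp_pcb *pcb, err_t err)

When acting as a TCP client, after a connection request has been sent to the server and the three-way handshake has completed, the connected function in the TCP control block is called back. do_connected is the default callback used by the API implementation, and its job is to release the semaphore op_completed. From the user's point of view, a call to the upper-layer API function netconn_connect blocks on op_completed while waiting for the connection to be established. Releasing the semaphore signals that the connection is up, so netconn_connect unblocks and continues. The implementation is as follows: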

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* TCP callback function if a connection (opened by tcp_connect/do_connect) has

* been established (or reset by the remote host).

* @see tcp.h (struct tcp_pcb.connected) for parameters and return values

*/

static err_t do_connected(void *arg, struct tcp_pcb *pcb, err_t err)

{

struct netconn *conn = (struct netconn *)arg;

int was_blocking;

if (conn == NULL) {

return ERR_VAL;

}

if (conn->current_msg != NULL) {

conn->current_msg->err = err;

}

if ((conn->type == NETCONN_TCP) && (err == ERR_OK)) {

setup_tcp(conn);

}

was_blocking = !IN_NONBLOCKING_CONNECT(conn);

SET_NONBLOCKING_CONNECT(conn, 0);

conn->current_msg = NULL;

conn->state = NETCONN_NONE;

if (!was_blocking) {

NETCONN_SET_SAFE_ERR(conn, ERR_OK);

}

API_EVENT(conn, NETCONN_EVT_SENDPLUS, 0);

if (was_blocking) {

sys_sem_signal(&conn->op_completed);

}

return ERR_OK;

}

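- TCP sent callback: sent_tcp

Prototype: static err_t sent_tcp(void *arg, struct tcp_pcb *pcb, u16_t len)

When locally sent data awaiting acknowledgement is acknowledged by an ACK in a TCP segment from the peer, the sent function in the TCP control block is called back. sent_tcp is the default callback. It calls do_writemore to check whether the connection netconn still has data to send and, if so, sends it. After all data has been sent, the semaphore op_completed in the netconn structure is released. The send was triggered by the upper layer calling netconn_write, which waits on op_completed until the data has been sent; releasing the semaphore unblocks it and lets the user program continue. The implementation is as follows: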

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Sent callback function for TCP netconns.

* Signals the conn->sem and calls API_EVENT.

* netconn_write waits for conn->sem if send buffer is low.

* @see tcp.h (struct tcp_pcb.sent) for parameters and return value

*/

static err_t sent_tcp(void *arg, struct tcp_pcb *pcb, u16_t len)

{

struct netconn *conn = (struct netconn *)arg;

if (conn->state == NETCONN_WRITE) {

do_writemore(conn);

} else if (conn->state == NETCONN_CLOSE) {

do_close_internal(conn);

}

if (conn) {

/* If the queued byte- or pbuf-count drops below the configured low-water limit,

let select mark this pcb as writable again. */

if ((conn->pcb.tcp != NULL) && (tcp_sndbuf(conn->pcb.tcp) > TCP_SNDLOWAT) &&

(tcp_sndqueuelen(conn->pcb.tcp) < TCP_SNDQUEUELOWAT)) {

conn->flags &= ~NETCONN_FLAG_CHECK_WRITESPACE;

API_EVENT(conn, NETCONN_EVT_SENDPLUS, len);

}

}

return ERR_OK;

}
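
How a blocking write makes progress across several sent callbacks can be sketched with a byte counter. This is a toy model, not the do_writemore implementation: the `toy_` names are hypothetical, the send buffer is reduced to a free-space counter, and "complete" here means that every byte has been handed to the send buffer. The exact completion condition in the real stack should be checked against the lwIP source.

```c
#include <stddef.h>

/* Toy model of one pending write; all names are hypothetical. */
struct toy_write {
    size_t len;         /* total bytes the application asked to send */
    size_t offset;      /* bytes handed to the send buffer so far */
    size_t sndbuf;      /* free space currently in the send buffer */
    int writing;        /* 1 while the connection is in the "write" state */
    int op_completed;   /* stands in for the semaphore */
};

/* Queue as much of the remaining data as the send buffer allows. */
static void toy_writemore(struct toy_write *w)
{
    size_t remaining = w->len - w->offset;
    size_t chunk = remaining < w->sndbuf ? remaining : w->sndbuf;
    w->offset += chunk;
    w->sndbuf -= chunk;
    if (w->offset == w->len) {   /* everything queued: wake the caller */
        w->writing = 0;
        w->op_completed = 1;
    }
}

/* Called once by the write API to start the operation. */
static void toy_start_write(struct toy_write *w, size_t len, size_t sndbuf)
{
    w->len = len;
    w->offset = 0;
    w->sndbuf = sndbuf;
    w->writing = 1;
    w->op_completed = 0;
    toy_writemore(w);
}

/* Sent callback model: acknowledged bytes free buffer space, then the
   pending write (if any) continues from where it stopped. */
static void toy_sent(struct toy_write *w, size_t acked)
{
    w->sndbuf += acked;
    if (w->writing) {
        toy_writemore(w);
    }
}
```

With a 1000-byte send buffer, a 2500-byte write in this model needs the initial call plus two acknowledgements before the caller is released.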

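- TCP receive callback: recv_tcp

Prototype: static err_t recv_tcp(void *arg, struct tcp_pcb *pcb, struct pbuf *p, err_t err)

When the TCP kernel receives data for a TCP control block, the callback registered by default is recv_tcp. Like recv_udp, it delivers the packet pbuf to the recvmbox mailbox of the connection structure netconn. Unlike recv_udp, the packet it posts is not wrapped in a netbuf; it is still a plain pbuf. The application fetches a packet from the mailbox by calling netconn_recv, and if that succeeds, netconn_recv itself assembles the TCP pbuf into a netbuf and returns the whole netbuf to the user program. The implementation is as follows: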

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Receive callback function for TCP netconns.

* Posts the packet to conn->recvmbox, but doesn't delete it on errors.

* @see tcp.h (struct tcp_pcb.recv) for parameters and return value

*/

static err_t recv_tcp(void *arg, struct tcp_pcb *pcb, struct pbuf *p, err_t err)

{

struct netconn *conn = (struct netconn *)arg;

u16_t len;

if (conn == NULL) {

return ERR_VAL;

}

if (!sys_mbox_valid(&conn->recvmbox)) {

/* recvmbox already deleted */

if (p != NULL) {

tcp_recved(pcb, p->tot_len);

pbuf_free(p);

}

return ERR_OK;

}

/* Unlike for UDP or RAW pcbs, don't check for available space

using recv_avail since that could break the connection

(data is already ACKed) */

if (p != NULL) {

len = p->tot_len;

} else {

len = 0;

}

if (sys_mbox_trypost(&conn->recvmbox, p) != ERR_OK) {

/* don't deallocate p: it is presented to us later again from tcp_fasttmr! */

return ERR_MEM;

} else {

/* Register event with callback */

API_EVENT(conn, NETCONN_EVT_RCVPLUS, len);

}

return ERR_OK;

}

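- TCP error callback: err_tcp

Prototype: static void err_tcp(void *arg, err_t err)

In the TCP kernel, when an error occurs on a connection, the errf function registered in the TCP control block is called back. By the time the error is reported, the kernel has already deleted the TCP control block. err_tcp is the default error handler: it posts an empty message to both mailboxes of the netconn structure to tell the upper layer that the connection has failed. It also checks, from the connection state, whether an API function is currently blocked on the connection, and if so it releases the semaphore op_completed so that the API function unblocks. The implementation is as follows: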

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Error callback function for TCP netconns.

* Signals conn->sem, posts to all conn mboxes and calls API_EVENT.

* The application thread has then to decide what to do.

* @see tcp.h (struct tcp_pcb.err) for parameters

*/

static void err_tcp(void *arg, err_t err)

{

struct netconn *conn = (struct netconn *)arg;

enum netconn_state old_state;

SYS_ARCH_DECL_PROTECT(lev);

LWIP_ASSERT("conn != NULL", (conn != NULL));

conn->pcb.tcp = NULL;

/* no check since this is always fatal! */

SYS_ARCH_PROTECT(lev);

conn->last_err = err;

SYS_ARCH_UNPROTECT(lev);

/* reset conn->state now before waking up other threads */

old_state = conn->state;

conn->state = NETCONN_NONE;

/* Notify the user layer about a connection error. Used to signal

select. */

API_EVENT(conn, NETCONN_EVT_ERROR, 0);

/* Try to release selects pending on 'read' or 'write', too.

They will get an error if they actually try to read or write. */

API_EVENT(conn, NETCONN_EVT_RCVPLUS, 0);

API_EVENT(conn, NETCONN_EVT_SENDPLUS, 0);

/* pass NULL-message to recvmbox to wake up pending recv */

if (sys_mbox_valid(&conn->recvmbox)) {

/* use trypost to prevent deadlock */

sys_mbox_trypost(&conn->recvmbox, NULL);

}

/* pass NULL-message to acceptmbox to wake up pending accept */

if (sys_mbox_valid(&conn->acceptmbox)) {

/* use trypost to preven deadlock */

sys_mbox_trypost(&conn->acceptmbox, NULL);

}

if ((old_state == NETCONN_WRITE) || (old_state == NETCONN_CLOSE) ||

(old_state == NETCONN_CONNECT)) {

/* calling do_writemore/do_close_internal is not necessary

since the pcb has already been deleted! */

int was_nonblocking_connect = IN_NONBLOCKING_CONNECT(conn);

SET_NONBLOCKING_CONNECT(conn, 0);

if (!was_nonblocking_connect) {

/* set error return code */

LWIP_ASSERT("conn->current_msg != NULL", conn->current_msg != NULL);

conn->current_msg->err = err;

conn->current_msg = NULL;

/* wake up the waiting task */

sys_sem_signal(&conn->op_completed);

}

} else {

LWIP_ASSERT("conn->current_msg == NULL", conn->current_msg == NULL);

}

}

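- TCP poll callback: poll_tcp

Prototype: static err_t poll_tcp(void *arg, struct tcp_pcb *pcb)

The TCP slow timer tcp_slowtmr can periodically call a user-registered poll function so that the user can handle periodic events. The default callback registered in the poll field of the TCP control block is poll_tcp, with a period of 4 slow-timer ticks, that is, 2 seconds. It checks whether the connection netconn is still in the sending state and, if so, calls do_writemore to send the remaining data on the connection. Once all data has been sent, the semaphore op_completed in the netconn structure is released, netconn_write unblocks, and the user program continues. The implementation is as follows: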

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Poll callback function for TCP netconns.

* Wakes up an application thread that waits for a connection to close

* or data to be sent. The application thread then takes the

* appropriate action to go on.

* Signals the conn->sem.

* netconn_close waits for conn->sem if closing failed.

* @see tcp.h (struct tcp_pcb.poll) for parameters and return value

*/

static err_t poll_tcp(void *arg, struct tcp_pcb *pcb)

{

struct netconn *conn = (struct netconn *)arg;

LWIP_ASSERT("conn != NULL", (conn != NULL));

if (conn->state == NETCONN_WRITE) {

do_writemore(conn);

} else if (conn->state == NETCONN_CLOSE) {

do_close_internal(conn);

}

/* @todo: implement connect timeout here? */

/* Did a nonblocking write fail before? Then check available write-space. */

if (conn->flags & NETCONN_FLAG_CHECK_WRITESPACE) {

/* If the queued byte- or pbuf-count drops below the configured low-water limit,

let select mark this pcb as writable again. */

if ((conn->pcb.tcp != NULL) && (tcp_sndbuf(conn->pcb.tcp) > TCP_SNDLOWAT) &&

(tcp_sndqueuelen(conn->pcb.tcp) < TCP_SNDQUEUELOWAT)) {

conn->flags &= ~NETCONN_FLAG_CHECK_WRITESPACE;

API_EVENT(conn, NETCONN_EVT_SENDPLUS, 0);

}

}

return ERR_OK;

}

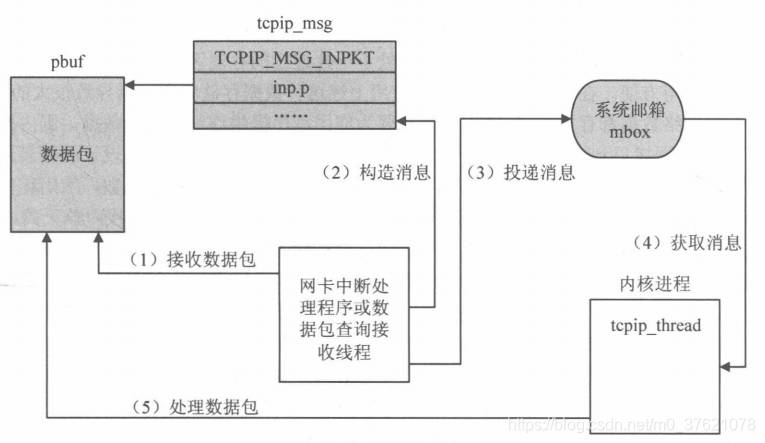

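2.5 Handling Low-Level Packet Messages

When LwIP is ported without an operating system emulation layer and an Ethernet card is used, the packet input function passed to netif_add when adding the network interface is ethernet_input. When it is ported with an operating system emulation layer, the input function passed to netif_add is tcpip_input. So after the network interface receives a packet, tcpip_input is called back to feed the packet to the kernel thread. The implementation is as follows: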

// rt-thread\components\net\lwip-1.4.1\src\api\tcpip.c

/**

* Pass a received packet to tcpip_thread for input processing

*

* @param p the received packet, p->payload pointing to the Ethernet header or

* to an IP header (if inp doesn't have NETIF_FLAG_ETHARP or

* NETIF_FLAG_ETHERNET flags)

* @param inp the network interface on which the packet was received

*/

err_t tcpip_input(struct pbuf *p, struct netif *inp)

{

struct tcpip_msg *msg;

if (!sys_mbox_valid(&mbox)) {

return ERR_VAL;

}

msg = (struct tcpip_msg *)memp_malloc(MEMP_TCPIP_MSG_INPKT);

if (msg == NULL) {

return ERR_MEM;

}

msg->type = TCPIP_MSG_INPKT;

msg->msg.inp.p = p;

msg->msg.inp.netif = inp;

if (sys_mbox_trypost(&mbox, msg) != ERR_OK) {

memp_free(MEMP_TCPIP_MSG_INPKT, msg);

return ERR_MEM;

}

return ERR_OK;

}


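tcpip_input allocates a system message structure from the memory pool, initializes the fields that belong to the message type, and finally posts a TCPIP_MSG_INPKT message to the system mailbox, where it waits to be handled by the kernel process tcpip_thread. For an Ethernet interface the kernel process handles the message by calling ethernet_input, so handling packets through the message mechanism has the same effect as registering ethernet_input directly as the NIC receive callback. The packet processing flow does change, however, as the figure below shows:

Without an operating system emulation layer, the interrupt handler or packet-polling thread has to receive a packet, call the kernel functions in turn to process it, and only then move on to the next packet. With an operating system emulation layer, the interrupt handler or packet-polling thread only has to receive the packet, wrap it in a message and post it to the system mailbox, after which it can go straight on to receiving the next packet. This shortens the time spent in the interrupt handler and improves the NIC's receive throughput.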
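The producer side of this hand-off can be sketched without any lwIP dependency. The code below is an illustrative model under stated assumptions: the demo_ names, the mailbox depth and the use of malloc in place of a memory pool are all hypothetical. It shows the same three steps as tcpip_input: allocate a message, fill it in, and try to post it without blocking, giving the message back if the mailbox is full.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define DEMO_MBOX_SIZE 4   /* hypothetical mailbox depth */
#define DEMO_MSG_INPKT 1   /* hypothetical message type tag */

enum demo_err { DEMO_OK = 0, DEMO_MEM = -1 };

struct demo_msg { int type; void *pkt; };

/* Fixed-capacity ring buffer standing in for the system mailbox. */
struct demo_mbox {
    void  *slot[DEMO_MBOX_SIZE];
    size_t head;   /* index of the oldest message */
    size_t count;  /* number of queued messages */
};

/* Non-blocking post: fails instead of waiting when the mailbox is full. */
enum demo_err demo_mbox_trypost(struct demo_mbox *mb, void *msg)
{
    if (mb->count == DEMO_MBOX_SIZE) {
        return DEMO_MEM;
    }
    mb->slot[(mb->head + mb->count) % DEMO_MBOX_SIZE] = msg;
    mb->count++;
    return DEMO_OK;
}

/* Fetch the oldest message, or NULL when the mailbox is empty. */
void *demo_mbox_tryfetch(struct demo_mbox *mb)
{
    void *msg;
    if (mb->count == 0) {
        return NULL;
    }
    msg = mb->slot[mb->head];
    mb->head = (mb->head + 1) % DEMO_MBOX_SIZE;
    mb->count--;
    return msg;
}

/* Receive-side producer: wrap the packet in a message and queue it.
 * It never blocks, which keeps the interrupt or polling path short. */
enum demo_err demo_input(struct demo_mbox *mb, void *pkt)
{
    struct demo_msg *msg = malloc(sizeof *msg);
    if (msg == NULL) {
        return DEMO_MEM;
    }
    msg->type = DEMO_MSG_INPKT;
    msg->pkt  = pkt;
    if (demo_mbox_trypost(mb, msg) != DEMO_OK) {
        free(msg);            /* mailbox full: release the message */
        return DEMO_MEM;
    }
    return DEMO_OK;
}
```

When demo_input reports an error the caller still owns the packet and has to drop or free it, which mirrors how a driver is expected to react when tcpip_input does not return ERR_OK.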

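3. Sequential API implementation in the protocol stack

3.1 The user data buffer structure netbuf

The protocol stack kernel uses the pbuf structure to describe packets, while the user process uses netbuf. As mentioned earlier when the kernel callback functions were introduced, received data is posted to the recvmbox mailbox of the netconn: for UDP and RAW connections the callback wraps the pbuf in a netbuf before posting it, and for TCP connections the pbuf is wrapped in a netbuf by netconn_recv, as the code later in this article shows. The netbuf structure is a further wrapper around pbuf that adds fields such as the IP address and port, and applications can use it to manage the buffers for data to be sent and data received. The netbuf structure is defined as follows: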

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\netbuf.h

struct netbuf {

struct pbuf *p, *ptr;

ip_addr_t addr;

u16_t port;

};


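A netbuf is really just a header; the data itself lives in the pbuf chain pointed to by field p. Field ptr also points into the netbuf's pbuf chain, but differs from p: p always points to the first pbuf in the chain, whereas ptr may point to another position in the chain (the source documentation describes it as the fragment pointer). The remaining two fields, addr and port, record the IP address and port number of the sender. The relationship between the user data buffer netbuf and the kernel packet structure pbuf is as follows:

3.2 Working with user data buffers

netbuf is the basic structure an application uses to describe data to be sent and data received. For both UDP and TCP connections, data received by the protocol stack reaches the application wrapped in a netbuf. On the sending side, the connection type determines how data is handled: for a TCP connection the user only supplies the start address and length of the data, and the kernel packs it into suitably sized packets and places them on the send queue; for UDP the user has to wrap the data in a netbuf, and when the send function is called the kernel sends the data in that packet directly.

Introducing netbuf may seem to complicate applications, but the kernel provides a set of helper functions that read and fill netbuf data directly, which makes application development easier. The main functions are listed below (they call the pbuf functions underneath, and the implementations are simple enough to omit here):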

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\netbuf.h

/* Network buffer functions: */

// * Create (allocate) and initialize a new netbuf. The netbuf doesn't yet contain a packet buffer!

struct netbuf * netbuf_new (void);

// * Deallocate a netbuf allocated by netbuf_new().

void netbuf_delete (struct netbuf *buf);

// * Allocate memory for a packet buffer for a given netbuf.

void * netbuf_alloc (struct netbuf *buf, u16_t size);

// * Free the packet buffer included in a netbuf

void netbuf_free (struct netbuf *buf);

// * Let a netbuf reference existing (non-volatile) data.

err_t netbuf_ref (struct netbuf *buf, const void *dataptr, u16_t size);

// * Chain one netbuf to another (@see pbuf_chain)

void netbuf_chain (struct netbuf *head, struct netbuf *tail);

// * Get the data pointer and length of the data inside a netbuf.

err_t netbuf_data (struct netbuf *buf, void **dataptr, u16_t *len);

// * Move the current data pointer of a packet buffer contained in a netbuf to the next part. The packet buffer itself is not modified.

s8_t netbuf_next (struct netbuf *buf);

// * Move the current data pointer of a packet buffer contained in a netbuf to the beginning of the packet. The packet buffer itself is not modified.

void netbuf_first (struct netbuf *buf);
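How p and ptr work together is easiest to see on a chain of several segments. The sketch below is a cut-down model, not the lwIP implementation: the demo_ structures keep only the fields needed for traversal, and the three functions imitate netbuf_first, netbuf_next and netbuf_data, including the documented return convention of netbuf_next (negative when there is no next part, positive when the new part is the last one, zero otherwise).

```c
#include <assert.h>
#include <stddef.h>

/* Cut-down pbuf: one segment of a packet plus a link to the next one. */
struct demo_pbuf {
    struct demo_pbuf *next;
    const void       *payload;
    size_t            len;
};

/* Cut-down netbuf: p is the chain head, ptr is the current segment. */
struct demo_netbuf {
    struct demo_pbuf *p;
    struct demo_pbuf *ptr;
};

/* Point ptr back at the first segment (models netbuf_first). */
void demo_netbuf_first(struct demo_netbuf *buf)
{
    buf->ptr = buf->p;
}

/* Advance ptr by one segment (models netbuf_next). Returns -1 if there is
 * no next segment, 1 if the new segment is the last one, 0 otherwise. */
int demo_netbuf_next(struct demo_netbuf *buf)
{
    if (buf->ptr->next == NULL) {
        return -1;
    }
    buf->ptr = buf->ptr->next;
    return (buf->ptr->next == NULL) ? 1 : 0;
}

/* Expose the current segment without copying (models netbuf_data). */
void demo_netbuf_data(const struct demo_netbuf *buf,
                      const void **data, size_t *len)
{
    *data = buf->ptr->payload;
    *len  = buf->ptr->len;
}

/* Walk every segment and add up the payload lengths. */
size_t demo_netbuf_total(struct demo_netbuf *buf)
{
    const void *data;
    size_t len;
    size_t total = 0;

    demo_netbuf_first(buf);
    do {
        demo_netbuf_data(buf, &data, &len);
        total += len;
    } while (demo_netbuf_next(buf) >= 0);
    return total;
}
```

Only ptr moves during the walk; p keeps pointing at the head, so the whole chain can still be freed or rewound afterwards.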


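3.3 The connection structure netconn

With Raw/Callback API programming, the different connection types (RAW, UDP and TCP) use three unrelated sets of functions (raw_xxx, udp_xxx and tcp_xxx). Readers familiar with BSD Socket programming know that sockets give the user a single unified interface. As described earlier, the kernel API (do_xxx) also provides a unified interface by means of the type field of the netconn structure. netconn describes a connection abstractly for use by applications, and the stack's API functions wrap the per-type operations uniformly, so user programs can ignore the differences between connection types and use one connection structure and one set of functions. The netconn structure is defined as follows: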

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\api.h

/** Protocol family and type of the netconn */

enum netconn_type {

NETCONN_INVALID = 0,

/* NETCONN_TCP Group */

NETCONN_TCP = 0x10,

/* NETCONN_UDP Group */

NETCONN_UDP = 0x20,

NETCONN_UDPLITE = 0x21,

NETCONN_UDPNOCHKSUM= 0x22,

/* NETCONN_RAW Group */

NETCONN_RAW = 0x40

};

/** Current state of the netconn. Non-TCP netconns are always

* in state NETCONN_NONE! */

enum netconn_state {

NETCONN_NONE,

NETCONN_WRITE,

NETCONN_LISTEN,

NETCONN_CONNECT,

NETCONN_CLOSE

};

/** A callback prototype to inform about events for a netconn */

typedef void (* netconn_callback)(struct netconn *, enum netconn_evt, u16_t len);

/** A netconn descriptor */

struct netconn {

/** type of the netconn (TCP, UDP or RAW) */

enum netconn_type type;

/** current state of the netconn */

enum netconn_state state;

/** the lwIP internal protocol control block */

union {

struct ip_pcb *ip;

struct tcp_pcb *tcp;

struct udp_pcb *udp;

struct raw_pcb *raw;

} pcb;

/** the last error this netconn had */

err_t last_err;

/** sem that is used to synchroneously execute functions in the core context */

sys_sem_t op_completed;

/** mbox where received packets are stored until they are fetched

by the netconn application thread (can grow quite big) */

sys_mbox_t recvmbox;

/** mbox where new connections are stored until processed

by the application thread */

sys_mbox_t acceptmbox;

/** only used for socket layer */

int socket;

/** maximum amount of bytes queued in recvmbox

not used for TCP: adjust TCP_WND instead! */

int recv_bufsize;

/** number of bytes currently in recvmbox to be received,

tested against recv_bufsize to limit bytes on recvmbox

for UDP and RAW, used for FIONREAD */

s16_t recv_avail;

/** flags holding more netconn-internal state, see NETCONN_FLAG_* defines */

u8_t flags;

/** TCP: when data passed to netconn_write doesn't fit into the send buffer,

this temporarily stores how much is already sent. */

size_t write_offset;

/** TCP: when data passed to netconn_write doesn't fit into the send buffer,

this temporarily stores the message.

Also used during connect and close. */

struct api_msg_msg *current_msg;

/** A callback function that is informed about events for this netconn */

netconn_callback callback;

};

/* Flags for netconn_write (u8_t) */

#define NETCONN_NOFLAG 0x00

#define NETCONN_NOCOPY 0x00 /* Only for source code compatibility */

#define NETCONN_COPY 0x01

#define NETCONN_MORE 0x02

#define NETCONN_DONTBLOCK 0x04

/* Flags for struct netconn.flags (u8_t) */

/** TCP: when data passed to netconn_write doesn't fit into the send buffer,

this temporarily stores whether to wake up the original application task

if data couldn't be sent in the first try. */

#define NETCONN_FLAG_WRITE_DELAYED 0x01

/** Should this netconn avoid blocking? */

#define NETCONN_FLAG_NON_BLOCKING 0x02

/** Was the last connect action a non-blocking one? */

#define NETCONN_FLAG_IN_NONBLOCKING_CONNECT 0x04

/** If this is set, a TCP netconn must call netconn_recved() to update

the TCP receive window (done automatically if not set). */

#define NETCONN_FLAG_NO_AUTO_RECVED 0x08

/** If a nonblocking write has been rejected before, poll_tcp needs to

check if the netconn is writable again */

#define NETCONN_FLAG_CHECK_WRITESPACE 0x10


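In the structure above, the socket and callback fields are used when implementing the BSD Socket API. Because the Socket API is built on top of the Sequential API, these two fields mainly exist to support the Socket API implementation.

The type field gives the connection type, which can be RAW, UDP or TCP; the connection structure provides a unified abstraction over connections of these three protocols. The unified interface of the kernel API (do_xxx) described earlier uses the value of this field to decide which Raw/Callback API function to call. The pcb union records the kernel control block (RAW, UDP or TCP) that matches the connection type. The last_err field records the last error produced by a function call on the connection.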
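The type-based dispatch can be illustrated with a small stand-alone sketch. It copies the numeric values from enum netconn_type in the listing above and assumes, as those values suggest, that the protocol group is the high nibble of the type. The demo_ names are hypothetical, and the function returns the name of the Raw/Callback function rather than calling it.

```c
#include <assert.h>
#include <string.h>

/* Values copied from enum netconn_type in the listing above. */
enum demo_type {
    DEMO_TCP         = 0x10,
    DEMO_UDP         = 0x20,
    DEMO_UDPLITE     = 0x21,
    DEMO_UDPNOCHKSUM = 0x22,
    DEMO_RAW         = 0x40
};

/* Assumption: the high nibble is the protocol group, the low nibble a variant. */
#define DEMO_TYPE_GROUP(t) ((t) & 0xF0)

/* Hypothetical stand-in for do_bind: names the Raw/Callback function that
 * the kernel side would call for this connection type. */
const char *demo_bind_target(enum demo_type t)
{
    switch (DEMO_TYPE_GROUP(t)) {
    case DEMO_TCP: return "tcp_bind";
    case DEMO_UDP: return "udp_bind";
    case DEMO_RAW: return "raw_bind";
    default:       return "invalid";
    }
}
```

Grouping by the high nibble means the UDP variants (UDP-Lite, UDP without checksum) share one branch, so new variants need no extra case in the dispatcher.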

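The state field describes the current state of the connection. These states are not the states of the TCP state machine; state is a simplified, connection-level abstraction, and some API functions choose how to proceed based on it (as the comment in the code notes, non-TCP netconns always stay in NETCONN_NONE). The flags field records attributes of the connection that mostly describe how data on the connection is handled in particular situations, for example whether the send function blocks when the send buffer is full, or whether the kernel receive window is updated automatically after data has been received. The possible values of flags are listed in the code above.

op_completed is the key field for synchronizing the user process with the kernel process. As described in the section on the message mechanism, a netconn_xxx function blocks on this semaphore after posting its message, and the semaphore is released once the kernel's do_xxx function has finished.

recvmbox is the connection's receive mailbox, which can also be thought of as a buffer queue. The kernel posts every packet that belongs to the connection to this mailbox (wrapped in a netbuf for UDP and RAW, as a bare pbuf for TCP), and when the application calls a receive function it waits on this mailbox and takes one packet from it. recv_avail records the total length of the data currently buffered in the mailbox, and recv_bufsize is the maximum amount of data the mailbox may buffer. These two fields are used mainly for UDP: since UDP has no flow control, they allow new data to be dropped when the receiver cannot keep up, so that the mailbox does not overflow.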
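The accounting behind recv_avail and recv_bufsize amounts to a few lines. The following is a minimal sketch under the assumption that a datagram is dropped whenever queuing it would push the buffered total past the limit; the demo_ names are hypothetical and only the two counters are modelled, not the mailbox itself.

```c
#include <assert.h>
#include <stdbool.h>

/* Only the two accounting fields of the connection are modelled. */
struct demo_conn {
    int recv_bufsize;  /* upper bound on buffered bytes */
    int recv_avail;    /* bytes currently buffered */
};

/* Called when a datagram of len bytes arrives. Returns true if it is
 * queued, false if it is dropped because the limit would be exceeded. */
bool demo_on_datagram(struct demo_conn *c, int len)
{
    if (c->recv_avail + len > c->recv_bufsize) {
        return false;
    }
    c->recv_avail += len;
    return true;
}

/* Called when the application has taken len bytes out of the mailbox. */
void demo_on_app_recv(struct demo_conn *c, int len)
{
    assert(len <= c->recv_avail);
    c->recv_avail -= len;
}
```

Dropping at the producer is the only option for UDP, because there is no window the stack could shrink to slow the sender down.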

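acceptmbox is used when the connection acts as a TCP server. The kernel posts every newly established netconn to this mailbox; the server program obtains a new connection structure by calling netconn_accept and can then communicate over it.

The remaining two fields, current_msg and write_offset, relate to sending data and are used mainly on TCP connections. If the send buffer is too small when data is sent, sending is deferred and the unsent data is recorded in these two fields. The kernel retries sending it in the TCP periodic poll function, or after TCP has successfully sent data on the connection.

Now let's look at how a netconn structure is allocated and freed, and how the fields are handled: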

// rt-thread\components\net\lwip-1.4.1\src\api\api_msg.c

/**

* Create a new netconn (of a specific type) that has a callback function.

* The corresponding pcb is NOT created!

* @param t the type of 'connection' to create (@see enum netconn_type)

* @param proto the IP protocol for RAW IP pcbs

* @param callback a function to call on status changes (RX available, TX'ed)

* @return a newly allocated struct netconn or

* NULL on memory error

*/

struct netconn* netconn_alloc(enum netconn_type t, netconn_callback callback)

{

struct netconn *conn;

int size;

conn = (struct netconn *)memp_malloc(MEMP_NETCONN);

if (conn == NULL) {

return NULL;

}

conn->last_err = ERR_OK;

conn->type = t;

conn->pcb.tcp = NULL;

switch(NETCONNTYPE_GROUP(t)) {

case NETCONN_RAW:

size = DEFAULT_RAW_RECVMBOX_SIZE;

break;

case NETCONN_UDP:

size = DEFAULT_UDP_RECVMBOX_SIZE;

break;

case NETCONN_TCP:

size = DEFAULT_TCP_RECVMBOX_SIZE;

break;

default:

LWIP_ASSERT("netconn_alloc: undefined netconn_type", 0);

goto free_and_return;

}

if (sys_sem_new(&conn->op_completed, 0) != ERR_OK) {

goto free_and_return;

}

if (sys_mbox_new(&conn->recvmbox, size) != ERR_OK) {

sys_sem_free(&conn->op_completed);

goto free_and_return;

}

sys_mbox_set_invalid(&conn->acceptmbox);

conn->state = NETCONN_NONE;

#if LWIP_SOCKET

/* initialize socket to -1 since 0 is a valid socket */

conn->socket = -1;

#endif /* LWIP_SOCKET */

conn->callback = callback;

conn->current_msg = NULL;

conn->write_offset = 0;

conn->recv_bufsize = RECV_BUFSIZE_DEFAULT;

conn->recv_avail = 0;

conn->flags = 0;

return conn;

free_and_return:

memp_free(MEMP_NETCONN, conn);

return NULL;

}

/**

* Delete a netconn and all its resources.

* The pcb is NOT freed (since we might not be in the right thread context do this).

* @param conn the netconn to free

*/

void netconn_free(struct netconn *conn)

{

LWIP_ASSERT("PCB must be deallocated outside this function", conn->pcb.tcp == NULL);

LWIP_ASSERT("recvmbox must be deallocated before calling this function",

!sys_mbox_valid(&conn->recvmbox));

LWIP_ASSERT("acceptmbox must be deallocated before calling this function",

!sys_mbox_valid(&conn->acceptmbox));

sys_sem_free(&conn->op_completed);

sys_sem_set_invalid(&conn->op_completed);

memp_free(MEMP_NETCONN, conn);

}


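3.4 Sequential API interface functions

Raw/Callback API programming mainly operates on the connection control blocks (the RAW, UDP and TCP control blocks), whereas Sequential API programming mainly operates on the netconn connection structure. By calling Sequential API functions, a user process can perform every operation the Raw/Callback API supports, including connection management, receiving data and sending data. The main netconn functions the kernel exposes to user processes are: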

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\api.h

/* Network connection functions: */

// * Create a new netconn (of a specific type) that has a callback function. The corresponding pcb is also created.

struct netconn *netconn_new_with_proto_and_callback(enum netconn_type t, u8_t proto,

netconn_callback callback);

#define netconn_new(t) netconn_new_with_proto_and_callback(t, 0, NULL)

#define netconn_new_with_callback(t, c) netconn_new_with_proto_and_callback(t, 0, c)

// * Close a netconn 'connection' and free its resources. UDP and RAW connection are completely closed, TCP pcbs might still be in a waitstate after this returns.

err_t netconn_delete(struct netconn *conn);

// * Get the local or remote IP address and port of a netconn. For RAW netconns, this returns the protocol instead of a port!

err_t netconn_getaddr(struct netconn *conn, ip_addr_t *addr, u16_t *port, u8_t local);

#define netconn_peer(c,i,p) netconn_getaddr(c,i,p,0)

#define netconn_addr(c,i,p) netconn_getaddr(c,i,p,1)

// * Bind a netconn to a specific local IP address and port.

err_t netconn_bind(struct netconn *conn, ip_addr_t *addr, u16_t port);

// * Connect a netconn to a specific remote IP address and port.

err_t netconn_connect(struct netconn *conn, ip_addr_t *addr, u16_t port);

// * Disconnect a netconn from its current peer (only valid for UDP netconns).

err_t netconn_disconnect (struct netconn *conn);

// * Set a TCP netconn into listen mode

err_t netconn_listen_with_backlog(struct netconn *conn, u8_t backlog);

#define netconn_listen(conn) netconn_listen_with_backlog(conn, TCP_DEFAULT_LISTEN_BACKLOG)

// * Accept a new connection on a TCP listening netconn.

err_t netconn_accept(struct netconn *conn, struct netconn **new_conn);

// * Receive data (in form of a netbuf containing a packet buffer) from a netconn

err_t netconn_recv(struct netconn *conn, struct netbuf **new_buf);

// * Receive data (in form of a pbuf) from a TCP netconn

err_t netconn_recv_tcp_pbuf(struct netconn *conn, struct pbuf **new_buf);

// * TCP: update the receive window: by calling this, the application tells the stack that it has processed data and is able to accept new data.

void netconn_recved(struct netconn *conn, u32_t length);

// * Send data (in form of a netbuf) to a specific remote IP address and port. Only to be used for UDP and RAW netconns (not TCP).

err_t netconn_sendto(struct netconn *conn, struct netbuf *buf, ip_addr_t *addr, u16_t port);

// * Send data over a UDP or RAW netconn (that is already connected).

err_t netconn_send(struct netconn *conn, struct netbuf *buf);

// * Send data over a TCP netconn.

err_t netconn_write_partly(struct netconn *conn, const void *dataptr, size_t size,

u8_t apiflags, size_t *bytes_written);

#define netconn_write(conn, dataptr, size, apiflags) \

netconn_write_partly(conn, dataptr, size, apiflags, NULL)

// * Close a TCP netconn (doesn't delete it).

err_t netconn_close(struct netconn *conn);

// * Shut down one or both sides of a TCP netconn (doesn't delete it).

err_t netconn_shutdown(struct netconn *conn, u8_t shut_rx, u8_t shut_tx);

#if LWIP_IGMP

// * Join multicast groups for UDP netconns.

err_t netconn_join_leave_group(struct netconn *conn, ip_addr_t *multiaddr,

ip_addr_t *netif_addr, enum netconn_igmp join_or_leave);

#endif /* LWIP_IGMP */

#if LWIP_DNS

// * Execute a DNS query, only one IP address is returned

err_t netconn_gethostbyname(const char *name, ip_addr_t *addr);

#endif /* LWIP_DNS */


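The user-process API functions (netconn_xxx) above correspond almost one-to-one with the kernel-process API functions (do_xxx); calling netconn_xxx ultimately ends up calling do_xxx. netconn_xxx mainly builds the corresponding api_msg structure and posts it through the mailbox to the kernel process tcpip_thread, which runs the matching do_xxx. To keep the user process and the kernel process synchronized, the op_completed semaphore is also acquired and released (see the tcpip_apimsg function described earlier). The implementation of netconn_bind was shown earlier and the other functions follow similar logic, so they are not all shown here. The more complex receive function netconn_recv serves as a further example: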

// rt-thread\components\net\lwip-1.4.1\src\api\api_lib.c

/**

* Receive data (in form of a netbuf containing a packet buffer) from a netconn

* @param conn the netconn from which to receive data

* @param new_buf pointer where a new netbuf is stored when received data

* @return ERR_OK if data has been received, an error code otherwise (timeout,

* memory error or another error)

*/

err_t netconn_recv(struct netconn *conn, struct netbuf **new_buf)

{

struct netbuf *buf = NULL;

err_t err;

LWIP_ERROR("netconn_recv: invalid pointer", (new_buf != NULL), return ERR_ARG;);

*new_buf = NULL;

LWIP_ERROR("netconn_recv: invalid conn", (conn != NULL), return ERR_ARG;);

LWIP_ERROR("netconn_accept: invalid recvmbox", sys_mbox_valid(&conn->recvmbox), return ERR_CONN;);

if (conn->type == NETCONN_TCP)

{

struct pbuf *p = NULL;

/* This is not a listening netconn, since recvmbox is set */

buf = (struct netbuf *)memp_malloc(MEMP_NETBUF);

if (buf == NULL) {

return ERR_MEM;

}

err = netconn_recv_data(conn, (void **)&p);

if (err != ERR_OK) {

memp_free(MEMP_NETBUF, buf);

return err;

}

LWIP_ASSERT("p != NULL", p != NULL);

buf->p = p;

buf->ptr = p;

buf->port = 0;

ip_addr_set_any(&buf->addr);

*new_buf = buf;

/* don't set conn->last_err: it's only ERR_OK, anyway */

return ERR_OK;

}

else

{

return netconn_recv_data(conn, (void **)new_buf);

}

}

/**

* Receive data: actual implementation that doesn't care whether pbuf or netbuf

* is received

* @param conn the netconn from which to receive data

* @param new_buf pointer where a new pbuf/netbuf is stored when received data

* @return ERR_OK if data has been received, an error code otherwise (timeout,

* memory error or another error)

*/

static err_t netconn_recv_data(struct netconn *conn, void **new_buf)

{

void *buf = NULL;

u16_t len;

err_t err;

struct api_msg msg;

LWIP_ERROR("netconn_recv: invalid pointer", (new_buf != NULL), return ERR_ARG;);

*new_buf = NULL;

LWIP_ERROR("netconn_recv: invalid conn", (conn != NULL), return ERR_ARG;);

LWIP_ERROR("netconn_accept: invalid recvmbox", sys_mbox_valid(&conn->recvmbox), return ERR_CONN;);

err = conn->last_err;

if (ERR_IS_FATAL(err)) {

/* don't recv on fatal errors: this might block the application task

waiting on recvmbox forever! */

/* @todo: this does not allow us to fetch data that has been put into recvmbox

before the fatal error occurred - is that a problem? */

return err;

}

sys_arch_mbox_fetch(&conn->recvmbox, &buf, 0);

if (conn->type == NETCONN_TCP)

{

if (!netconn_get_noautorecved(conn) || (buf == NULL)) {

/* Let the stack know that we have taken the data. */

/* TODO: Speedup: Don't block and wait for the answer here

(to prevent multiple thread-switches). */

msg.function = do_recv;

msg.msg.conn = conn;

if (buf != NULL) {

msg.msg.msg.r.len = ((struct pbuf *)buf)->tot_len;

} else {

msg.msg.msg.r.len = 1;

}

/* don't care for the return value of do_recv */

TCPIP_APIMSG(&msg);

}

/* If we are closed, we indicate that we no longer wish to use the socket */

if (buf == NULL) {

API_EVENT(conn, NETCONN_EVT_RCVMINUS, 0);

/* Avoid to lose any previous error code */

return ERR_CLSD;

}

len = ((struct pbuf *)buf)->tot_len;

}

else

{

LWIP_ASSERT("buf != NULL", buf != NULL);

len = netbuf_len((struct netbuf *)buf);

}

SYS_ARCH_DEC(conn->recv_avail, len);

/* Register event with callback */

API_EVENT(conn, NETCONN_EVT_RCVMINUS, len);

*new_buf = buf;

/* don't set conn->last_err: it's only ERR_OK, anyway */

return ERR_OK;

}


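3.5 NETIF API interface functions

As described above, to make buffers easier to handle the user process wraps the kernel pbuf in a netbuf, and to make connections easier to manage it wraps the kernel control block in a netconn; these form the basis of Sequential API programming in the user process. Every netconn_xxx call builds an api_msg, which tcpip_apimsg wraps in a tcpip_msg of type TCPIP_MSG_API and posts through the mailbox to the kernel process tcpip_thread for processing. Besides managing connections and data transfer, user processes also need to manage network interfaces. For this the stack provides the netifapi_msg message structure, defined as follows: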

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\netifapi.h

typedef void (*netifapi_void_fn)(struct netif *netif);

typedef err_t (*netifapi_errt_fn)(struct netif *netif);

struct netifapi_msg_msg {

sys_sem_t sem;

err_t err;

struct netif *netif;

union {

struct {

ip_addr_t *ipaddr;

ip_addr_t *netmask;

ip_addr_t *gw;

void *state;

netif_init_fn init;

netif_input_fn input;

} add;

struct {

netifapi_void_fn voidfunc;

netifapi_errt_fn errtfunc;

} common;

} msg;

};

struct netifapi_msg {

void (* function)(struct netifapi_msg_msg *msg);

struct netifapi_msg_msg msg;

};


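Like api_msg, netifapi_msg consists of a function pointer and its arguments. The argument structure netifapi_msg_msg has four parts: the kernel network interface structure netif; the semaphore sem that synchronizes the user process with the kernel process; err, which records the result of the function; and the union msg, which holds two structures, add with the parameters for adding a network interface and common with the function pointers.

The main operations an application process can perform on a network interface through netifapi_msg are listed below (compare them with the netif functions):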

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\netifapi.h

/* API for application */

err_t netifapi_netif_add ( struct netif *netif,

ip_addr_t *ipaddr,

ip_addr_t *netmask,

ip_addr_t *gw,

void *state,

netif_init_fn init,

netif_input_fn input);

err_t netifapi_netif_set_addr ( struct netif *netif,

ip_addr_t *ipaddr,

ip_addr_t *netmask,

ip_addr_t *gw );

err_t netifapi_netif_common ( struct netif *netif,

netifapi_void_fn voidfunc,

netifapi_errt_fn errtfunc);

#define netifapi_netif_remove(n) netifapi_netif_common(n, netif_remove, NULL)

#define netifapi_netif_set_up(n) netifapi_netif_common(n, netif_set_up, NULL)

#define netifapi_netif_set_down(n) netifapi_netif_common(n, netif_set_down, NULL)

#define netifapi_netif_set_default(n) netifapi_netif_common(n, netif_set_default, NULL)

#define netifapi_dhcp_start(n) netifapi_netif_common(n, NULL, dhcp_start)

#define netifapi_dhcp_stop(n) netifapi_netif_common(n, dhcp_stop, NULL)

#define netifapi_autoip_start(n) netifapi_netif_common(n, NULL, autoip_start)

#define netifapi_autoip_stop(n) netifapi_netif_common(n, NULL, autoip_stop)


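The processing also mirrors that of api_msg: for netifapi_msg there are netifapi_xxx interface functions to call in the user process, and do_netifapi_xxx functions in the kernel process that do the actual work. The kernel process implements three do_netifapi_xxx functions: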

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\tcpip.h

#define TCPIP_NETIFAPI_ACK(m) sys_sem_signal(&m->sem)

// rt-thread\components\net\lwip-1.4.1\src\api\netifapi.c

/**

* Call netif_add() inside the tcpip_thread context.

*/

void do_netifapi_netif_add(struct netifapi_msg_msg *msg)

{

if (!netif_add( msg->netif,

msg->msg.add.ipaddr,

msg->msg.add.netmask,

msg->msg.add.gw,

msg->msg.add.state,

msg->msg.add.init,

msg->msg.add.input)) {

msg->err = ERR_IF;

} else {

msg->err = ERR_OK;

}

TCPIP_NETIFAPI_ACK(msg);

}

/**

* Call netif_set_addr() inside the tcpip_thread context.

*/

void do_netifapi_netif_set_addr(struct netifapi_msg_msg *msg)

{

netif_set_addr( msg->netif,

msg->msg.add.ipaddr,

msg->msg.add.netmask,

msg->msg.add.gw);

msg->err = ERR_OK;

TCPIP_NETIFAPI_ACK(msg);

}

/**

* Call the "errtfunc" (or the "voidfunc" if "errtfunc" is NULL) inside the

* tcpip_thread context.

*/

void do_netifapi_netif_common(struct netifapi_msg_msg *msg)

{

if (msg->msg.common.errtfunc != NULL) {

msg->err = msg->msg.common.errtfunc(msg->netif);

} else {

msg->err = ERR_OK;

msg->msg.common.voidfunc(msg->netif);

}

TCPIP_NETIFAPI_ACK(msg);

}
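The rule used by do_netifapi_netif_common above (prefer errtfunc and keep its result, otherwise run voidfunc and report success) can be tried out with a stand-alone sketch. Everything named demo_ here is hypothetical, including the two sample operations; the macros only imitate the style of the netifapi_* wrappers.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical interface state, just enough to observe the calls. */
struct demo_netif { int up; int started; };

typedef void (*demo_void_fn)(struct demo_netif *);
typedef int  (*demo_errt_fn)(struct demo_netif *);

/* Prefer errtfunc and return its result; otherwise run voidfunc and
 * report success (0). */
int demo_common(struct demo_netif *n, demo_void_fn voidfunc,
                demo_errt_fn errtfunc)
{
    if (errtfunc != NULL) {
        return errtfunc(n);
    }
    voidfunc(n);
    return 0;
}

/* A void-returning operation: cannot fail. */
void demo_set_up(struct demo_netif *n)
{
    n->up = 1;
}

/* An error-returning operation: fails while the interface is down. */
int demo_start(struct demo_netif *n)
{
    if (!n->up) {
        return -1;
    }
    n->started = 1;
    return 0;
}

/* Wrappers in the style of the netifapi_* macros: exactly one of the
 * two function pointers is non-NULL. */
#define demo_api_set_up(n) demo_common((n), demo_set_up, NULL)
#define demo_api_start(n)  demo_common((n), NULL, demo_start)
```

Passing the operation as a function pointer is what lets a single message handler serve every single-argument netif operation, at the cost of the caller having to put the pointer in the right slot.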


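In the user process only three netifapi_xxx functions are actually implemented; the rest are macros that pass in different function pointers. The three functions are implemented as follows: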

// rt-thread\components\net\lwip-1.4.1\src\include\lwip\tcpip.h

#define TCPIP_NETIFAPI(m) tcpip_netifapi(m)

// rt-thread\components\net\lwip-1.4.1\src\api\netifapi.c

/**

* Call netif_add() in a thread-safe way by running that function inside the

* tcpip_thread context.

* @note for params @see netif_add()

*/

err_t netifapi_netif_add(struct netif *netif,

ip_addr_t *ipaddr,

ip_addr_t *netmask,

ip_addr_t *gw,

void *state,

netif_init_fn init,

netif_input_fn input)

{

struct netifapi_msg msg;

msg.function = do_netifapi_netif_add;

msg.msg.netif = netif;

msg.msg.msg.add.ipaddr = ipaddr;

msg.msg.msg.add.netmask = netmask;

msg.msg.msg.add.gw = gw;

msg.msg.msg.add.state = state;

msg.msg.msg.add.init = init;

msg.msg.msg.add.input = input;

TCPIP_NETIFAPI(&msg);

return msg.msg.err;

}

/**

* Call netif_set_addr() in a thread-safe way by running that function inside the

* tcpip_thread context.

* @note for params @see netif_set_addr()

*/

err_t netifapi_netif_set_addr(struct netif *netif,

ip_addr_t *ipaddr,

ip_addr_t *netmask,

ip_addr_t *gw)

{

struct netifapi_msg msg;

msg.function = do_netifapi_netif_set_addr;

msg.msg.netif = netif;

msg.msg.msg.add.ipaddr = ipaddr;

msg.msg.msg.add.netmask = netmask;

msg.msg.msg.add.gw = gw;

TCPIP_NETIFAPI(&msg);

return msg.msg.err;

}

/**

* call the "errtfunc" (or the "voidfunc" if "errtfunc" is NULL) in a thread-safe

* way by running that function inside the tcpip_thread context.

* @note use only for functions where there is only "netif" parameter.

*/

err_t netifapi_netif_common(struct netif *netif, netifapi_void_fn voidfunc,

netifapi_errt_fn errtfunc)

{

struct netifapi_msg msg;

msg.function = do_netifapi_netif_common;

msg.msg.netif = netif;

msg.msg.msg.common.voidfunc = voidfunc;

msg.msg.msg.common.errtfunc = errtfunc;

TCPIP_NETIFAPI(&msg);

return msg.msg.err;

}


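The function that posts a netifapi_msg is tcpip_netifapi (compare tcpip_apimsg). After posting the message it blocks waiting on the semaphore until the kernel has handled the message and released the semaphore. Its implementation is: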

// rt-thread\components\net\lwip-1.4.1\src\api\tcpip.c

/**

* Much like tcpip_apimsg, but calls the lower part of a netifapi_function.

* @param netifapimsg a struct containing the function to call and its parameters

* @return error code given back by the function that was called

*/

err_t tcpip_netifapi(struct netifapi_msg* netifapimsg)

{

struct tcpip_msg msg;

if (sys_mbox_valid(&mbox)) {

err_t err = sys_sem_new(&netifapimsg->msg.sem, 0);

if (err != ERR_OK) {

netifapimsg->msg.err = err;

return err;

}

msg.type = TCPIP_MSG_NETIFAPI;

msg.msg.netifapimsg = netifapimsg;

sys_mbox_post(&mbox, &msg);

sys_sem_wait(&netifapimsg->msg.sem);

sys_sem_free(&netifapimsg->msg.sem);

return netifapimsg->msg.err;

}

return ERR_VAL;

}
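The post-then-wait round trip in tcpip_netifapi can be reproduced outside lwIP with POSIX threads. This is a sketch under several assumptions: a one-slot mailbox replaces the system mailbox, a mutex and condition variable replace both the mailbox and the semaphore, and the worker handles a single request and exits. All demo_ names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* One request: what to run, its argument, the result, a completion flag. */
struct demo_req {
    int (*function)(int arg);
    int arg;
    int err;
    int done;
};

/* One-slot mailbox plus the lock and condition variable that guard it. */
static pthread_mutex_t demo_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  demo_cond = PTHREAD_COND_INITIALIZER;
static struct demo_req *demo_slot = NULL;

/* Worker standing in for tcpip_thread: handles exactly one request. */
void *demo_worker(void *unused)
{
    struct demo_req *req;
    (void)unused;

    pthread_mutex_lock(&demo_lock);
    while (demo_slot == NULL) {            /* block on the "mailbox" */
        pthread_cond_wait(&demo_cond, &demo_lock);
    }
    req = demo_slot;
    demo_slot = NULL;
    pthread_mutex_unlock(&demo_lock);

    req->err = req->function(req->arg);    /* runs in the worker context */

    pthread_mutex_lock(&demo_lock);
    req->done = 1;                         /* plays the role of the semaphore signal */
    pthread_cond_broadcast(&demo_cond);
    pthread_mutex_unlock(&demo_lock);
    return NULL;
}

/* Caller side: post the request, block until it is done, return the result. */
int demo_call(struct demo_req *req)
{
    pthread_mutex_lock(&demo_lock);
    req->done = 0;
    demo_slot = req;                       /* post to the "mailbox" */
    pthread_cond_broadcast(&demo_cond);
    while (!req->done) {                   /* wait for completion */
        pthread_cond_wait(&demo_cond, &demo_lock);
    }
    pthread_mutex_unlock(&demo_lock);
    return req->err;
}

/* Sample operation to run in the worker. */
int demo_double(int x)
{
    return 2 * x;
}
```

Because the caller blocks until the worker has finished, the request can live on the caller's stack, which is the same reason tcpip_netifapi can use a local tcpip_msg. On toolchains that keep pthreads in a separate library the sketch has to be linked with the thread library.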


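4. Sequential API programming examples

4.1 UDP echo server example

UDP is a connectionless service with very efficient packet delivery, and it is commonly used where data loss and data errors matter less, such as video on demand and Internet telephony. It is also often used for applications inside a LAN, where packet loss and corruption are relatively rare; if the application adds its own acknowledgement and retransmission mechanism, UDP can achieve high reliability as well.

The UDP echo server performs a simple echo function: it returns the data it receives unchanged to the source host. Here the Sequential API is used to build a simple UDP echo server. Create a new file seqapi_udp_demo.c in the applications directory and add the following code: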

// applications\seqapi_udp_demo.c

#include "lwip/api.h"

#include "rtthread.h"

static void seqapi_udpecho_thread(void *arg)

{

static struct netconn *conn;

static struct netbuf *buf;

static ip_addr_t *addr;

static unsigned short port;

err_t err;

LWIP_UNUSED_ARG(arg);

conn = netconn_new(NETCONN_UDP);

LWIP_ASSERT("con != NULL", conn != NULL);

netconn_bind(conn, NULL, 7);

while (1) {

err = netconn_recv(conn, &buf);

if (err == ERR_OK) {

addr = netbuf_fromaddr(buf);

port = netbuf_fromport(buf);

rt_kprintf("addr: %ld, port: %d.\n", addr->addr, port);

err = netconn_send(conn, buf);

if(err != ERR_OK) {

LWIP_DEBUGF(LWIP_DBG_ON, ("netconn_send failed: %d\n", (int)err));

}

netbuf_delete(buf);

rt_thread_mdelay(100);

}

}

}

static void seqapi_udpecho_init(void)

{

sys_thread_new("udpecho", seqapi_udpecho_thread, NULL, 1024, 25);

}

MSH_CMD_EXPORT_ALIAS(seqapi_udpecho_init, seqapi_udpecho, sequential api udpecho init);
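The echo thread above prints the sender address as a raw integer, which is hard to read. lwIP ships its own conversion helper (ipaddr_ntoa), but the idea is simple enough to show on its own. The sketch below assumes the address is held in network byte order, as in ip_addr_t.addr; the function name demo_ip4_to_str is hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Format an IPv4 address held in network byte order as dotted decimal.
 * Reading the value byte by byte in memory order makes the result
 * independent of host endianness. out should hold at least 16 bytes. */
const char *demo_ip4_to_str(uint32_t addr_net, char *out, size_t out_len)
{
    unsigned char b[4];

    memcpy(b, &addr_net, sizeof b);
    snprintf(out, out_len, "%u.%u.%u.%u",
             (unsigned)b[0], (unsigned)b[1], (unsigned)b[2], (unsigned)b[3]);
    return out;
}
```

In the echo thread this would be called with addr->addr and a 16-byte local buffer, and the returned string printed with %s.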


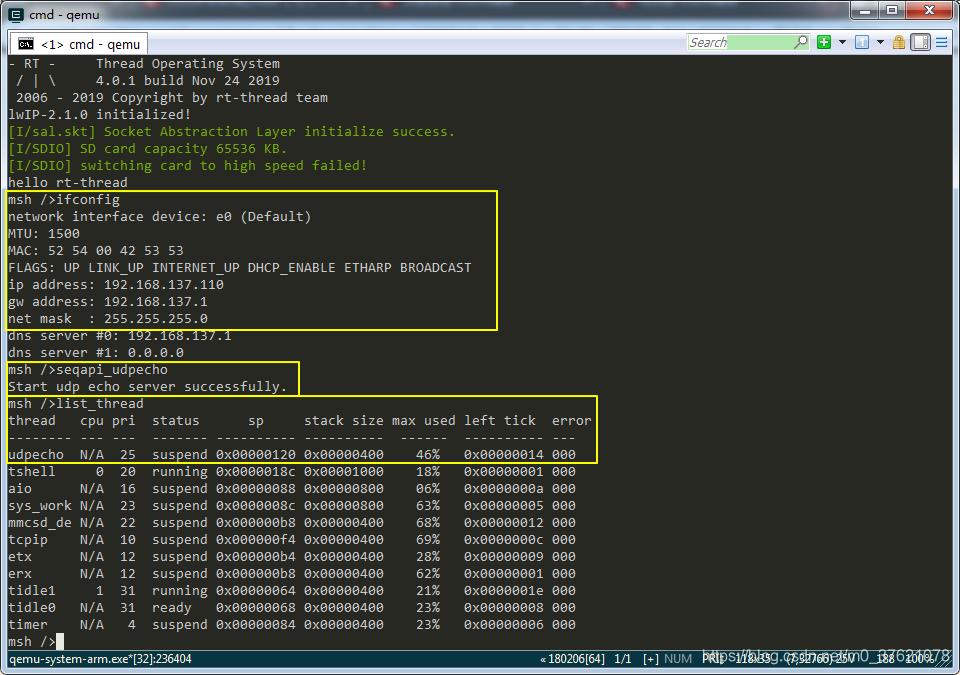

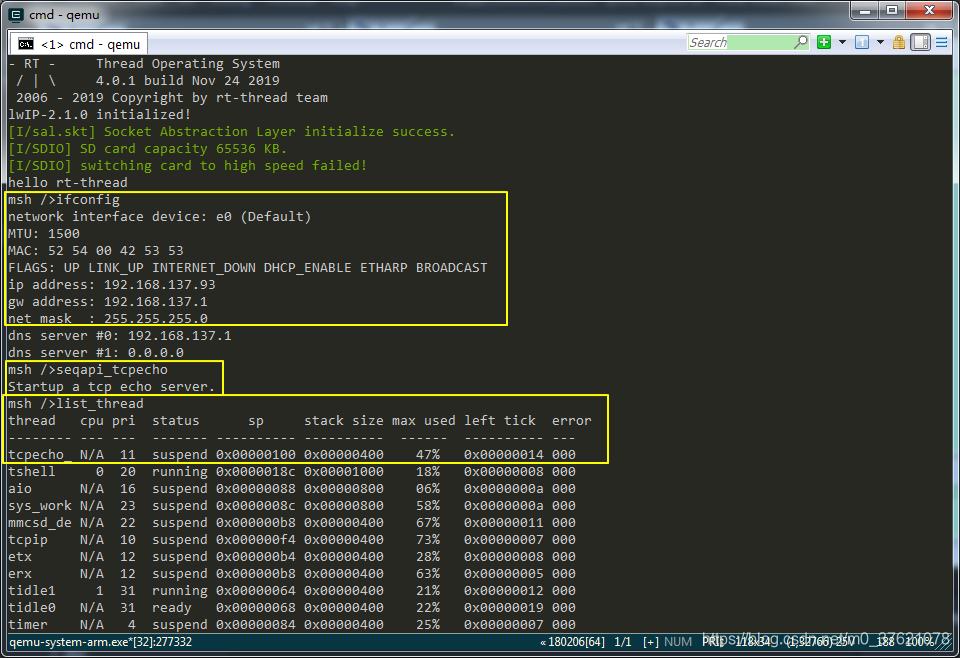

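Run scons in the env environment to build the project and run the qemu command to start the virtual machine. After confirming with ifconfig and ping that the NIC is configured, run seqapi_udpecho, the command alias exported by MSH_CMD_EXPORT_ALIAS, to start a UDP echo server. The output is: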

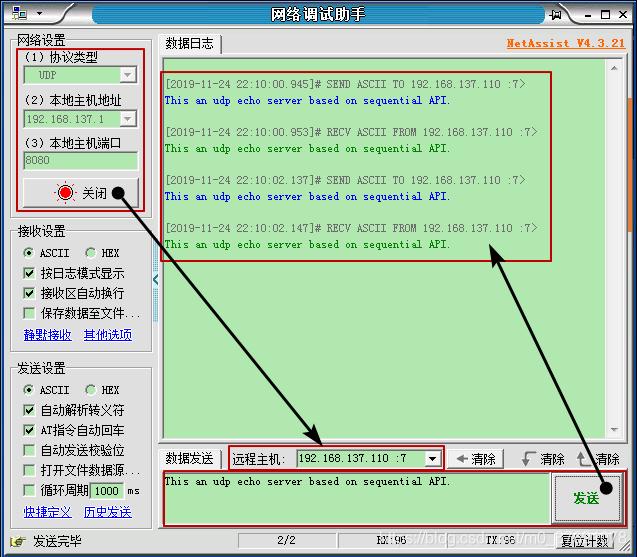

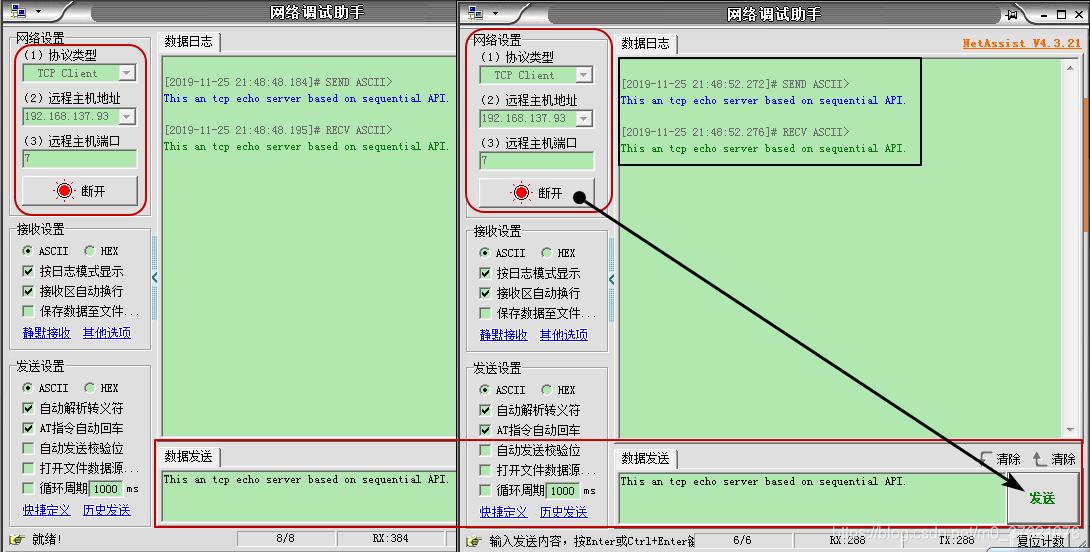

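Run a network debugging assistant, start a UDP client, and set the remote host IP address and port to 192.168.137.110:7 (the IP address comes from the ifconfig output above; the port is the one configured in the program). Send a string to the remote host (the UDP echo server just started), and the string comes back unchanged:

Download the example program: https://github.com/StreamAI/LwIP_Projects/tree/master/qemu-vexpress-a9

4.2 Web server example

The web server implements the simplest possible function: it opens local port 80 and accepts client connections; once a client has connected, it accepts the client's HTTP request, returns fixed HTML page data, and then actively closes the connection to the client. Here the Sequential API is used to build a simple web server. Create a new file seqapi_tcp_demo.c in the applications directory and add the following code: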

// applications\seqapi_tcp_demo.c

#include "lwip/api.h"

#include "rtthread.h"

const static char http_html_hdr[] = "HTTP/1.1 200 OK\r\nContent-type: text/html\r\n\r\n";

const static char http_index_html[] = "Congrats! \

Welcome to LwIP 1.4.1 HTTP server!

\

This is a test page based on netconn API.


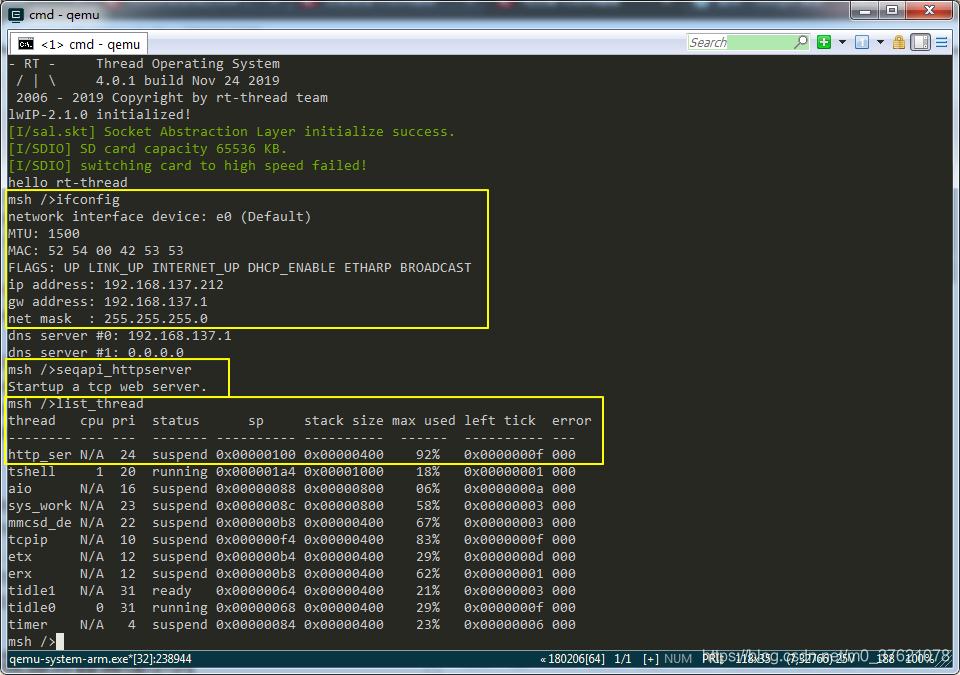

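Run scons in the env environment to build the project and run the qemu command to start the virtual machine. After confirming with ifconfig and ping that the NIC is configured, run seqapi_httpserver, the command alias exported by MSH_CMD_EXPORT_ALIAS, to start a TCP web server. The output is: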

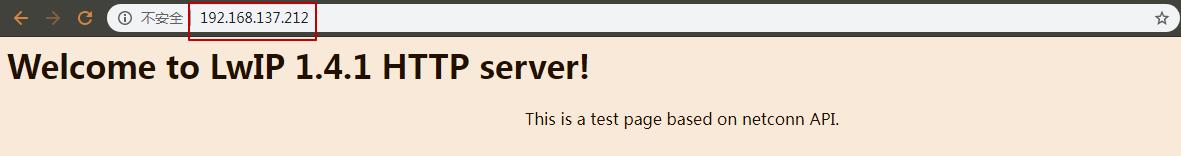

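Once the http_server thread has started, the TCP web server is running. Enter the address 192.168.137.212 in a browser (taken from the ifconfig output above) and the following page is returned:

This page is exactly the default page data configured when the TCP server was created. The server responds correctly when it is accessed from several hosts on the same LAN, or from several browser windows on one host, at the same time. Even so, the web server in this section is not concurrent: it cannot serve requests on several connections at the same moment; instead, connections queue in the kernel and are handled one at a time.

Download the example program: https://github.com/StreamAI/LwIP_Projects/tree/master/qemu-vexpress-a9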

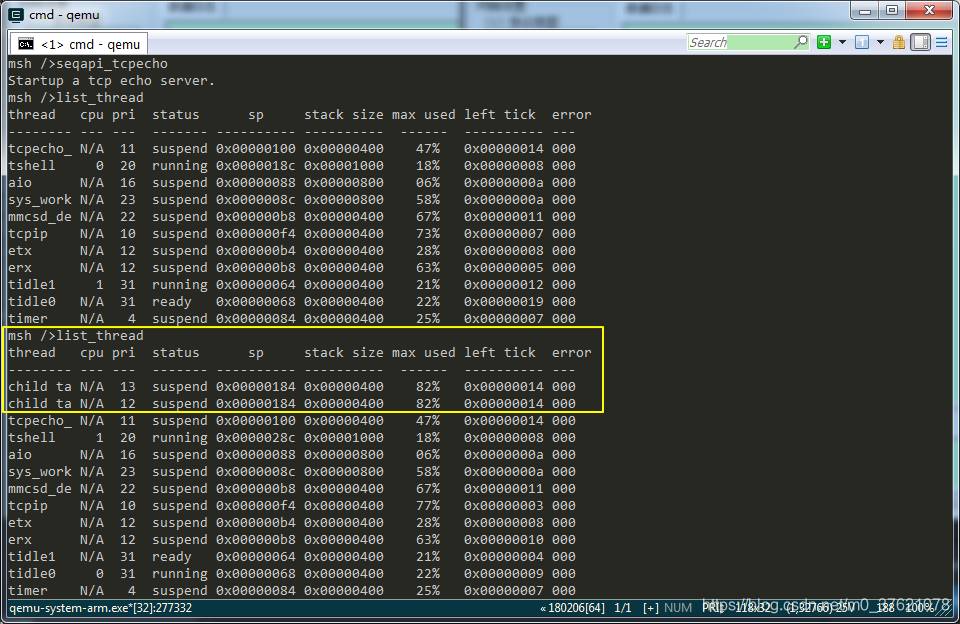

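4.3 Concurrent server example

A server designed with the Raw/Callback API supports multiple client connections, and the maximum number of clients depends on the number of kernel control blocks. With the Sequential API, a server that is to support several clients at once has to use multitasking, creating a separate task for each connection to handle its data. This design is called a concurrent server.

Because a concurrent server creates and destroys child tasks dynamically, the user has to manage and reclaim the task stacks, so the design is comparatively complex. The concurrent server does the following: it supports several client connections at the same time and echoes the data received on each connection back to its client in real time, providing a simple echo function. The Sequential API implementation is shown below (multithreading is based on the RT-Thread operating system):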

// applications\seqapi_concurrent_demo.c

#include "lwip/api.h"

#include "rtthread.h"

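//Maximum number of child tasks; should match the maximum number of connections the kernel supports

#define MAX_CONN_TASK (MEMP_NUM_NETCONN)

//Base priority for child tasks

#define CONN_TASK_PRIO_BASE (TCPIP_THREAD_PRIO + 2)

//Stack size of each child task

#define CONN_TASK_STACK_SIZE 1024

//Child task management structure

typedef struct conn_task

{

sys_thread_t tid; //child task handle

void* data; //connection data associated with the child task

}conn_task_t;

//Storage for managing all child tasks

static conn_task_t task[MAX_CONN_TASK];

//Forward declaration of the child task function

static void child_task(void *arg);

//Initialize the child task management structures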

static void conn_task_init(void)

{

int i = 0;

for(i = 0; i < MAX_CONN_TASK; i++)

{

task[i].tid = RT_NULL;