Introduction

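Hadoop's pseudo-distributed mode has little value in production, but it is very useful for testing and debugging programs. This article describes how to install and configure Hadoop 2.7.3 in pseudo-distributed mode on Ubuntu 16.04 LTS.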

Creating the Hadoop User

Create the hadoop user, set its password, and grant it administrator privileges.

bob@bob-virtual-machine:~$ sudo useradd -m hadoop -s /bin/bash # create the hadoop user

bob@bob-virtual-machine:~$ sudo passwd hadoop # set the password

bob@bob-virtual-machine:~$ sudo adduser hadoop sudo # grant administrator privileges

Installing and Configuring SSH

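Hadoop's control scripts (but not the daemons) rely on SSH to perform cluster-wide operations, such as starting or stopping the daemons on every host in the slaves list. For this to work seamlessly, once SSH is installed the hadoop user must be able to log in to the machines in the cluster without typing a password. The simplest approach is to generate a public/private key pair and place it on NFS so that it is shared across the whole cluster.

First, log in as the hadoop user and generate an RSA key pair with the following command.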

% ssh-keygen -t rsa -f ~/.ssh/id_rsa

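Although passwordless login is the goal, a key without a passphrase is not good practice (an empty passphrase is acceptable when running a local pseudo-distributed cluster). It is therefore best to specify a passphrase when prompted, and to use ssh-agent so that you do not have to enter it for every connection.

The private key is stored in the file given by the -f option, here ~/.ssh/id_rsa. The public key is stored in a file with the same name plus a ".pub" suffix, here ~/.ssh/id_rsa.pub.

Next, make sure the public key is in the ~/.ssh/authorized_keys file on every machine you intend to connect to.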

The concrete steps are as follows:

hadoop@bob-virtual-machine:~$ sudo apt-get install openssh-server

hadoop@bob-virtual-machine:~$ cd .ssh/

hadoop@bob-virtual-machine:~/.ssh$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:aXkPbR3dXlg/iNQtk0nCHeo/9aUE8x9PR4PMhz3umb4 hadoop@bob-virtual-machine

The key's randomart image is:

+---[RSA 2048]----+

| .o+o= .|

| ..*B==o|

| oo*=**|

| o.. =o+=|

| S o.o ++=|

| . . +..o+O|

| .o.++|

| o |

| E.|

+----[SHA256]-----+

hadoop@bob-virtual-machine:~/.ssh$ ls

id_rsa id_rsa.pub known_hosts

hadoop@bob-virtual-machine:~/.ssh$ cat id_rsa.pub >> authorized_keys

hadoop@bob-virtual-machine:~/.ssh$ ssh localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ECDSA key fingerprint is SHA256:1YeLhgGTygKaitVVyQCDDXKRCOHb59az/8fj0+nwvUI.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

Welcome to Ubuntu 16.04.3 LTS (GNU/Linux 4.10.0-28-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

104 packages can be updated.

61 updates are security updates.

Last login: Tue Jan 31 01:19:35 2017 from 10.220.33.31

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

1. If the .ssh directory does not exist, create it manually.

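2. ssh-keygen generates the key pair; -t specifies the key algorithm. When generating an RSA key here, the defaults are used, so you can press Enter at each prompt without typing anything.

3. Use "ssh localhost" to verify that passwordless login works. On the first login you are asked whether to continue connecting; type yes to proceed. Strictly speaking, passwordless login is not required to install Hadoop, but without it you must type a password for every machine running a DataNode each time Hadoop starts. Since Hadoop clusters often have hundreds or thousands of machines, passwordless SSH login is normally configured.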
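As a rough sketch of the three notes above, the setup can be scripted so that it is safe to run more than once. The function names and the SSH_DIR variable are illustrative assumptions, not part of Hadoop or OpenSSH. The chmod values reflect what sshd expects under its default StrictModes setting.

```shell
#!/usr/bin/env bash
# Sketch only: repeatable passwordless-SSH setup for a single-node machine.
# SSH_DIR, ensure_ssh_dir, authorize_key and main are hypothetical names.
set -eu

SSH_DIR="${SSH_DIR:-$HOME/.ssh}"

# Note 1: create the .ssh directory if it is missing, readable by the owner only.
ensure_ssh_dir() {
    mkdir -p "$SSH_DIR"
    chmod 700 "$SSH_DIR"
}

# Append a public key to authorized_keys unless the same line is already there.
authorize_key() {
    local pub="$1"
    local auth="$SSH_DIR/authorized_keys"
    touch "$auth"
    chmod 600 "$auth"
    if ! grep -qxF -- "$(cat "$pub")" "$auth"; then
        cat "$pub" >> "$auth"
    fi
}

main() {
    ensure_ssh_dir
    # Note 2: generate a key pair only if none exists (ssh-keygen prompts for a passphrase).
    [ -f "$SSH_DIR/id_rsa" ] || ssh-keygen -t rsa -f "$SSH_DIR/id_rsa"
    authorize_key "$SSH_DIR/id_rsa.pub"
    # Note 3: BatchMode makes ssh fail instead of prompting, so this succeeds only
    # when key-based login works and localhost is already in known_hosts.
    ssh -o BatchMode=yes localhost true && echo "passwordless login OK"
}

# Run the full setup only when asked to, e.g.: bash setup-ssh.sh --run
if [ "${1:-}" = "--run" ]; then
    main
fi
```

The BatchMode check is expected to fail on a fresh machine until "ssh localhost" has been accepted interactively once, and also when the key has a passphrase that is not loaded into ssh-agent.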

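Installing Java and Configuring the Environment

Hadoop is written mainly in Java, so the JDK must be installed and the corresponding environment variables configured before installing Hadoop. Use apt-get install to install the JDK and its documentation.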

hadoop@bob-virtual-machine:~$ sudo apt-get install openjdk-8-jdk

hadoop@bob-virtual-machine:~$ sudo apt-get install openjdk-8-doc

hadoop@bob-virtual-machine:~$ sudo apt-get install openjdk-8-source

Then set the JAVA_HOME environment variable:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

Configuring Hadoop Pseudo-Distributed Mode

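Hadoop has three run modes: standalone (single-node) mode, single-machine pseudo-distributed mode, and cluster mode. The first two cannot show the advantages of cloud computing and have little value in production, but they are very useful for testing and debugging programs. Pseudo-distributed mode is also simple to configure, and it is the focus of this section.

Installing Hadoop is fairly simple. Download the archive hadoop-2.7.3.tar.gz from the official site http://hadoop.apache.org, extract it to /usr/local, and set the owner of the hadoop directory.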

hadoop@bob-virtual-machine:~$ sudo tar -zxvf hadoop-2.7.3.tar.gz -C /usr/local

hadoop@bob-virtual-machine:~$ cd /usr/local

hadoop@bob-virtual-machine:/usr/local$ sudo ln -s hadoop-2.7.3 hadoop

hadoop@bob-virtual-machine:/usr/local$ sudo chown -R hadoop ./hadoop

hadoop@bob-virtual-machine:/usr/local$ ls -l

total 36

drwxr-xr-x 2 root root 4096 Aug 1 2017 bin

drwxr-xr-x 2 root root 4096 Aug 1 2017 etc

drwxr-xr-x 2 root root 4096 Aug 1 2017 games

lrwxrwxrwx 1 hadoop root 12 Jan 31 02:12 hadoop -> hadoop-2.7.3

drwxr-xr-x 9 root root 4096 Aug 17 21:49 hadoop-2.7.3

drwxr-xr-x 2 root root 4096 Aug 1 2017 include

drwxr-xr-x 4 root root 4096 Aug 1 2017 lib

lrwxrwxrwx 1 root root 9 Jan 30 18:20 man -> share/man

drwxr-xr-x 2 root root 4096 Aug 1 2017 sbin

drwxr-xr-x 8 root root 4096 Aug 1 2017 share

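drwxr-xr-x 2 root root 4096 Aug 1 2017 src

Note that in this listing only the hadoop symlink is owned by the hadoop user, while hadoop-2.7.3 is still owned by root: chown -R changes a symlink named on the command line without following it. If the hadoop user cannot write to the installation directory, run sudo chown -R hadoop ./hadoop-2.7.3 as well.

Then add Hadoop's bin and sbin directories to PATH in /etc/environment.

hadoop@bob-virtual-machine:~$ cat /etc/environment

PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/usr/local/hadoop/bin:/usr/local/hadoop/sbin"

1. core-site.xml configuration file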

core-site.xml is the Hadoop configuration file that holds the Hadoop Core settings, such as I/O settings common to HDFS and MapReduce. Here it configures the HDFS address, port number, and temporary directory:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

  <property>

    <name>hadoop.tmp.dir</name>

    <value>file:/usr/local/hadoop/tmp</value>

    <description>Abase for other temporary directories.</description>

  </property>

  <property>

    <name>fs.defaultFS</name>

    <value>hdfs://localhost:9000</value>

  </property>

</configuration>

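2. hdfs-site.xml configuration file

hdfs-site.xml holds the settings for the HDFS daemons: the NameNode, the secondary NameNode, and the DataNodes. In the configuration below, dfs.replication sets the number of block replicas; the default is 3, but since this is a pseudo-distributed setup it is changed to 1. dfs.namenode.name.dir sets the NameNode's working directory, and dfs.datanode.data.dir sets the DataNode's working directory. For background on the NameNode and DataNode, see this article's references.

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

  <property>

    <name>dfs.replication</name>

    <value>1</value>

  </property>

  <property>

    <name>dfs.namenode.name.dir</name>

    <value>file:/usr/local/hadoop/tmp/dfs/name</value>

  </property>

  <property>

    <name>dfs.datanode.data.dir</name>

    <value>file:/usr/local/hadoop/tmp/dfs/data</value>

  </property>

</configuration>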
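Once both files are saved, one way to confirm that Hadoop actually reads these values is to query them with hdfs getconf -confKey. The following is a minimal sketch, assuming hdfs is on PATH and can find Java; check_conf and HDFS_CMD are hypothetical helper names introduced only for this example.

```shell
#!/usr/bin/env bash
# Sketch only: compare a configured value with the one Hadoop reports.
# HDFS_CMD can be overridden, which also makes the helper easy to try out
# without a running installation.
HDFS_CMD="${HDFS_CMD:-hdfs}"

check_conf() {
    local key="$1" expected="$2" actual
    # "hdfs getconf -confKey <key>" prints the effective value of one property.
    actual="$("$HDFS_CMD" getconf -confKey "$key")"
    if [ "$actual" = "$expected" ]; then
        echo "OK $key=$actual"
    else
        echo "MISMATCH $key=$actual (expected $expected)"
        return 1
    fi
}

# Example calls for the values configured in this article:
# check_conf fs.defaultFS hdfs://localhost:9000
# check_conf dfs.replication 1
```

A mismatch usually means the file was edited in a different directory than the one Hadoop loads its configuration from.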

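3. hadoop-env.sh configuration file

hadoop-env.sh is Hadoop's environment variable configuration file. JAVA_HOME must be set in it; otherwise startup fails with the error “localhost: Error: JAVA_HOME is not set and could not be found.”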
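One way to find a suitable value is to resolve the real location of javac and strip the trailing /bin/javac. The following is a minimal sketch, assuming a JDK is installed and javac is on PATH; the function names are illustrative and not part of Hadoop.

```shell
#!/usr/bin/env bash
# Sketch only: derive a JAVA_HOME candidate from the javac binary.

# Strip the trailing /bin/javac from a resolved javac path.
jdk_home_from_javac() {
    local javac_path="$1"
    printf '%s\n' "${javac_path%/bin/javac}"
}

# Locate javac on PATH, follow symlinks, and print the JDK root.
detect_java_home() {
    local javac_bin
    javac_bin="$(command -v javac)" || {
        echo "javac not found on PATH" >&2
        return 1
    }
    jdk_home_from_javac "$(readlink -f "$javac_bin")"
}

# Example: print the line to place in hadoop-env.sh
# echo "export JAVA_HOME=$(detect_java_home)"
```

Writing the resolved path into the file as a literal keeps the value stable even if PATH differs in the non-interactive SSH sessions that the start scripts use.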

# The only required environment variable is JAVA_HOME. All others are

# optional. When running a distributed configuration it is best to

# set JAVA_HOME in this file, so that it is correctly defined on

# remote nodes.

# The java implementation to use.

export JAVA_HOME=$(readlink -f /usr/bin/javac | sed "s:/bin/javac::")

Starting Hadoop

Before starting Hadoop, format its file system, HDFS.

hadoop@bob-virtual-machine:~$ hdfs namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

17/01/31 03:22:47 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = bob-virtual-machine/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.3

......

17/01/31 03:22:48 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

17/01/31 03:22:49 INFO util.ExitUtil: Exiting with status 0

17/01/31 03:22:49 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at bob-virtual-machine/127.0.1.1

************************************************************/hadoop@bob-virtual-machine:~$ hadoop fs -mkdir -p /user/hadoop

hadoop@bob-virtual-machine:~$ hadoop fs -mkdir temp

hadoop@bob-virtual-machine:~$ hadoop fs -ls

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2017-01-31 05:03 temphadoop@bob-virtual-machine:~$ start-dfs.sh

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-namenode-bob-virtual-machine.out

localhost: starting datanode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-datanode-bob-virtual-machine.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-secondarynamenode-bob-virtual-machine.out

hadoop@bob-virtual-machine:~$ jps

10067 SecondaryNameNode

9717 NameNode

9846 DataNode

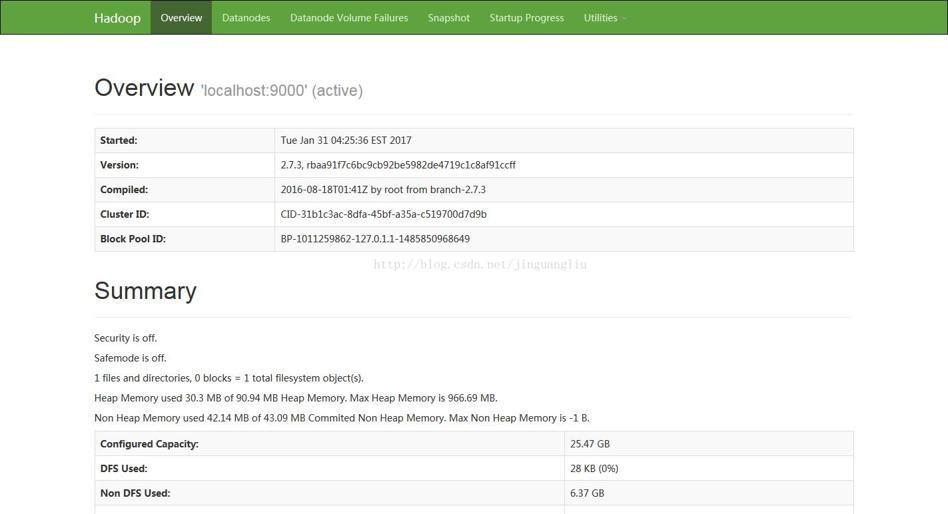

10189 Jps使用WEB浏览器可以访问http://localhost:50070来查看Hadoop状态信息:
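The jps check in this section can also be scripted. The sketch below reads jps output on standard input and prints any of the three HDFS daemons that are missing. missing_daemons and EXPECTED_DAEMONS are hypothetical names; the match is done on the whole process name so that SecondaryNameNode is not mistaken for NameNode.

```shell
#!/usr/bin/env bash
# Sketch only: report which expected HDFS daemons are absent from jps output.
EXPECTED_DAEMONS="NameNode DataNode SecondaryNameNode"

missing_daemons() {
    local names daemon missing=""
    # jps prints "<pid> <name>" per line; keep only the name column.
    names="$(awk '{print $2}')"
    for daemon in $EXPECTED_DAEMONS; do
        # -x matches whole lines only, so "NameNode" does not match "SecondaryNameNode".
        if ! printf '%s\n' "$names" | grep -qx "$daemon"; then
            missing="$missing $daemon"
        fi
    done
    # Print the missing names separated by spaces (empty line if none).
    printf '%s\n' "${missing# }"
}

# Example: jps | missing_daemons
```

If a daemon is reported missing, the corresponding .out and .log files under the logs directory shown in the start-dfs.sh output are the first place to look.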

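Common Problems

1. Startup fails with “localhost: Error: JAVA_HOME is not set and could not be found.” The JAVA_HOME environment variable is not being picked up; see the hadoop-env.sh configuration section of this article.

2. Running a hadoop fs command fails with an error like “ls: '.': No such file or directory”. This usually means the current user's working directory has not been created in HDFS; create it with hadoop fs -mkdir -p /user/[current login user].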
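For the second problem, here is a small sketch that creates the HDFS home directory only when it is missing. It assumes HDFS is running and hadoop is on PATH; hdfs_home_dir and ensure_hdfs_home are hypothetical names used only for this example.

```shell
#!/usr/bin/env bash
# Sketch only: make sure the current user has a home directory in HDFS.

# Build the conventional HDFS home path for a user name.
hdfs_home_dir() {
    printf '/user/%s\n' "$1"
}

# Create the directory unless "hadoop fs -test -d" reports that it exists.
ensure_hdfs_home() {
    local dir
    dir="$(hdfs_home_dir "$(whoami)")"
    hadoop fs -test -d "$dir" || hadoop fs -mkdir -p "$dir"
}

# Example: ensure_hdfs_home && hadoop fs -ls
```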
