Azure Kinect

最近想做一个物体的三维重建,就买了微软最新的深度相机,功能相比前两代虽然有了很大提升,但是可参考的资料确很少,最简单的图像获取,点云图像保存,都费了我一番功夫最后再参考了现有的资料以及结合相机的源码,完成了图像保存以及点云获取保存

直接上全部的代码

我用的是VS2017专业版,OpenCV4.2,Microsoft.Azure.Kinect.Sensor.1.4.1。

//C++

#include (xyzImage.get_stride_bytes()));

//cv_xyzImage.convertTo(cv_xyzImage_32F, CV_32FC3, 1.0 / 1000, 0);// 转为float,同时将单位从 mm 转换为 m.

//cv::imshow("xyzimage", cv_xyzImage_32F);

//show image

cv::imshow("color", cv_rgbImage_no_alpha);

cv::imshow("depth", cv_depth_8U);

cv::imshow("ir", cv_irImage_8U);

//save image

double time_rgb = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_rgb = std::to_string(time_rgb / 1000000) + ".png";

double time_d = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

depthImage.get_device_timestamp()).count());

std::string filename_d = std::to_string(time_d / 1000000) + ".png";

double time_ir = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

irImage.get_device_timestamp()).count());

std::string filename_ir = std::to_string(time_ir / 1000000) + ".png";

imwrite("./rgb/" + filename_rgb, cv_rgbImage_no_alpha);

imwrite("./depth/" + filename_d, cv_depth_8U);

imwrite("./ir/" + filename_ir, cv_irImage_8U);

//const int32_t TIMEOUT_IN_MS = 1000;

//std::string file_name;

//uint32_t device_count = 0;

//k4a_device_t device1 = NULL;

//k4a_device_configuration_t config1 = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

//k4a_capture_t capture1 = NULL;

//k4a_image_t depth_image = NULL;

//k4a_calibration_t calibration1;

k4a_image_t xy_table = NULL;

k4a_image_t point_cloud = NULL;

int point_count = 0;

double time_point = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_point = std::to_string(time_point / 1000000) + ".ply";

//file_name = "./pointcloud.ply";

/* device_count1 = k4a_device_get_installed_count();

if (device_count1 == 0)

{

printf("No K4A devices found

");

return 0;

}*/

/*if (K4A_RESULT_SUCCEEDED != k4a_device_open(K4A_DEVICE_DEFAULT, &device))

{

printf("Failed to open device

");

}*/

/* config1.depth_mode = K4A_DEPTH_MODE_WFOV_2X2BINNED;

config1.camera_fps = K4A_FRAMES_PER_SECOND_30;*/

/* k4a_device_get_calibration(device1, config1.depth_mode, config1.color_resolution, &calibration1);*/

k4a_image_create(K4A_IMAGE_FORMAT_CUSTOM,

k4aCalibration.depth_camera_calibration.resolution_width,

k4aCalibration.depth_camera_calibration.resolution_height,

k4aCalibration.depth_camera_calibration.resolution_width * (int)sizeof(k4a_float2_t),

&xy_table);

create_xy_table(&k4aCalibration, xy_table);

k4a_image_create(K4A_IMAGE_FORMAT_CUSTOM,

k4aCalibration.depth_camera_calibration.resolution_width,

k4aCalibration.depth_camera_calibration.resolution_height,

k4aCalibration.depth_camera_calibration.resolution_width * (int)sizeof(k4a_float3_t),

&point_cloud);

/* k4a_device_start_cameras(device, &config);

k4a_device_get_capture(device, &capture, TIMEOUT_IN_MS);*/

//depth_image = k4a_capture_get_depth_image(capture1);

if (depthImage == 0)

{

printf("Failed to get depth image from capture

");

}

generate_point_cloud(depthImage, xy_table, point_cloud, &point_count);

write_point_cloud(filename_point.c_str(), point_cloud, point_count);

/* k4a_image_release(depthImage);

k4a_capture_release(capture);*/

k4a_image_release(xy_table);

k4a_image_release(point_cloud);

//returnCode = 0;

//k4a_device_close(device1);

std::cout << "Acquiring!" << endl;

//写入depth.txt, rgb.txt文件

rgb_out << std::to_string(time_rgb / 1000000) << " " << "rgb/" << filename_rgb << endl;

d_out << std::to_string(time_d / 1000000) << " " << "depth/" << filename_d << endl;

ir_out << std::to_string(time_ir / 1000000) << " " << "ir/" << filename_ir << endl;

rgb_out << flush;

d_out << flush;

ir_out << flush;

k4aTransformation.destroy();

cv_rgbImage_with_alpha.release();

cv_rgbImage_no_alpha.release();

cv_depth.release();

cv_depth_8U.release();

cv_irImage.release();

cv_irImage_8U.release();

capture.reset();

if (cv::waitKey() == 'q')

{//按键采集,用户按下'q',跳出循环,结束采集

std::cout << "----------------------------------" << std::endl;

std::cout << "------------- closed -------------" << std::endl;

std::cout << "----------------------------------" << std::endl;

break;

}

}

else {

std::cout << "false: K4A_WAIT_RESULT_TIMEOUT." << std::endl;

}

}

cv::destroyAllWindows();

rgb_out << flush;

d_out << flush;

ir_out << flush;

rgb_out.close();

d_out.close();

ir_out.close();

// 释放,关闭设备

rgbImage.reset();

depthImage.reset();

irImage.reset();

capture.reset();

device.close();

return 1;

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

- 228

- 229

- 230

- 231

- 232

- 233

- 234

- 235

- 236

- 237

- 238

- 239

- 240

- 241

- 242

- 243

- 244

- 245

- 246

- 247

- 248

- 249

- 250

- 251

- 252

- 253

- 254

- 255

- 256

- 257

- 258

- 259

- 260

- 261

- 262

- 263

- 264

- 265

- 266

- 267

- 268

- 269

- 270

- 271

- 272

- 273

- 274

- 275

- 276

- 277

- 278

- 279

- 280

- 281

- 282

- 283

- 284

- 285

- 286

- 287

- 288

- 289

- 290

- 291

- 292

- 293

- 294

- 295

- 296

- 297

- 298

- 299

- 300

- 301

- 302

- 303

- 304

- 305

- 306

- 307

- 308

- 309

- 310

- 311

- 312

- 313

- 314

- 315

- 316

- 317

- 318

- 319

- 320

- 321

- 322

- 323

- 324

- 325

- 326

- 327

- 328

- 329

- 330

- 331

- 332

- 333

- 334

- 335

- 336

- 337

- 338

- 339

- 340

- 341

- 342

- 343

- 344

- 345

- 346

- 347

- 348

- 349

- 350

- 351

- 352

- 353

- 354

- 355

- 356

- 357

- 358

- 359

- 360

- 361

- 362

- 363

- 364

- 365

- 366

- 367

- 368

- 369

- 370

- 371

- 372

- 373

- 374

- 375

- 376

- 377

- 378

- 379

- 380

- 381

- 382

- 383

- 384

- 385

- 386

- 387

- 388

- 389

- 390

- 391

- 392

- 393

- 394

- 395

- 396

- 397

- 398

- 399

- 400

- 401

- 402

- 403

- 404

- 405

- 406

- 407

- 408

- 409

- 410

- 411

- 412

- 413

- 414

- 415

- 416

- 417

- 418

- 419

- 420

- 421

- 422

- 423

- 424

- 425

- 426

- 427

- 428

- 429

- 430

- 431

- 432

- 433

- 434

- 435

- 436

- 437

- 438

- 439

- 440

- 441

- 442

- 443

- 444

- 445

- 446

- 447

- 448

- 449

- 450

- 451

- 452

- 453

- 454

- 455

- 456

- 457

- 458

- 459

- 460

- 461

- 462

- 463

- 464

- 465

- 466

- 467

- 468

- 469

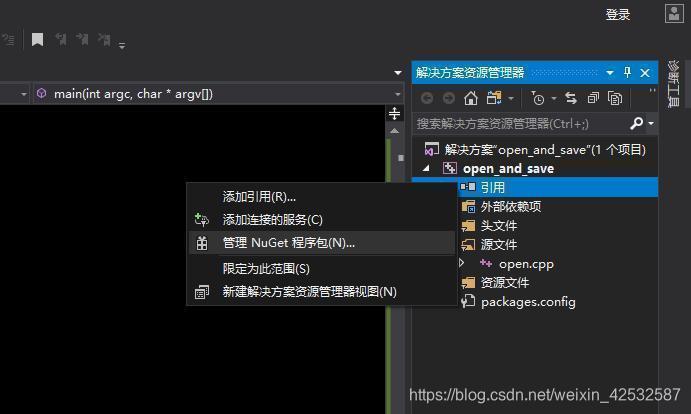

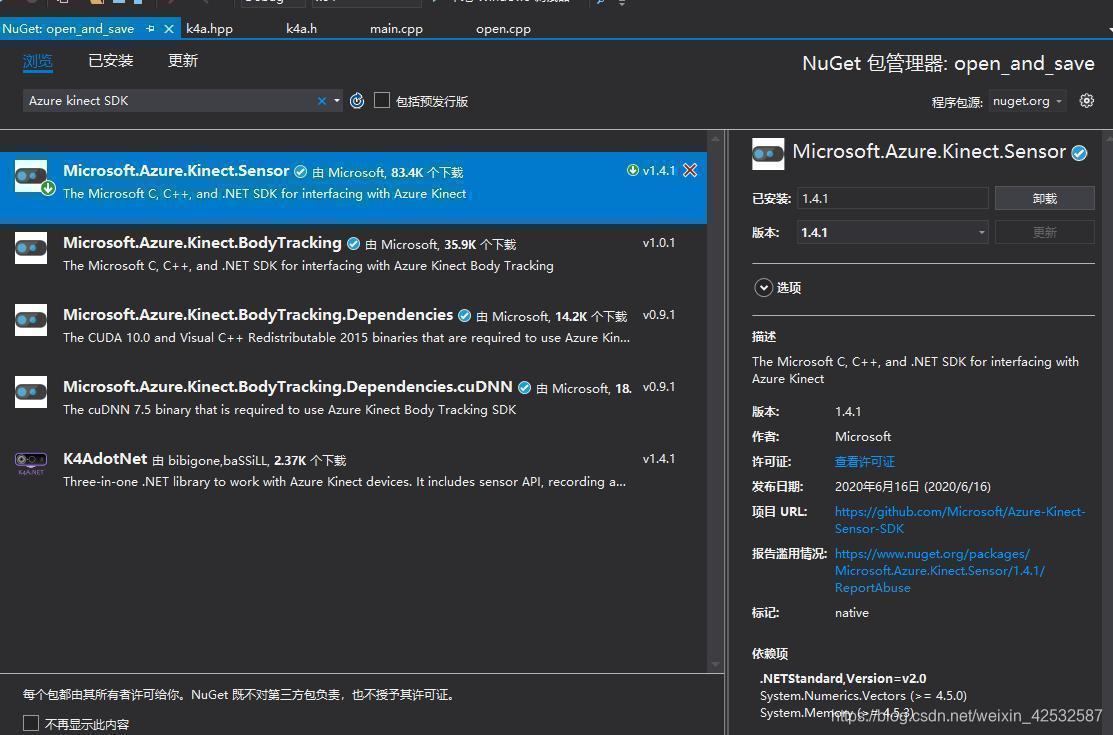

相关包的安装

本文用的OpenCV4.2以及深度相机的SDK包是直接通过NuGet程序包直接安装的,下面是每步的安装截图

同样,OpenCV也是相同的方法,但是当我安装OpenCV时,用低版本时总是报错,当用到4.2时才正常,我看网上的说是OpenCV低版本不支持VS2017,我也不知是不是这个原因,反正用到高版本时就行了,用NuGet直接安装OpenCV还有个好处是省了各种配置,只要装上就可用

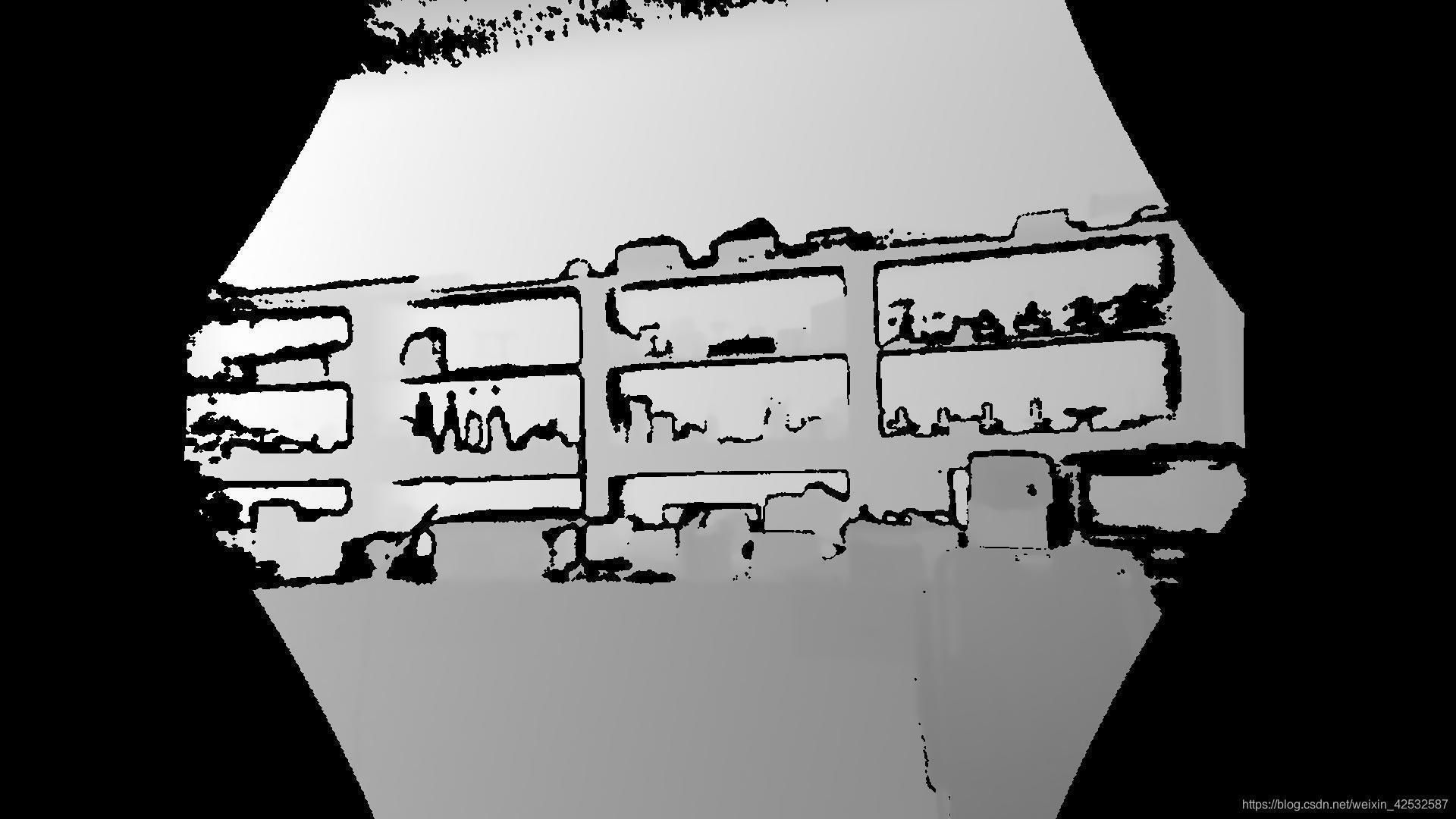

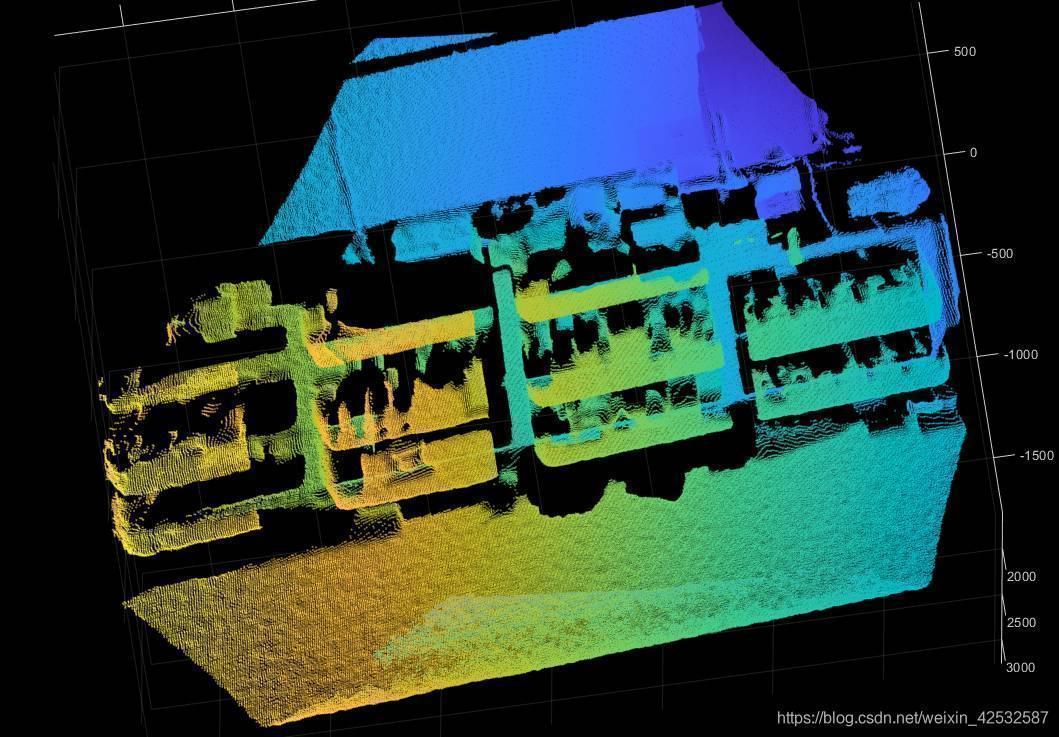

程序运行结果

放几张程序运行结果图

深度图的显示是根据这篇博客,采用归一化的方法进行显示保存,最后保存的图像依然是16位,后面尝试微软演示(5,6,5)的显示方式

点云可视化是利用保存的.ply文件进行可视化的,C++对我一个外行太不友好了

matlab显示代码如下

ptCloud = pcread('F:Depth_image_C++open_and_saveopen_and_save14.551055.ply'); % read from a PLY file

figure

pcshow(ptCloud);

title('Original Data');

- 1

- 2

- 3

- 4

文件保存

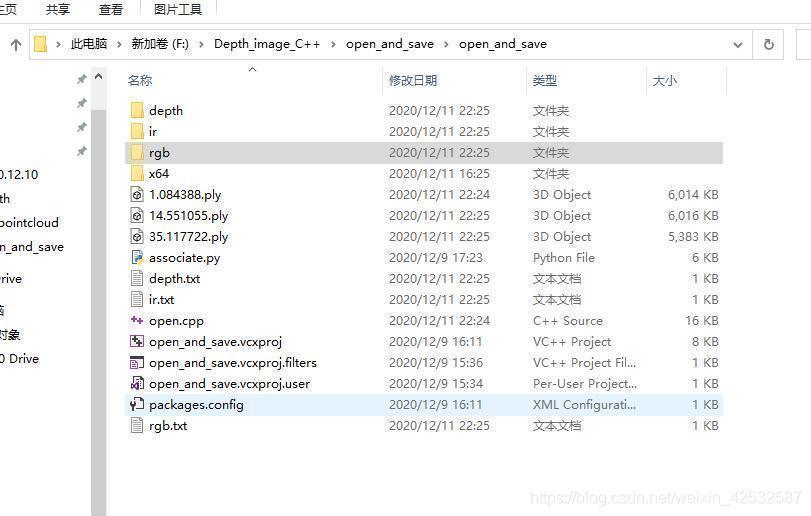

文件保存需要分别新建几个文件夹由于保存文件,如图

最后

这算是我自己学习过程中的一个记录,如果能够对你们有帮助那是更好了。

https://blog.csdn.net/qq_40936780/article/details/102634734?utm_medium=distribute.pc_relevant.none-task-blog-title-3&spm=1001.2101.3001.4242

https://blog.csdn.net/Zlp19970106/article/details/107120743/?utm_medium=distribute.pc_relevant.none-task-blog-title-2&spm=1001.2101.3001.4242

还有篇参考博客找不到了,等找到了再添加吧

评论记录:

回复评论: