
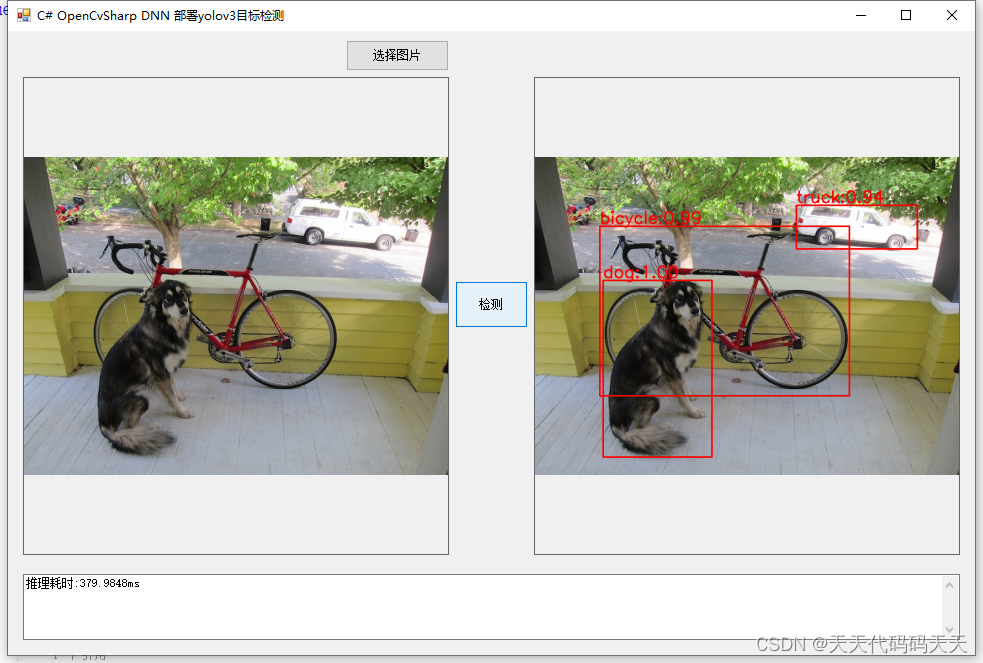

C# OpenCvSharp DNN: Deploying YOLOv3 Object Detection

Results

yolov3.cfg

`[net]` section (input size and training hyperparameters):

```ini
[net]
# Testing
#batch=1
#subdivisions=1
# Training
batch=16
subdivisions=1
width=416
height=416
channels=3
momentum=0.9
decay=0.0005
angle=0
saturation = 1.5
exposure = 1.5
hue=.1

learning_rate=0.001
burn_in=1000
max_batches = 500200
policy=steps
steps=400000,450000
scales=.1,.1
```
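The keys `burn_in`, `policy=steps`, `steps` and `scales` only matter when training with darknet. OpenCvSharp only runs inference, so they do not affect this demo.

The sketch below shows how they combine, under one assumption: this cfg sets no `power=` key, so `power` is taken to be darknet's default of 4.

- During the first `burn_in` iterations, the rate ramps up as `learning_rate * (iteration / burn_in)^power`.
- After that, the rate is multiplied by each `scales` entry once the iteration count reaches the matching `steps` entry.

`DarknetLrSchedule` and `RateAt` are hypothetical names, not part of any library.

```csharp
using System;

public static class DarknetLrSchedule
{
    // Learning rate at a given iteration for policy=steps with burn-in.
    // power = 4 is an assumption (darknet's default when the cfg has no "power=" key).
    public static double RateAt(long iteration, double learningRate, long burnIn,
                                long[] steps, double[] scales, double power = 4.0)
    {
        if (steps.Length != scales.Length)
            throw new ArgumentException("steps and scales must have the same length");

        // Warm-up: ramp from 0 towards learningRate over the first burnIn iterations.
        if (iteration < burnIn)
            return learningRate * Math.Pow((double)iteration / burnIn, power);

        // Afterwards: multiply by scales[i] once the iteration has reached steps[i].
        double rate = learningRate;
        for (int i = 0; i < steps.Length; i++)
        {
            if (steps[i] > iteration) return rate;
            rate *= scales[i];
        }
        return rate;
    }
}
```

With this cfg, the sketch gives the following rates until `max_batches = 500200`:

| Iterations | Rate |
| --- | --- |
| 1000 to 399999 | 0.001 |
| from 400000 | 0.0001 |
| from 450000 | 0.00001 |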

Darknet-53 backbone (layers 0 to 74):

```ini
[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky

# Downsample

[convolutional]
batch_normalize=1
filters=64
size=3
stride=2
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=32
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=64
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

# Downsample

[convolutional]
batch_normalize=1
filters=128
size=3
stride=2
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=64
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=64
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

# Downsample

[convolutional]
batch_normalize=1
filters=256
size=3
stride=2
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

# Downsample

[convolutional]
batch_normalize=1
filters=512
size=3
stride=2
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

# Downsample

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=2
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
filters=1024
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear
```
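Each `# Downsample` convolution uses `size=3`, `stride=2` and `pad=1`. Darknet reads `pad=1` as a padding of `size/2` pixels: 1 for 3×3 kernels and 0 for 1×1 kernels.

Applying the convolution size formula `(in + 2*padding - size) / stride + 1` five times shows why `width` and `height` should be multiples of 32. A 416×416 input shrinks to 208, 104, 52, 26 and finally 13.

This is a minimal sketch of that arithmetic only. `DarknetShapes` is a hypothetical helper.

```csharp
public static class DarknetShapes
{
    // Output width/height of a darknet [convolutional] layer.
    // With pad=1 darknet uses a padding of size/2 (1 for 3x3 kernels, 0 for 1x1 kernels).
    public static int ConvOut(int input, int size, int stride, bool padFlag = true)
    {
        int padding = padFlag ? size / 2 : 0;
        return (input + 2 * padding - size) / stride + 1;
    }

    // Spatial size after each of the five "# Downsample" layers (size=3, stride=2, pad=1).
    public static int[] DownsampleSizes(int input)
    {
        var sizes = new int[5];
        int current = input;
        for (int i = 0; i < sizes.Length; i++)
        {
            current = ConvOut(current, 3, 2);
            sizes[i] = current;
        }
        return sizes;
    }
}
```

Counting layers from 0, two backbone outputs are the last residual outputs at their resolution. The detection heads reuse both through `[route]`:

| Layer | Size | Channels |
| --- | --- | --- |
| 36 | 52×52 | 256 |
| 61 | 26×26 | 512 |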

Detection head 1 (13×13 grid for a 416×416 input, anchors selected by `mask = 6,7,8`):

```ini
######################

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=1024
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=1024
activation=leaky

[convolutional]
batch_normalize=1
filters=512
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=1024
activation=leaky

[convolutional]
size=1
stride=1
pad=1
filters=255
activation=linear

[yolo]
mask = 6,7,8
anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326
classes=80
num=9
jitter=.3
ignore_thresh = .7
truth_thresh = 1
random=1
```
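In each `[yolo]` block, `mask` selects which of the nine `anchors` pairs (width, height in input pixels) this scale predicts. The `[convolutional]` layer right before it must output `len(mask) * (classes + 5)` channels. For each anchor, that is 4 box offsets, 1 objectness score and one score per class.

With 3 anchors and 80 COCO classes, this gives 3 × 85 = 255, which is the `filters=255` above. When retraining for a different class count, update both `classes=` and that `filters=` value in all three heads.

This is a small sketch of both calculations. `YoloHead` is a hypothetical helper.

```csharp
using System;

public static class YoloHead
{
    // Channels the [convolutional] layer before a [yolo] layer must output:
    // per anchor, 4 box offsets + 1 objectness + one score per class.
    public static int FiltersFor(int classes, int anchorsPerScale) => anchorsPerScale * (classes + 5);

    // Picks the (width, height) anchor pairs selected by a [yolo] "mask" line.
    public static (int W, int H)[] MaskedAnchors(int[] anchors, int[] mask)
    {
        if (anchors.Length % 2 != 0) throw new ArgumentException("anchors must be width,height pairs");
        var result = new (int W, int H)[mask.Length];
        for (int i = 0; i < mask.Length; i++)
            result[i] = (anchors[2 * mask[i]], anchors[2 * mask[i] + 1]);
        return result;
    }
}
```

For example, `FiltersFor(1, 3)` is 18, the value a single-class model would need.

`mask = 6,7,8` selects the three largest anchors, (116,90), (156,198) and (373,326). That suits the coarsest 13×13 grid, whose cells cover the most image area.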

Detection head 2 (upsampled and concatenated with backbone layer 61, 26×26 grid, `mask = 3,4,5`):

```ini
[route]
layers = -4

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[upsample]
stride=2

[route]
layers = -1, 61

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=512
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=512
activation=leaky

[convolutional]
batch_normalize=1
filters=256
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=512
activation=leaky

[convolutional]
size=1
stride=1
pad=1
filters=255
activation=linear

[yolo]
mask = 3,4,5
anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326
classes=80
num=9
jitter=.3
ignore_thresh = .7
truth_thresh = 1
random=1
```
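`[route]` copies the output of the listed layers; with several entries, it concatenates them along the channel axis. Darknet treats negative entries as offsets from the route layer's own 0-based index and non-negative entries as absolute indices. Counting layers from 0:

- The first `[yolo]` is layer 82, so the route right after it is layer 83. Its `layers = -4` resolves to layer 79, the 512-channel 1×1 convolution at 13×13.
- The next route is layer 86. Its `layers = -1, 61` joins the upsampled 26×26 map (256 channels) with backbone layer 61 (512 channels), giving 768 channels.

This is a minimal sketch of that bookkeeping. `RouteLayer` is a hypothetical name.

```csharp
public static class RouteLayer
{
    // Darknet convention: a negative entry is relative to the route layer's own index,
    // a non-negative entry is an absolute layer index.
    public static int[] Resolve(int routeIndex, int[] layers)
    {
        var absolute = new int[layers.Length];
        for (int i = 0; i < layers.Length; i++)
            absolute[i] = layers[i] < 0 ? routeIndex + layers[i] : layers[i];
        return absolute;
    }

    // Concatenation along the channel axis: output channels are the sum of the inputs' channels.
    public static int ConcatChannels(params int[] channels)
    {
        int sum = 0;
        foreach (int c in channels) sum += c;
        return sum;
    }
}
```

The third head follows the same pattern. Its route at layer 98 has `layers = -1, 36`, which resolves to layers 97 and 36: 128 upsampled channels plus 256 from the backbone, 384 in total.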

Detection head 3 (upsampled and concatenated with backbone layer 36, 52×52 grid, `mask = 0,1,2`):

```ini
[route]
layers = -4

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[upsample]
stride=2

[route]
layers = -1, 36

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=256
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=256
activation=leaky

[convolutional]
batch_normalize=1
filters=128
size=1
stride=1
pad=1
activation=leaky

[convolutional]
batch_normalize=1
size=3
stride=1
pad=1
filters=256
activation=leaky

[convolutional]
size=1
stride=1
pad=1
filters=255
activation=linear

[yolo]
mask = 0,1,2
anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326
classes=80
num=9
jitter=.3
ignore_thresh = .7
truth_thresh = 1
random=1
```
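Each of the three heads predicts 3 boxes per grid cell. Take a raw prediction `(tx, ty, tw, th)` in cell `(col, row)` with anchor `(pw, ph)`. YOLOv3 decodes it as:

- `cx = (col + sigmoid(tx)) / gridW`
- `cy = (row + sigmoid(ty)) / gridH`
- `w = pw * exp(tw) / netW`
- `h = ph * exp(th) / netH`

As far as we understand, OpenCV's `[yolo]` layer applies this decoding inside the network. The rows returned by `Forward` therefore already hold normalized `cx, cy, w, h`, which is why the C# code only multiplies them by the image size.

The sketch reimplements the formula for one prediction and counts the output rows. `YoloDecode` is a hypothetical helper, not an OpenCV API.

```csharp
using System;

public static class YoloDecode
{
    static double Sigmoid(double v) => 1.0 / (1.0 + Math.Exp(-v));

    // YOLOv3 box decoding for one anchor in grid cell (col, row).
    // Returns center and size normalized to [0, 1] relative to the network input.
    public static (double Cx, double Cy, double W, double H) Decode(
        double tx, double ty, double tw, double th,
        int col, int row, int gridW, int gridH,
        double anchorW, double anchorH, int netW, int netH)
    {
        double cx = (col + Sigmoid(tx)) / gridW;
        double cy = (row + Sigmoid(ty)) / gridH;
        double w = anchorW * Math.Exp(tw) / netW;  // anchors are given in input pixels
        double h = anchorH * Math.Exp(th) / netH;
        return (cx, cy, w, h);
    }

    // Number of predictions over the three heads (strides 32, 16 and 8).
    public static int TotalPredictions(int netSize, int anchorsPerScale = 3)
    {
        int total = 0;
        foreach (int stride in new[] { 32, 16, 8 })
        {
            int grid = netSize / stride;
            total += grid * grid * anchorsPerScale;
        }
        return total;
    }
}
```

At 416×416 the three heads hold 13²·3 + 26²·3 + 52²·3 = 10,647 predictions. The C# loop scans each one once and keeps only those above `confThreshold`.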

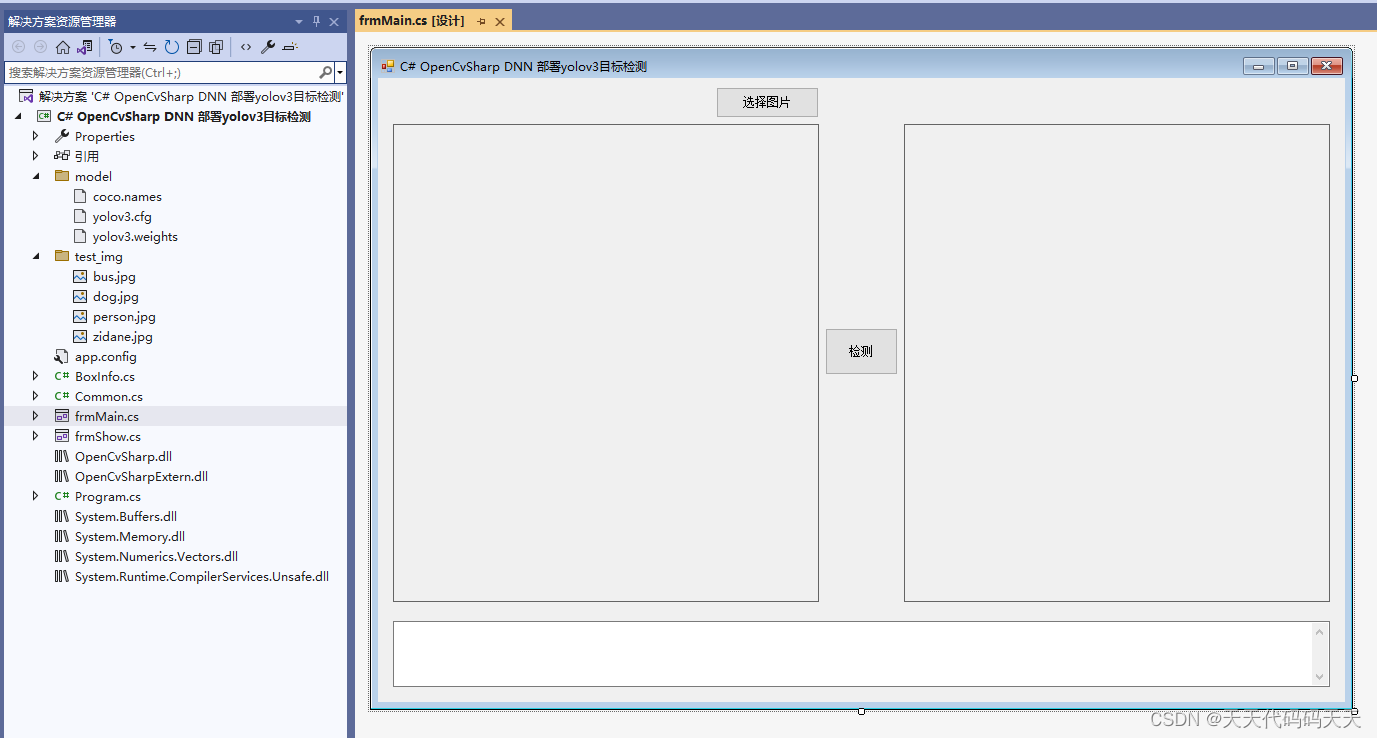

Project

Code

```csharp
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System;
using System.Collections.Generic;
using System.Drawing;
using System.IO;
using System.Linq;
using System.Windows.Forms;

namespace OpenCvSharp_DNN_Demo
{
    public partial class frmMain : Form
    {
        public frmMain()
        {
            InitializeComponent();
        }

        string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tiff;*.tif;*.png";
        string image_path = "";

        DateTime dt1 = DateTime.Now;
        DateTime dt2 = DateTime.Now;

        float confThreshold;
        float nmsThreshold;

        int inpHeight;
        int inpWidth;

        List<string> class_names;
        int num_class;

        Net opencv_net;
        Mat BN_image;

        Mat image;
        Mat result_image;

        private void button1_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;

            pictureBox1.Image = null;
            pictureBox2.Image = null;
            textBox1.Text = "";

            image_path = ofd.FileName;
            pictureBox1.Image = new Bitmap(image_path);
            image = new Mat(image_path);
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            confThreshold = 0.5f;  // minimum class score for a detection
            nmsThreshold = 0.4f;   // IoU threshold for non-maximum suppression

            // Must match width/height in the [net] section of yolov3.cfg
            inpHeight = 416;
            inpWidth = 416;

            opencv_net = CvDnn.ReadNetFromDarknet("model/yolov3.cfg", "model/yolov3.weights");

            // coco.names: one class name per line, line index = class id
            class_names = new List<string>();
            using (StreamReader sr = new StreamReader("model/coco.names"))
            {
                string line;
                while ((line = sr.ReadLine()) != null)
                {
                    class_names.Add(line);
                }
            }
            num_class = class_names.Count;

            image_path = "test_img/dog.jpg";
            pictureBox1.Image = new Bitmap(image_path);
        }

        // unsafe: the output Mats are read through a raw float* (requires AllowUnsafeBlocks)
        private unsafe void button2_Click(object sender, EventArgs e)
        {
            if (image_path == "")
            {
                return;
            }
            textBox1.Text = "Detecting, please wait...";
            pictureBox2.Image = null;
            Application.DoEvents();

            image = new Mat(image_path);

            // Scale to [0,1], resize to 416x416 without cropping, swap BGR -> RGB
            BN_image = CvDnn.BlobFromImage(image, 1 / 255.0, new OpenCvSharp.Size(inpWidth, inpHeight), new Scalar(0, 0, 0), true, false);

            // Set the input blob
            opencv_net.SetInput(BN_image);

            // Run inference and read the outputs of the three [yolo] layers
            var outNames = opencv_net.GetUnconnectedOutLayersNames();
            var outs = outNames.Select(_ => new Mat()).ToArray();

            dt1 = DateTime.Now;
            opencv_net.Forward(outs, outNames);
            dt2 = DateTime.Now;

            List<int> classIds = new List<int>();
            List<float> confidences = new List<float>();
            List<Rect> boxes = new List<Rect>();

            // Each row: [cx, cy, w, h, objectness, 80 class scores], box values normalized to [0,1]
            for (int i = 0; i < outs.Length; ++i)
            {
                float* data = (float*)outs[i].Data;
                for (int j = 0; j < outs[i].Rows; ++j, data += outs[i].Cols)
                {
                    Mat scores = outs[i].Row(j).ColRange(5, outs[i].Cols);

                    double minVal, max_class_score;
                    OpenCvSharp.Point minLoc, classIdPoint;
                    // Get the value and location of the maximum score
                    Cv2.MinMaxLoc(scores, out minVal, out max_class_score, out minLoc, out classIdPoint);

                    if (max_class_score > confThreshold)
                    {
                        // Scale the normalized box to pixels and convert center to top-left corner
                        int centerX = (int)(data[0] * image.Cols);
                        int centerY = (int)(data[1] * image.Rows);
                        int width = (int)(data[2] * image.Cols);
                        int height = (int)(data[3] * image.Rows);
                        int left = centerX - width / 2;
                        int top = centerY - height / 2;

                        classIds.Add(classIdPoint.X);
                        confidences.Add((float)max_class_score);
                        boxes.Add(new Rect(left, top, width, height));
                    }
                }
            }

            int[] indices;
            CvDnn.NMSBoxes(boxes, confidences, confThreshold, nmsThreshold, out indices);

            result_image = image.Clone();

            for (int i = 0; i < indices.Length; ++i)
            {
                int idx = indices[i];
                Rect box = boxes[idx];
                Cv2.Rectangle(result_image, new OpenCvSharp.Point(box.X, box.Y), new OpenCvSharp.Point(box.X + box.Width, box.Y + box.Height), new Scalar(0, 0, 255), 2);
                string label = class_names[classIds[idx]] + ":" + confidences[idx].ToString("0.00");
                Cv2.PutText(result_image, label, new OpenCvSharp.Point(box.X, box.Y - 5), HersheyFonts.HersheySimplex, 1, new Scalar(0, 0, 255), 2);
            }

            pictureBox2.Image = new Bitmap(result_image.ToMemoryStream());
            textBox1.Text = "Inference time: " + (dt2 - dt1).TotalMilliseconds + "ms";
        }

        // Common.ShowNormalImg is a helper class from the project (not shown in this post)
        private void pictureBox2_DoubleClick(object sender, EventArgs e)
        {
            Common.ShowNormalImg(pictureBox2.Image);
        }

        private void pictureBox1_DoubleClick(object sender, EventArgs e)
        {
            Common.ShowNormalImg(pictureBox1.Image);
        }
    }
}
```
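`CvDnn.BlobFromImage(image, 1 / 255.0, new Size(416, 416), new Scalar(0, 0, 0), true, false)` does the following:

- It resizes the whole image to 416×416 (no crop, so the aspect ratio is stretched).
- It subtracts the zero mean and multiplies by 1/255.
- It swaps BGR to RGB.
- It returns a 1×3×416×416 float tensor in NCHW order.

The dependency-free sketch below shows only the layout and scaling step. It assumes an image that is already resized; resizing is left out. `BlobSketch.ToNchwRgb` is a hypothetical name, not an OpenCvSharp API.

```csharp
using System;

public static class BlobSketch
{
    // bgr: interleaved 8-bit BGR pixels, row-major, length = width * height * 3 (already resized).
    // Returns a 1x3xHxW tensor flattened in NCHW order, channels R, G, B, each scaled by 1/255.
    public static float[] ToNchwRgb(byte[] bgr, int width, int height)
    {
        if (bgr.Length != width * height * 3)
            throw new ArgumentException("buffer length must be width * height * 3");

        int plane = width * height;
        var blob = new float[3 * plane];
        for (int p = 0; p < plane; p++)
        {
            byte b = bgr[3 * p], g = bgr[3 * p + 1], r = bgr[3 * p + 2];
            blob[p] = r / 255f;              // channel 0 = R (swapRB = true)
            blob[plane + p] = g / 255f;      // channel 1 = G
            blob[2 * plane + p] = b / 255f;  // channel 2 = B
        }
        return blob;
    }
}
```

Because the resize stretches the full frame rather than letterboxing it, normalized coordinates coming out of the network map directly onto the original image. This is why the form code scales them by `image.Cols` and `image.Rows`.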

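Inside `button2_Click`, every output row is read as `[cx, cy, w, h, objectness, 80 class scores]`, and each row goes through three steps:

1. `Cv2.MinMaxLoc` on `ColRange(5, Cols)` finds the best class.
2. Rows whose best score exceeds `confThreshold` are kept.
3. Each kept row's normalized center/size box becomes a pixel rectangle anchored at its top-left corner.

The loop never reads the objectness column. As far as we understand, OpenCV's `[yolo]` layer already multiplies the class scores by objectness.

The sketch restates the per-row logic on a plain `float[]`, which can help unit-test the post-processing without loading the model. `YoloRowParser` is a hypothetical helper.

```csharp
using System;

public static class YoloRowParser
{
    // row layout (assumed, as in the form code): [cx, cy, w, h, objectness, score_0 .. score_{C-1}],
    // with box values normalized to [0, 1].
    public static bool TryParse(float[] row, int imageWidth, int imageHeight, float confThreshold,
        out int classId, out float confidence, out (int Left, int Top, int Width, int Height) box)
    {
        if (row == null || row.Length < 6)
            throw new ArgumentException("row needs 4 box values, objectness and at least one class score");

        // Arg-max over the class scores, the same job Cv2.MinMaxLoc does on ColRange(5, Cols).
        classId = 0;
        confidence = row[5];
        for (int c = 6; c < row.Length; c++)
        {
            if (row[c] > confidence) { confidence = row[c]; classId = c - 5; }
        }

        box = default;
        if (confidence <= confThreshold) return false;

        // Normalized center/size -> pixel top-left corner and size.
        int centerX = (int)(row[0] * imageWidth);
        int centerY = (int)(row[1] * imageHeight);
        int width = (int)(row[2] * imageWidth);
        int height = (int)(row[3] * imageHeight);
        box = (centerX - width / 2, centerY - height / 2, width, height);
        return true;
    }
}
```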

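`CvDnn.NMSBoxes` considers only boxes scoring above `confThreshold`. It keeps the highest-scoring box and drops any other box whose intersection-over-union (IoU) with an already-kept box exceeds `nmsThreshold`.

A simplified greedy version is sketched below. It is not OpenCV's implementation, which also supports `eta` and `topK`, and `GreedyNms` is a hypothetical name.

Note that the form code passes all boxes to a single `NMSBoxes` call, so suppression happens across classes. An overlapping box of a different class can also be removed. Running the sketch once per class id is one way to avoid that.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class GreedyNms
{
    // Axis-aligned box in pixels: top-left corner (X, Y) plus width and height, like OpenCvSharp's Rect.
    public readonly struct Box
    {
        public Box(int x, int y, int width, int height) { X = x; Y = y; Width = width; Height = height; }
        public int X { get; }
        public int Y { get; }
        public int Width { get; }
        public int Height { get; }
        public long Area => (long)Width * Height;
    }

    // Intersection-over-union of two boxes; 0 when they do not overlap.
    public static double IoU(Box a, Box b)
    {
        int x1 = Math.Max(a.X, b.X);
        int y1 = Math.Max(a.Y, b.Y);
        int x2 = Math.Min(a.X + a.Width, b.X + b.Width);
        int y2 = Math.Min(a.Y + a.Height, b.Y + b.Height);
        long inter = (long)Math.Max(0, x2 - x1) * Math.Max(0, y2 - y1);
        long union = a.Area + b.Area - inter;
        return union > 0 ? (double)inter / union : 0.0;
    }

    // Returns the indices of the kept boxes, highest score first.
    public static List<int> Run(IList<Box> boxes, IList<float> scores, float scoreThreshold, float nmsThreshold)
    {
        var candidates = Enumerable.Range(0, boxes.Count)
            .Where(i => scores[i] > scoreThreshold)
            .OrderByDescending(i => scores[i]);

        var keep = new List<int>();
        foreach (int i in candidates)
        {
            // Keep box i only if it does not overlap any already-kept box too much.
            if (keep.All(k => IoU(boxes[i], boxes[k]) <= nmsThreshold))
                keep.Add(i);
        }
        return keep;
    }
}
```

For example, two 100×100 boxes offset by 10 pixels have an IoU of 8100 / 11900 ≈ 0.68. With `nmsThreshold = 0.4` the lower-scoring one is removed; with 0.7 both survive.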