目录

1 背景介绍:

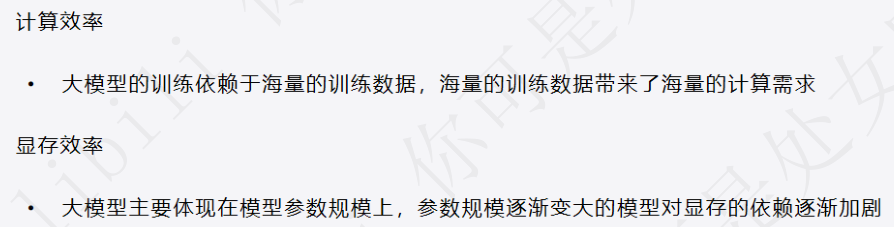

1.1 大模型训练的难点是什么?

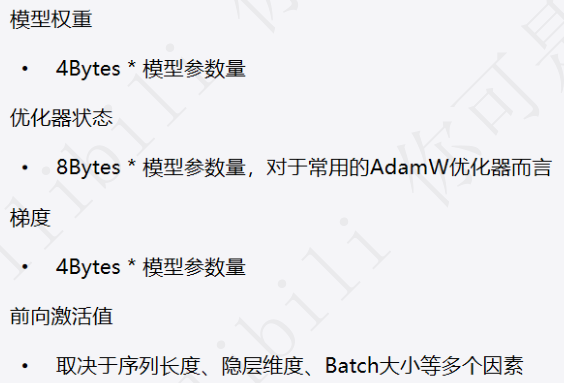

1.2 模型训练的显存占用:

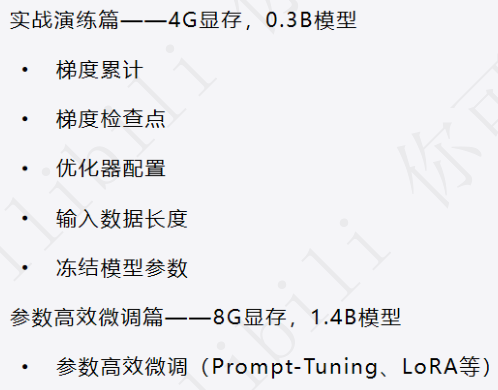

1.3 如何降低训练时的显存占用?

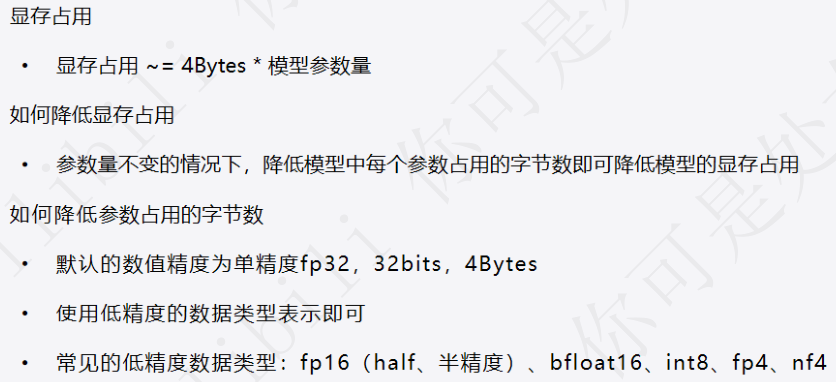

1.4 模型本身的显存占用:

之前我们节约显存占用的优化手段都是针对降低训练时的显存占用,那么如何降低模型本身的显存占用呢?这就需要涉及低精度问题。

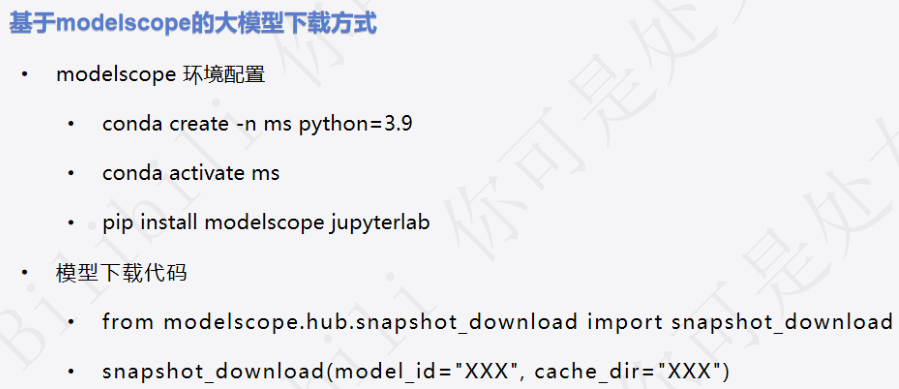

2 模型下载:

可以理解modelscope为一个更适合中国宝宝的transformers库,当然没有transformers那么完善,但用于下载模型还是可以的。

2.1 配置环境

pip install modelscope jupyterlab2.2 从modelscope上下载

- from modelscope.hub.snapshot_download import snapshot_download

-

- # model_id 模型id

- # cache_dir 本地缓存目录

- # ignore_file_pattern 无需下载的文件

- snapshot_download(

- model_id="Shanghai_AI_Laboratory/internlm-20b", cache_dir="F:\Modelscope_models"

- )

3 模型加载(以chat-glm为例):

因为transformers中没有chat_glm的官方实现,所以要设置trust_remote_code=True。

huggingface可以加载modelscope上的模型,但是不是所有模型都可以。

- from transformers import AutoTokenizer, AutoModel

-

- tokenizer = AutoTokenizer.from_pretrained("d:/Pretrained_models/ZhipuAI/chatglm2-6b/", trust_remote_code=True)

- tokenizer

ChatGLMTokenizer(name_or_path='d:/Pretrained_models/ZhipuAI/chatglm2-6b/', vocab_size=64794, model_max_length=1000000000000000019884624838656, is_fast=False, padding_side='left', truncation_side='right', special_tokens={}, clean_up_tokenization_spaces=False)

- model = AutoModel.from_pretrained("d:/Pretrained_models/ZhipuAI/chatglm2-6b/", trust_remote_code=True)

- model

- ChatGLMForConditionalGeneration(

- (transformer): ChatGLMModel(

- (embedding): Embedding(

- (word_embeddings): Embedding(65024, 4096)

- )

- (rotary_pos_emb): RotaryEmbedding()

- (encoder): GLMTransformer(

- (layers): ModuleList(

- (0): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (1): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (2): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (3): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (4): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (5): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (6): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (7): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (8): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (9): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (10): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (11): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (12): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (13): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (14): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (15): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (16): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (17): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (18): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (19): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (20): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (21): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (22): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (23): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (24): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (25): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (26): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- (27): GLMBlock(

- (input_layernorm): RMSNorm()

- (self_attention): SelfAttention(

- (query_key_value): Linear(in_features=4096, out_features=4608, bias=True)

- (core_attention): CoreAttention(

- (attention_dropout): Dropout(p=0.0, inplace=False)

- )

- (dense): Linear(in_features=4096, out_features=4096, bias=False)

- )

- (post_attention_layernorm): RMSNorm()

- (mlp): MLP(

- (dense_h_to_4h): Linear(in_features=4096, out_features=27392, bias=False)

- (dense_4h_to_h): Linear(in_features=13696, out_features=4096, bias=False)

- )

- )

- )

- (final_layernorm): RMSNorm()

- )

- (output_layer): Linear(in_features=4096, out_features=65024, bias=False)

- )

- )

评论记录:

回复评论: